Karger's algorithm

In computer science and graph theory, Karger's algorithm is a randomized algorithm to compute a minimum cut of a connected graph.

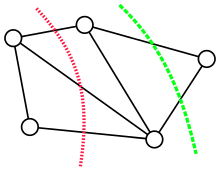

[1] The idea of the algorithm is based on the concept of contraction of an edge

Informally speaking, the contraction of an edge merges the nodes

into one, reducing the total number of nodes of the graph by one.

are "reattached" to the merged node, effectively producing a multigraph.

Karger's basic algorithm iteratively contracts randomly chosen edges until only two nodes remain; those nodes represent a cut in the original graph.

By iterating this basic algorithm a sufficient number of times, a minimum cut can be found with high probability.

The size (or weight) of a cut in an unweighted graph is the cardinality of the cutset, i.e., the number of edges between the two parts, There are

cut” for a given pair of vertices, which has the additional requirement that

Thus, the minimum cut problem can be solved in polynomial time by iterating over all choices of

cut problem using the max-flow min-cut theorem and a polynomial time algorithm for maximum flow, such as the push-relabel algorithm, though this approach is not optimal.

[2] The fundamental operation of Karger’s algorithm is a form of edge contraction.

When the graph is represented using adjacency lists or an adjacency matrix, a single edge contraction operation can be implemented with a linear number of updates to the data structure, for a total running time of

Alternatively, the procedure can be viewed as an execution of Kruskal’s algorithm for constructing the minimum spanning tree in a graph where the edges have weights

Removing the heaviest edge of this tree results in two components that describe a cut.

In this way, the contraction procedure can be implemented like Kruskal’s algorithm in time

vertices, the contraction algorithm returns a minimum cut with polynomially small probability

The contraction procedure finds each of these with equal probability.

To further establish the lower bound on the success probability, let

denote the edges of a specific minimum cut of size

if none of the random edges deleted by the algorithm belongs to the cutset

times with independent random choices and returning the smallest cut, the probability of not finding a minimum cut is The total running time for

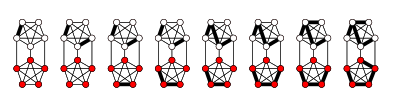

[3] The basic idea is to perform the contraction procedure until the graph reaches

that this contraction procedure avoids a specific cut

This motivates the idea of switching to a slower algorithm after a certain number of contraction steps.

that this random tree of successful calls contains a long-enough path to reach the base of the recursion and find

The running time of fastmincut satisfies with solution

This is an order of magnitude improvement over Karger’s original algorithm.

[3] To determine a min-cut, one has to touch every edge in the graph at least once, which is

The Karger–Stein's min-cut algorithm takes the running time of