Laws of thermodynamics

The laws state empirical facts that form a basis for ruling out certain phenomena, such as perpetual motion.

In addition to their use in thermodynamics, they are important fundamental laws of physics in general and are applicable in other natural sciences.

With the exception of non-crystalline solids (glasses), the entropy of a system at absolute zero is typically close to zero.

The laws of thermodynamics are the result of progress made in this field over the nineteenth and early twentieth centuries.

By 1860, as formalized in the works of scientists such as Rudolf Clausius and William Thomson, what are now known as the first and second laws were established.

Gradually, this resolved itself and a zeroth law was later added to allow for a self-consistent definition of temperature.

Some statements go further, so as to supply the important physical fact that temperature is one-dimensional and that one can conceptually arrange bodies in a real number sequence from colder to hotter.[5][6][7]

These concepts of temperature and of thermal equilibrium are fundamental to thermodynamics and were clearly stated in the nineteenth century.

The zeroth law allows temperature to be defined in a non-circular way, without reference to entropy, its conjugate variable.

(Note: an alternate sign convention, not used in this article, is to define W as the work done on the system by its surroundings.)
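For comparison, the two sign conventions can be written side by side. The symbols are assumptions made here for illustration, since the surrounding text does not fix them: ΔU is the change in internal energy of a closed system, Q is the heat supplied to it, W is the work done by the system on its surroundings, and W' (a label introduced only for this comparison) is the work done on the system.

$$
\Delta U = Q - W
\qquad\text{and}\qquad
\Delta U = Q + W', \quad W' = -W
$$

Both forms describe the same energy balance; only the sign attached to the work term changes.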

The first law encompasses several principles. Combining these principles leads to one traditional statement of the first law of thermodynamics: it is not possible to construct a machine that will perpetually output work without an equal amount of energy input to that machine.
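A short sketch of the reasoning behind this statement, assuming the machine operates in a cycle and using the convention in which Q is the heat supplied to the system and W is the work done by it: internal energy U is a state function, so it returns to its starting value after each complete cycle.

$$
\oint dU = 0
\quad\Longrightarrow\quad
W_{\text{cycle}} = Q_{\text{cycle}}
$$

Under these assumptions the net work delivered over a cycle can never exceed the net energy supplied, which is what the statement above excludes.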

One of the simplest statements of the second law is the Clausius statement: heat does not spontaneously pass from a colder to a hotter body.

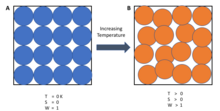

In terms of entropy, the second law implies that when two initially isolated systems in separate but nearby regions of space, each in thermodynamic equilibrium with itself but not necessarily with each other, are allowed to interact, they will eventually reach a mutual thermodynamic equilibrium.
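The approach to mutual equilibrium can be illustrated numerically for the simplest case. The following is a minimal sketch, not a general treatment: it assumes two bodies with constant heat capacities that exchange only heat (no work, no phase change, no exchange with anything else). The function name `equilibrate` and the numerical values are hypothetical.

```python
import math

def equilibrate(c1, t1, c2, t2):
    """Return (final temperature, total entropy change) for two bodies
    brought into thermal contact while isolated from everything else.

    Assumptions (illustrative only): heat capacities c1, c2 in J/K are
    constant, temperatures t1, t2 are in kelvin, and only heat is exchanged.
    """
    # Energy conservation: heat lost by one body equals heat gained by the other.
    t_final = (c1 * t1 + c2 * t2) / (c1 + c2)
    # Entropy change of each body is the integral of C dT / T from its
    # initial temperature to the common final temperature.
    delta_s = c1 * math.log(t_final / t1) + c2 * math.log(t_final / t2)
    return t_final, delta_s

# Hypothetical example: two bodies of equal heat capacity at 350 K and 290 K.
t_final, delta_s = equilibrate(1000.0, 350.0, 1000.0, 290.0)
print(f"T_final = {t_final:.1f} K, total entropy change = {delta_s:.3f} J/K")
```

In this model the total entropy change is positive whenever the initial temperatures differ and is zero when they are already equal, which matches the direction of heat flow in the Clausius statement.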

Entropy may also be viewed as a physical measure concerning the microscopic details of the motion and configuration of a system, when only the macroscopic states are known.

For two given macroscopically specified states of a system, there is a mathematically defined quantity called the 'difference of information entropy between them'.

This is why entropy increases in natural processes – the increase tells how much extra microscopic information is needed to distinguish the initial macroscopically specified state from the final macroscopically specified state.
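The link between an entropy increase and missing microscopic information can be made concrete with Boltzmann's relation S = k_B ln Ω, where Ω is the number of microstates compatible with a macroscopic state. The sketch below is an illustration under stated assumptions, not a derivation: it takes all compatible microstates to be equally likely, and the function names and the free-expansion example are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def entropy_increase(ln_multiplicity_ratio):
    """Entropy change in J/K, given ln(Omega_final / Omega_initial)."""
    return K_B * ln_multiplicity_ratio

def extra_bits(delta_s):
    """Bits of microscopic information corresponding to an entropy change."""
    return delta_s / (K_B * math.log(2))

# Hypothetical example: one mole of ideal-gas particles expands freely into
# twice the volume, so each particle has twice as many positional states.
# The logarithm of the ratio is used directly because 2**N would overflow.
n_particles = 6.02214076e23
delta_s = entropy_increase(n_particles * math.log(2))
print(f"entropy increase = {delta_s:.3f} J/K")
print(f"extra information = {extra_bits(delta_s):.3e} bits")
```

In this example the extra information comes to one bit per particle: specifying which half of the doubled volume each particle occupies.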

With the exception of non-crystalline solids (e.g. glass), the residual entropy of a system is typically close to zero.

These relations are derived from statistical mechanics under the principle of microscopic reversibility (in the absence of external magnetic fields).