Light field

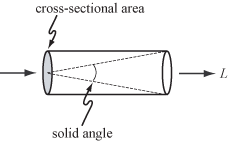

The radiance along all such rays in a region of three-dimensional space illuminated by an unchanging arrangement of lights is called the plenoptic function.

In computer graphics, this vector-valued function of 3D space is called the vector irradiance field.

Time, wavelength, and polarization angle can be treated as additional dimensions, yielding correspondingly higher-dimensional functions.

However, for locations outside the object's convex hull (e.g., shrink-wrap), the plenoptic function can be measured by capturing multiple images.

In this case the function contains redundant information, because the radiance along a ray remains constant throughout its length.
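One common way to exploit this redundancy is to index each ray by where it crosses two parallel planes, which gives four coordinates instead of five. The sketch below assumes such a two-plane parameterization; the function name `two_plane_coords` and the plane positions `z_uv` and `z_st` are illustrative choices, not taken from the text. It shows that two points on the same ray map to the same 4D coordinates, so storing radiance at both would be redundant.

```python
import numpy as np

def two_plane_coords(origin, direction, z_uv=0.0, z_st=1.0):
    """Return (u, v, s, t): where the ray crosses the planes z = z_uv and z = z_st."""
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    if direction[2] == 0.0:
        raise ValueError("ray is parallel to the parameterization planes")
    # Solve origin.z + k * direction.z = plane_z for each plane.
    k_uv = (z_uv - origin[2]) / direction[2]
    k_st = (z_st - origin[2]) / direction[2]
    u, v = (origin + k_uv * direction)[:2]
    s, t = (origin + k_st * direction)[:2]
    return np.array([u, v, s, t])

# Two different positions along one and the same ray (hypothetical values).
origin = np.array([0.2, -0.1, -3.0])
direction = np.array([0.1, 0.05, 1.0])
further_along = origin + 2.5 * direction

a = two_plane_coords(origin, direction)
b = two_plane_coords(further_along, direction)
```

Both `a` and `b` describe the same ray, so a single radiance value indexed by `(u, v, s, t)` suffices for every point along it in free space.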

Because a light field provides both spatial and angular information, the position of the focal plane can be altered after exposure, a process often termed refocusing.

The principle of refocusing is to obtain conventional 2-D photographs from a light field through an integral transform.

The transform takes a light field as its input and generates a photograph focused on a specific plane.

In practice, this formula cannot be directly used because a plenoptic camera usually captures discrete samples of the light field.
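With discrete samples, the integral is commonly approximated by shifting each sub-aperture view and averaging (often called shift-and-add). The following is a minimal sketch under several assumptions: the light field is stored as a hypothetical array `lf[u, v, s, t]`, the shear is taken to be `(1 - 1/alpha)` pixels per view index, the magnification by `1/alpha` is ignored, shifts are rounded to whole pixels instead of interpolated, and `np.roll` wraps around at the image border. The sign of the shift depends on how the views are indexed.

```python
import numpy as np

def refocus_shift_and_add(lf, alpha):
    """Approximate a refocused photo from lf[u, v, s, t] by shifting and averaging views."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    n_u, n_v, n_s, n_t = lf.shape
    u_c, v_c = (n_u - 1) / 2.0, (n_v - 1) / 2.0   # centre of the aperture grid
    shear = 1.0 - 1.0 / alpha                      # assumed units: pixels per view index
    photo = np.zeros((n_s, n_t), dtype=float)
    for u in range(n_u):
        for v in range(n_v):
            # Nearest-neighbour resampling: round the sheared offset to whole pixels.
            ds = int(round(shear * (u - u_c)))
            dt = int(round(shear * (v - v_c)))
            # Rolling by -ds samples the view at s + ds (wrapping at the border).
            photo += np.roll(lf[u, v], shift=(-ds, -dt), axis=(0, 1))
    return photo / (n_u * n_v)
```

With `alpha = 1` the shear vanishes and the result is the plain average of all views. Calling the function for a range of `alpha` values yields a set of photographs focused at different depths.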

[9] One way to reduce the computational complexity is to apply the Fourier slice theorem:[9] The photography operator

More precisely, a refocused image can be generated from the 4-D Fourier spectrum of a light field by extracting a 2-D slice, applying an inverse 2-D transform, and scaling.
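The simplest case of this relationship can be checked numerically. The sketch below covers only the unsheared case, in which the photograph is the plain sum over the aperture coordinates and the required 2-D slice is axis-aligned (zero frequency in `u` and `v`). For other focal planes the slice is tilted and must be interpolated from the spectrum, which is not shown. The array layout `lf[u, v, s, t]` and the random test data are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
lf = rng.random((4, 4, 16, 16))          # hypothetical light field lf[u, v, s, t]

# Spatial-domain route: integrate over the aperture coordinates (u, v).
photo_spatial = lf.sum(axis=(0, 1))

# Fourier-domain route: take the 4-D transform, extract the 2-D slice at zero
# (u, v) frequency, then apply an inverse 2-D transform.
spectrum = np.fft.fftn(lf)
photo_fourier = np.fft.ifft2(spectrum[0, 0]).real

max_abs_error = np.abs(photo_spatial - photo_fourier).max()
```

The two routes agree to floating-point precision. The practical benefit is that the 4-D transform is computed once, after which each refocused photograph needs only a 2-D slice and a 2-D inverse transform.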

Another way to efficiently compute 2-D photographs is to adopt the discrete focal stack transform (DFST).

[10] DFST is designed to generate a collection of refocused 2-D photographs, the so-called focal stack.

The algorithm consists of these steps:

In computer graphics, light fields are typically produced either by rendering a 3D model or by photographing a real scene.

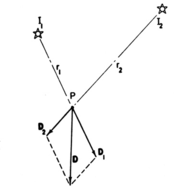

In either case, to produce a light field, views must be obtained for a large collection of viewpoints.

A light field capture of Michelangelo's statue of Night[18] contains 24,000 1.3-megapixel images, which is considered large as of 2022.
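To give a sense of scale, the sample count can be worked out directly from the figures above. The storage estimate assumes 3 bytes per pixel (8-bit RGB, uncompressed), which is an illustrative assumption and not a property of the actual dataset.

```python
images = 24_000
pixels_per_image = 1_300_000          # 1.3 megapixels
bytes_per_pixel = 3                   # assumption: 8-bit RGB, uncompressed

total_samples = images * pixels_per_image
total_gigabytes = total_samples * bytes_per_pixel / 1e9
print(f"{total_samples / 1e9:.1f} gigapixels, about {total_gigabytes:.1f} GB uncompressed")
# prints: 31.2 gigapixels, about 93.6 GB uncompressed
```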

For light field rendering to completely capture an opaque object, images must be taken of at least the front and back.

[25] Depending on the parameterization of the light field and slices, these views might be perspective, orthographic, crossed-slit,[26] general linear cameras,[27] multi-perspective,[28] or another type of projection.

Integrating an appropriate 4D subset of the samples in a light field can approximate the view that would be captured by a camera having a finite (i.e., non-pinhole) aperture.
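As a rough illustration, a finite aperture can be simulated by averaging only those views whose viewpoint falls inside a disc around the central view. The sketch assumes the light field is stored as `lf[u, v, s, t]` with viewpoints on a regular grid and measures `radius` in units of view spacing. The function name and return value are hypothetical.

```python
import numpy as np

def synthetic_aperture_photo(lf, radius):
    """Average the views lf[u, v] whose (u, v) index lies within `radius` of the centre."""
    n_u, n_v = lf.shape[:2]
    u, v = np.meshgrid(np.arange(n_u), np.arange(n_v), indexing="ij")
    dist = np.hypot(u - (n_u - 1) / 2.0, v - (n_v - 1) / 2.0)
    inside = dist <= radius                      # boolean aperture mask over (u, v)
    if not inside.any():
        raise ValueError("aperture contains no views")
    # Boolean indexing on the first two axes selects the views inside the aperture.
    return lf[inside].mean(axis=0), int(inside.sum())
```

A radius of zero on an odd-sized grid selects only the central view and reproduces a pinhole image. Larger radii include more views and produce a shallower depth of field.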

Shearing or warping the light field before performing this integration can focus on different fronto-parallel[29] or oblique[30] planes.

Presenting a light field using technology that maps each sample to the appropriate ray in physical space produces an autostereoscopic visual effect akin to viewing the original scene.

[31] Modern approaches to light-field display explore co-designs of optical elements and compressive computation to achieve higher resolutions, increased contrast, wider fields of view, and other benefits.

[32] Neural activity can be recorded optically by genetically encoding neurons with reversible fluorescent markers such as GCaMP that indicate the presence of calcium ions in real time.

Since light field microscopy captures full volume information in a single frame, it is possible to monitor neural activity in individual neurons randomly distributed in a large volume at video frame rates.

[36][42] Image generation and predistortion of synthetic imagery for holographic stereograms is one of the earliest examples of computed light fields.

[43] Glare arises from multiple scattering of light inside the camera body and lens optics, which reduces image contrast.

[45] Statistically analyzing the ray-space inside a camera allows the classification and removal of glare artifacts.

In ray-space, glare behaves as high-frequency noise and can be reduced by outlier rejection.
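One simple form of outlier rejection, shown here as a sketch and not as the method of the cited work, is to compare the rays arriving at each pixel with their median and discard those that deviate strongly before averaging. The input layout `(n_rays, height, width)`, the threshold factor `k`, and the use of the median absolute deviation (MAD) are all assumptions.

```python
import numpy as np

def reject_outliers_mean(samples, k=3.0):
    """Per-pixel mean over angular samples, ignoring values far from the median.

    samples has shape (n_rays, height, width); k scales the MAD-based threshold.
    """
    median = np.median(samples, axis=0)
    deviation = np.abs(samples - median)
    mad = np.median(deviation, axis=0)
    keep = deviation <= k * mad + 1e-12          # small epsilon handles mad == 0
    # Average only the retained rays at each pixel.
    return np.where(keep, samples, 0.0).sum(axis=0) / keep.sum(axis=0)

# Hypothetical example: a flat grey scene with glare on one ray at one pixel.
samples = np.full((9, 4, 4), 0.5)
samples[3, 1, 2] += 2.0
naive = samples.mean(axis=0)
cleaned = reject_outliers_mean(samples)
```

In this toy case the plain average is brightened at the affected pixel, while the outlier-rejecting average recovers the underlying value.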

Such analysis can be performed by capturing the light field inside the camera, but doing so sacrifices spatial resolution.

Uniform and non-uniform ray sampling can be used to reduce glare without significantly compromising image resolution.