Communication protocol

A protocol defines the rules, syntax, semantics, and synchronization of communication, as well as possible error recovery methods.

The ITU-T handles telecommunications protocols and formats for the public switched telephone network (PSTN).

Under the direction of Donald Davies, who pioneered packet switching at the National Physical Laboratory in the United Kingdom, a protocol for the NPL network was written by Roger Scantlebury and Keith Bartlett.

[5][6][7][8][9] On the ARPANET, the starting point for host-to-host communication in 1969 was the 1822 protocol, written by Bob Kahn, which defined the transmission of messages to an IMP.

[10] The Network Control Program (NCP) for the ARPANET, developed by Steve Crocker and other graduate students including Jon Postel and Vint Cerf, was first implemented in 1970.

[17] Research in the early 1970s by Bob Kahn and Vint Cerf led to the formulation of the Transmission Control Program (TCP).

[18] Its specification, RFC 675, was written by Cerf with Yogen Dalal and Carl Sunshine in December 1974; at this stage TCP was still a monolithic design.

[19] Separate international research, particularly the work of Rémi Després, contributed to the development of the X.25 standard, which is based on virtual circuits and was adopted by the CCITT in 1976.

For a period in the late 1980s and early 1990s, engineers, organizations and nations became polarized over the issue of which standard, the OSI model or the Internet protocol suite, would result in the best and most robust computer networks.

[24][25][26] The information exchanged between devices through a network or other media is governed by rules and conventions that can be set out in communication protocol specifications.

At the time the Internet was developed, abstraction layering had proven to be a successful design approach for both compilers and operating systems. Given the similarities between programming languages and communication protocols, the originally monolithic networking programs were decomposed into cooperating protocols.

The immediate human readability of text-based protocols stands in contrast to native binary protocols, which have inherent benefits for use in a computer environment (such as ease of mechanical parsing and improved bandwidth utilization).
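To make the trade-off concrete, the sketch below encodes the same two fields once as a human-readable text line and once as a fixed binary layout. The message format (a sequence number and a length, the `SEQ`/`LEN` keywords, CRLF termination) is a hypothetical example, not taken from any particular protocol; the binary side uses Python's standard `struct` module with network byte order.

```python
import struct

# Hypothetical message: a sequence number and a payload length.
seq, length = 42, 1500

# Text encoding (assumed format): readable by a person, CRLF-terminated line.
text_msg = f"SEQ {seq} LEN {length}\r\n".encode("ascii")

# Binary encoding: fixed layout in network byte order.
# "!" = big-endian, no padding; "I" = 4-byte unsigned; "H" = 2-byte unsigned.
binary_msg = struct.pack("!IH", seq, length)


def parse_text(msg: bytes) -> tuple:
    # Must tokenize the line and convert decimal digits back into integers.
    fields = msg.decode("ascii").split()
    return int(fields[1]), int(fields[3])


def parse_binary(msg: bytes) -> tuple:
    # A single fixed-offset unpack; no tokenizing or digit conversion.
    return struct.unpack("!IH", msg)


print(len(text_msg), len(binary_msg))  # prints "17 6"
print(parse_text(text_msg), parse_binary(binary_msg))
```

Here the text form is nearly three times larger and needs string parsing, while the binary form cannot be read without knowing its layout.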

An important aspect of concurrent programming is synchronizing the software that receives and transmits messages so that communication proceeds in the proper sequence.
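A minimal sketch of that synchronization, assuming a sender thread and a receiver thread in one process that share a thread-safe FIFO channel. The `sender`/`receiver` functions, the sequence-number check, and the `None` end-of-transmission marker are illustrative choices, not part of any specific protocol.

```python
import queue
import threading

SENTINEL = None  # hypothetical end-of-transmission marker


def sender(channel: queue.Queue, messages: list) -> None:
    # Tag each message with a sequence number so the receiver can check order.
    for seq, text in enumerate(messages):
        channel.put((seq, text))  # blocks while the bounded queue is full
    channel.put(SENTINEL)


def receiver(channel: queue.Queue, received: list) -> None:
    expected = 0
    while True:
        item = channel.get()  # blocks until the sender has produced something
        if item is SENTINEL:
            break
        seq, text = item
        if seq != expected:
            raise RuntimeError(f"out of order: got {seq}, expected {expected}")
        received.append(text)
        expected += 1


channel = queue.Queue(maxsize=4)  # bounded, so a fast sender waits for the receiver
messages = [f"msg-{i}" for i in range(10)]
received = []

t_recv = threading.Thread(target=receiver, args=(channel, received))
t_send = threading.Thread(target=sender, args=(channel, messages))
t_recv.start()
t_send.start()
t_send.join()
t_recv.join()
print(received == messages)  # True
```

The bounded queue provides flow control as well as ordering: the sender blocks whenever four messages are waiting, and the receiver blocks whenever none are.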

In the Internet, the transport layer rests on a datagram delivery and routing mechanism that is typically connectionless.
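Connectionless delivery can be seen directly with UDP sockets from Python's standard `socket` module. This sketch sends three datagrams over the loopback interface without any connection setup; each `sendto()` call carries its own destination address. The port is chosen by the operating system, and the message contents are arbitrary.

```python
import socket

# Receiver: bind a UDP socket to an OS-assigned port on the loopback interface.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)
addr = receiver.getsockname()

# Sender: no connect() or handshake; each datagram is addressed independently.
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
for i in range(3):
    sender.sendto(f"datagram {i}".encode("ascii"), addr)

# Each recvfrom() returns exactly one datagram, so message boundaries are preserved.
received = [receiver.recvfrom(2048)[0] for _ in range(3)]

sender.close()
receiver.close()
print(received)
```

On loopback the datagrams normally arrive intact and in order, but UDP itself guarantees neither delivery nor ordering. Protocols that need those properties, such as TCP, add them on top of the connectionless layer.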

Layering makes it possible to exchange technologies when needed; for example, protocols are often stacked in a tunneling arrangement to connect dissimilar networks.
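Tunneling amounts to encapsulation: a complete packet of one protocol, header included, is carried as the opaque payload of another. The sketch below assumes a made-up outer header (a 1-byte inner-protocol identifier plus a 2-byte length). `OUTER_HEADER`, `PROTO_INNER`, `encapsulate`, and `decapsulate` are hypothetical names and do not correspond to a real tunneling standard.

```python
import struct

# Hypothetical outer header: 1-byte inner-protocol id + 2-byte payload length,
# in network byte order ("!" means big-endian with no padding, so 3 bytes total).
OUTER_HEADER = struct.Struct("!BH")
PROTO_INNER = 0x2A  # made-up identifier for the encapsulated protocol


def encapsulate(inner_packet: bytes) -> bytes:
    # The whole inner packet becomes payload; the outer layer never interprets it.
    return OUTER_HEADER.pack(PROTO_INNER, len(inner_packet)) + inner_packet


def decapsulate(outer_packet: bytes) -> bytes:
    proto, length = OUTER_HEADER.unpack_from(outer_packet)
    if proto != PROTO_INNER:
        raise ValueError(f"unexpected inner protocol {proto:#x}")
    payload = outer_packet[OUTER_HEADER.size:OUTER_HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated packet")
    return payload


inner = b"\x01\x02INNER-HEADER|application data"
tunneled = encapsulate(inner)
assert decapsulate(tunneled) == inner
print(len(tunneled) - len(inner))  # 3 bytes of tunnel overhead
```

Because the outer layer treats the inner packet as opaque bytes, either side of the tunnel can change its inner protocol without the network in between having to understand it.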

Hardware and operating system independence is enhanced by expressing the protocol algorithms in a portable programming language.

In the absence of standardization, manufacturers and organizations felt free to enhance the protocol, creating incompatible versions on their networks.

Positive exceptions exist: a de facto standard operating system such as Linux does not exert this kind of negative grip on its market, because its source code is published and maintained openly, which invites competition.

The IEEE controls many software and hardware protocols in the electronics industry for commercial and consumer devices.

The ITU is an umbrella organization of telecommunication engineers designing the public switched telephone network (PSTN), as well as many radio communication systems.

International standards are reissued periodically to address deficiencies and reflect changing views on the subject.

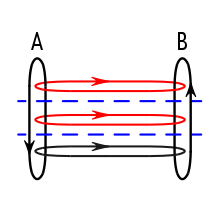

[75] In the OSI model, communicating systems are assumed to be connected by an underlying physical medium providing a basic transmission mechanism.

[citation needed] As a result, the IETF developed its own standardization process based on "rough consensus and running code".

RM/OSI has extended its model to include connectionless services; because of this, both TCP and IP could be developed into international standards.

[89] Unencrypted protocol metadata is one source of a protocol's wire image, the information that a non-participant observer can glean from its messages; side channels such as packet timing also contribute.

[92] If some portion of the wire image is not cryptographically authenticated, it is subject to modification by intermediate parties (i.e., middleboxes), which can influence protocol operation.

[90] Even if authenticated, if a portion is not encrypted, it will form part of the wire image, and intermediate parties may intervene depending on its content (e.g., dropping packets with particular flags).
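The difference between authenticating and encrypting part of the wire image can be sketched with HMAC-SHA256 from Python's standard `hmac` and `hashlib` modules. This sketch assumes a pre-shared key, a 1-byte flags header, and a hypothetical middlebox policy. It shows authentication only: a real protocol would normally also encrypt the payload (for example with an AEAD cipher), which the Python standard library does not provide. `seal`, `verify`, and `middlebox_allows` are illustrative names.

```python
import hashlib
import hmac

KEY = b"shared-secret-key"  # assumed pre-shared between the two endpoints
TAG_LEN = 32                # HMAC-SHA256 output size in bytes


def seal(flags: bytes, payload: bytes) -> bytes:
    # The 1-byte flags header stays in cleartext but is covered by the tag.
    body = flags + payload
    return body + hmac.new(KEY, body, hashlib.sha256).digest()


def verify(packet: bytes) -> bool:
    # Recompute the tag over everything except the trailing tag itself.
    body, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(KEY, body, hashlib.sha256).digest()
    return hmac.compare_digest(tag, expected)  # constant-time comparison


def middlebox_allows(packet: bytes) -> bool:
    # Hypothetical on-path policy: it cannot forge a valid tag, but it can
    # still read the unencrypted flags byte and drop packets based on it.
    return not (packet[0] & 0x80)


packet = seal(b"\x01", b"hello")
tampered = bytes([packet[0] ^ 0x01]) + packet[1:]  # a middlebox flips a flag bit

print(verify(packet), verify(tampered))  # True False
print(middlebox_allows(packet), middlebox_allows(seal(b"\x80", b"x")))  # True False
```

Authentication lets the receiver detect the modified flag, but the flag is still visible, so an intermediary can act on it. Keeping it out of the wire image would require encrypting it as well.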

[93] The wire image can be deliberately engineered, encrypting parts that intermediaries should not be able to observe and providing signals for what they should be able to.

[92] The IETF announced in 2014 that it had determined that large-scale surveillance of protocol operations is an attack due to the ability to infer information from the wire image about users and their behaviour,[96] and that the IETF would "work to mitigate pervasive monitoring" in its protocol designs;[97] this had not been done systematically previously.

[102][103] Engineering the wire image and controlling what signals are provided to network elements was a "developing field" in 2023, according to the IAB.