PCI Express

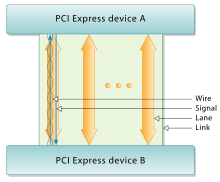

In contrast, PCI Express is based on point-to-point topology, with separate serial links connecting every device to the root complex (host).

When the interface clock period is shorter than the largest time difference between signal arrivals, recovery of the transmitted word is no longer possible.
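The timing constraint above can be written as a single comparison. The following is a minimal sketch, not a signal-integrity model: the function name and the arrival times are hypothetical, and it ignores the setup and hold margins a real parallel interface would also need.

```python
def skew_permits_recovery(arrival_times_ns, clock_period_ns):
    """Check the condition described in the text.

    arrival_times_ns: arrival time of each parallel signal line (hypothetical values).
    clock_period_ns:  interface clock period.
    Returns False when the clock period is shorter than the largest
    time difference between signal arrivals.
    """
    skew = max(arrival_times_ns) - min(arrival_times_ns)
    return clock_period_ns >= skew


# Three lines arriving 1.2 ns apart in the worst case.
arrivals = [0.0, 0.3, 1.2]
print(skew_permits_recovery(arrivals, clock_period_ns=2.0))
print(skew_permits_recovery(arrivals, clock_period_ns=1.0))
```

A serial link avoids this constraint because each lane carries its own embedded timing, so there is no set of parallel lines whose arrivals must line up within one clock period.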

The advantage is that such slots can accommodate a larger range of PCI Express cards without requiring motherboard hardware to support the full transfer rate.

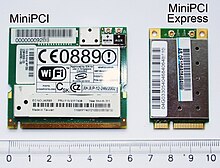

Most laptop computers built after 2005 use PCI Express for expansion cards; however, as of 2015, many vendors are moving toward using the newer M.2 form factor for this purpose.

Some notebooks (notably the Asus Eee PC, the Apple MacBook Air, and the Dell mini9 and mini10) use a variant of the PCI Express Mini Card as an SSD.

This variant uses the reserved and several non-reserved pins to implement SATA and IDE interface passthrough, keeping only USB, ground lines, and sometimes the core PCIe x1 bus intact.[46]

This makes the "miniPCIe" flash and solid-state drives sold for netbooks largely incompatible with true PCI Express Mini implementations.

[48] Computer bus interfaces provided through the M.2 connector are PCI Express 3.0 (up to four lanes), Serial ATA 3.0, and USB 3.0 (a single logical port for each of the latter two).

While initially intended for use in laptops for the connection of powerful external GPU boxes, OCuLink's popularity lies primarily in its use for PCIe interconnections in servers, a more prevalent application.

In June 2016, Cadence, PLDA and Synopsys demonstrated PCIe 4.0 physical-layer, controller, switch and other IP blocks at the PCI-SIG's annual developers conference.

[83] AMD had hoped to enable partial support for older chipsets, but instability caused by motherboard traces not conforming to PCIe 4.0 specifications made that impossible.

A notable exception, the Sony VAIO Z VPC-Z2, uses a nonstandard USB port with an optical component to connect to an outboard PCIe display adapter.

The Mobile PCIe specification (abbreviated to M-PCIe) allows PCI Express architecture to operate over the MIPI Alliance's M-PHY physical layer technology.

At the Draft 0.5 stage, however, there is still a strong likelihood of changes in the actual PCIe protocol layer implementation, so designers responsible for developing these blocks internally may be more hesitant to begin work than those using interface IP from external sources.

At the physical level, PCI Express 2.0 utilizes the 8b/10b encoding scheme[57] (line code) to ensure that strings of consecutive identical digits (zeros or ones) are limited in length.

Line encoding limits the run length of identical-digit strings in data streams and ensures the receiver stays synchronised to the transmitter via clock recovery.
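To illustrate why run length matters, the sketch below measures the longest run of identical digits in a bit string. It does not implement 8b/10b; `max_run_length` is a hypothetical helper, and the input strings are made-up examples of unencoded data.

```python
from itertools import groupby


def max_run_length(bits):
    """Return the length of the longest run of identical symbols in `bits`."""
    return max((len(list(group)) for _, group in groupby(bits)), default=0)


# Unencoded data can contain arbitrarily long runs, which give the
# receiver no transitions from which to recover the clock.
raw = "00000000" * 4          # four zero bytes sent without line coding
alternating = "01" * 16       # a transition on every bit

print(max_run_length(raw))
print(max_run_length(alternating))
```

A line code such as 8b/10b maps each byte to a 10-bit symbol chosen so that a check like this one stays small for any input (no more than five identical digits in a row), at the cost of two extra bits per byte.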

ACK and NAK signals are communicated via DLLPs, as are some power management messages and flow control credit information (on behalf of the transaction layer).

Like other high data rate serial interconnect systems, PCIe has a protocol and processing overhead due to the additional transfer robustness (CRC and acknowledgements).

Long continuous unidirectional transfers (such as those typical in high-performance storage controllers) can reach more than 95% of PCIe's raw (lane) data rate.

But in more typical applications (such as a USB or Ethernet controller), the traffic profile is characterized as short data packets with frequent enforced acknowledgements.[132]

This type of traffic reduces the efficiency of the link, due to overhead from packet parsing and forced interrupts (either in the device's host interface or the PC's CPU).
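The effect of packet size on link efficiency can be sketched with a simple ratio. This is a rough model under stated assumptions: the fixed per-packet overhead of 24 bytes is a hypothetical figure chosen for illustration, not a value from the text, and the model ignores acknowledgement traffic, flow control and interrupt handling.

```python
def payload_efficiency(payload_bytes, overhead_bytes=24):
    """Fraction of transmitted bytes that carry payload.

    overhead_bytes is an assumed fixed per-packet cost (framing, header,
    CRC); the default of 24 is illustrative only.
    """
    if payload_bytes < 0 or overhead_bytes < 0:
        raise ValueError("sizes must be non-negative")
    total = payload_bytes + overhead_bytes
    return payload_bytes / total if total else 0.0


# Short packets spend a larger share of the link on overhead.
for size in (16, 64, 256, 1024):
    print(size, round(payload_efficiency(size), 3))
```

Under this assumption the ratio rises toward 1 as packets grow, which matches the qualitative contrast drawn above between long streaming transfers and short, frequently acknowledged packets.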

As with any network-like communication link, some of the raw bandwidth is consumed by protocol overhead:[133] A PCIe 1.x lane, for example, offers a data rate on top of the physical layer of 250 MB/s (simplex).
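The 250 MB/s figure follows from the line rate and the encoding overhead. The sketch below works through the arithmetic, assuming the PCIe 1.x signalling rate of 2.5 GT/s per lane (not stated in this section) and 8b/10b encoding; the function name is hypothetical.

```python
def lane_data_rate_mb_s(transfer_rate_gt_s, payload_bits, coded_bits):
    """Per-lane data rate in MB/s after line-coding overhead.

    transfer_rate_gt_s: raw signalling rate in gigatransfers per second.
    payload_bits / coded_bits: line-code ratio, e.g. 8 and 10 for 8b/10b.
    """
    data_bits_per_s = transfer_rate_gt_s * 1e9 * payload_bits / coded_bits
    return data_bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes


# 2.5 GT/s with 8b/10b: 2.0 Gbit/s of data, i.e. 250 MB/s per direction.
print(lane_data_rate_mb_s(2.5, 8, 10))
```

This covers only the physical-layer encoding; packet headers, CRCs and acknowledgements reduce the usable rate further.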

AMD, Nvidia, and Intel have released motherboard chipsets that support as many as four PCIe x16 slots, allowing tri-GPU and quad-GPU card configurations.

Examples include MSI GUS,[139] Village Instrument's ViDock,[140] the Asus XG Station, Bplus PE4H V3.2 adapter,[141] as well as more improvised DIY devices.

[154] Certain data-center applications (such as large computer clusters) require the use of fiber-optic interconnects due to the distance limitations inherent in copper cabling.

Typically, a network-oriented standard such as Ethernet or Fibre Channel suffices for these applications, but in some cases the overhead introduced by routable protocols is undesirable and a lower-level interconnect, such as InfiniBand, RapidIO, or NUMAlink, is needed.

Local-bus standards such as PCIe and HyperTransport can in principle be used for this purpose,[155] but as of 2015, solutions are only available from niche vendors such as Dolphin ICS and TTTech Auto.

Other communications standards based on high bandwidth serial architectures include InfiniBand, RapidIO, HyperTransport, Intel QuickPath Interconnect, the Mobile Industry Processor Interface (MIPI), and NVLink.

Delays in PCIe 4.0 implementations led to the Gen-Z consortium, the CCIX effort and an open Coherent Accelerator Processor Interface (CAPI) all being announced by the end of 2016.

The initial promoters of the CXL specification included: Alibaba, Cisco, Dell EMC, Facebook, Google, HPE, Huawei, Intel and Microsoft.

- PCI Express x4

- PCI Express x16

- PCI Express x1

- PCI Express x16

- Conventional PCI (32-bit, 5 V)

White "junction boxes" represent PCI Express device downstream ports, while the gray ones represent upstream ports.[6]: 7