Pandemonium architecture

It describes the process of object recognition as the exchange of signals within a hierarchical system of detection and association, the elements of which Selfridge metaphorically termed "demons".

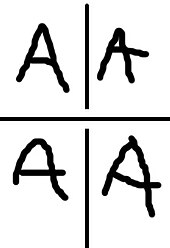

Pandemonium architecture arose in response to the inability of template matching theories to offer a biologically plausible explanation of the image constancy phenomenon.

Researchers have praised this architecture for its elegance and creativity: the idea of having multiple independent systems (e.g., feature detectors) working in parallel to address the image constancy phenomenon of pattern recognition is powerful yet simple.

Although not perfect, the pandemonium architecture influenced the development of modern connectionist, artificial intelligence, and word recognition models.

This sort of architecture can easily account for the image constancy phenomenon because a stimulus only needs to "match" at the basic featural level, and the set of basic features is presumed to be limited and finite, and thus biologically plausible.

[3] The concept of feature demons, namely that there are specific neurons dedicated to performing specialized processing, is supported by research in neuroscience.
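To make feature-level matching concrete, the following is a minimal Python sketch of the hierarchy, not Selfridge's own formulation. The feature inventory `LETTER_FEATURES`, the scoring rule in `cognitive_demon_shout`, and the function `decision_demon` are all hypothetical names and assumptions introduced for illustration. The feature demons are represented simply by the dictionary of feature counts they would report.

```python
# Hypothetical feature inventory: each letter is described by counts of simple
# strokes. These features are illustrative assumptions, not an empirical set.
LETTER_FEATURES = {
    "P": {"vertical": 1, "closed_loop": 1},
    "R": {"vertical": 1, "closed_loop": 1, "oblique": 1},
    "A": {"oblique": 2, "horizontal": 1},
    "E": {"vertical": 1, "horizontal": 3},
    "F": {"vertical": 1, "horizontal": 2},
}


def cognitive_demon_shout(template, observed):
    """Return how loudly one cognitive demon shouts for its letter.

    Assumed scoring rule: add one point per feature instance that matches the
    template and subtract one point per missing or extra feature instance.
    """
    names = set(template) | set(observed)
    matched = sum(min(template.get(n, 0), observed.get(n, 0)) for n in names)
    mismatched = sum(abs(template.get(n, 0) - observed.get(n, 0)) for n in names)
    return matched - mismatched


def decision_demon(observed):
    """Collect every cognitive demon's shout and pick the loudest one."""
    shouts = {
        letter: cognitive_demon_shout(template, observed)
        for letter, template in LETTER_FEATURES.items()
    }
    winner = max(shouts, key=shouts.get)
    return winner, shouts


if __name__ == "__main__":
    # Feature counts the feature demons might report for some rendering of "R".
    observed = {"vertical": 1, "closed_loop": 1, "oblique": 1}
    winner, shouts = decision_demon(observed)
    print(winner, shouts)
```

Because the input is a set of feature counts rather than a pixel template, a large, small, or differently styled "R" that yields the same counts receives the same shouts, which is the sense in which matching at the featural level sidesteps the need for one stored template per variant.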

For example, the most likely error for R should be P. Thus, to show that this architecture represents the human pattern recognition system, these predictions must be put to the test.
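One way to see where such a prediction comes from is to rank letters by how many features they share. The sketch below is an illustration under stated assumptions: the feature sets in `FEATURES` are hypothetical, and Jaccard overlap is a similarity measure chosen here for simplicity, not one specified by the architecture.

```python
# Hypothetical feature sets for a handful of letters (illustrative only).
FEATURES = {
    "P": {"vertical", "closed_loop"},
    "R": {"vertical", "closed_loop", "oblique_leg"},
    "B": {"vertical", "closed_loop", "second_loop"},
    "K": {"vertical", "oblique_arm", "oblique_leg"},
    "O": {"closed_curve"},
}


def overlap(a, b):
    """Jaccard overlap: shared features divided by all features in either set."""
    return len(a & b) / len(a | b)


def predicted_confusion(letter):
    """Return the other letter whose feature set overlaps most with `letter`."""
    others = [k for k in FEATURES if k != letter]
    return max(others, key=lambda k: overlap(FEATURES[letter], FEATURES[k]))


if __name__ == "__main__":
    print(predicted_confusion("R"))
```

With these assumed feature sets, "R" shares two of three features with "P" but only two of four with "B" or "K", so "P" is the predicted confusion. A different feature inventory would yield different predictions, which is why such predictions need to be checked against human error data.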

Also as a result of these experiments, some researchers have proposed models that attempted to list all of the basic features in the Roman alphabet.

[9][10][11][12] A major criticism of the pandemonium architecture is that it adopts completely bottom-up processing: recognition is driven entirely by the physical characteristics of the target stimulus.

Thus, we might end up with a system that requires a large number of cognitive demons in order to produce accurate recognition, which would expose it to the same biological plausibility criticism leveled at template matching models.

However, it is rather difficult to judge the validity of this criticism because the pandemonium architecture does not specify which features are extracted from incoming sensory information or how they are extracted; it simply outlines the possible stages of pattern recognition.

The majority of the research that supports this architecture has relied on its ability to recognize simple schematic drawings selected from a small finite set (e.g., letters in the Roman alphabet).

Evidence from these types of experiments can lead to overgeneralized and misleading conclusions, because the recognition process for complex, three-dimensional patterns could be very different from that for simple schematics.

However, these criticisms were partly addressed when similar results were replicated with other paradigms (e.g., go/no-go and same-different tasks), supporting the claim that humans do have elementary feature detectors.

[7] Additionally, some researchers have pointed out that feature accumulation theories like the pandemonium architecture have the processing stages of pattern recognition almost backwards.

This criticism was mainly raised by advocates of the global-to-local theory, who argued and provided evidence that perception begins with a blurry view of the whole that is refined over time, implying that feature extraction does not happen in the early stages of recognition.

The pandemonium architecture has been applied to several real-world problems, such as translating hand-sent Morse code and identifying hand-printed letters.

This multiple-agent approach to human information processing became a working assumption for many modern artificial intelligence systems.

This gives the pandemonium architecture tremendous power, because it can recognize a stimulus despite changes in size, style, and other transformations without presuming an unlimited pattern memory.