Neural network

[1] Each neuron sends electrochemical signals called action potentials to its connected neighbors and receives such signals from them.

Signals generated by neural networks in the brain eventually travel through the nervous system and across neuromuscular junctions to muscle cells, where they cause contraction and thereby motion.

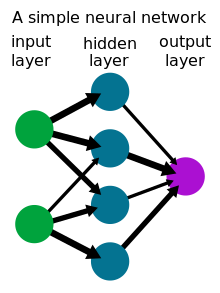

A network is trained by adjusting its connection weights to fit a preexisting dataset, typically through empirical risk minimization, with the required gradients computed by backpropagation.
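Empirical risk minimization and backpropagation work together. The empirical risk is the average loss over the training examples, and backpropagation applies the chain rule layer by layer to compute how that risk changes with each weight, so that a method such as gradient descent can step the weights downhill. The following is a minimal sketch under assumptions not taken from the text: the toy task (XOR), a two-layer network with tanh hidden units and a sigmoid output, binary cross-entropy loss, and plain full-batch gradient descent. The function `train_xor` and its parameters are hypothetical names chosen for illustration.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden: int = 4, lr: float = 0.5, epochs: int = 2000, seed: int = 0):
    """Illustrative sketch (task, architecture, loss, hyperparameters are assumptions).

    Fits a 2-`hidden`-1 network to XOR by gradient descent on the empirical risk
    (mean binary cross-entropy); gradients are computed by backpropagation.
    Returns (parameters, list of empirical risk values, one per epoch).
    """
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
    n = len(data)
    # W1[j][i] connects input i to hidden unit j; w2[j] connects hidden unit j to the output.
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    history = []
    for _ in range(epochs):
        # Gradients accumulated over the whole dataset (full-batch gradient descent).
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        risk = 0.0
        for x, y in data:
            # Forward pass.
            h = [math.tanh(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            p = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            risk += -(y * math.log(p) + (1 - y) * math.log(1 - p)) / n
            # Backward pass: for sigmoid + cross-entropy, d(loss)/d(logit) = p - y.
            d_out = (p - y) / n
            gb2 += d_out
            for j in range(hidden):
                gw2[j] += d_out * h[j]
                # Chain rule through tanh: d tanh(a)/da = 1 - tanh(a)^2.
                d_hid = d_out * w2[j] * (1 - h[j] ** 2)
                gb1[j] += d_hid
                gW1[j][0] += d_hid * x[0]
                gW1[j][1] += d_hid * x[1]
        history.append(risk)
        # Gradient-descent update: move each weight against its gradient.
        b2 -= lr * gb2
        for j in range(hidden):
            w2[j] -= lr * gw2[j]
            b1[j] -= lr * gb1[j]
            W1[j][0] -= lr * gW1[j][0]
            W1[j][1] -= lr * gW1[j][1]
    return (W1, b1, w2, b2), history


if __name__ == "__main__":
    params, history = train_xor()
    print(f"empirical risk: start {history[0]:.4f}, end {history[-1]:.4f}")
```

The risk recorded in `history` should fall over the epochs as the weights are fitted to the four training examples. Frameworks that provide automatic differentiation perform the backward pass mechanically, but writing it out by hand makes the chain-rule structure of backpropagation visible.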

The theoretical basis for contemporary neural networks was proposed independently by Alexander Bain in 1873[6] and William James in 1890.

However, starting with the artificial neuron model of Warren McCulloch and Walter Pitts in 1943,[9] followed by Frank Rosenblatt's perceptron, a simple artificial neural network, and its implementation in hardware in 1957,[3] artificial neural networks came to be used increasingly for machine learning applications instead, and grew increasingly different from their biological counterparts.