Parametric search

In the design and analysis of algorithms for combinatorial optimization, parametric search is a technique invented by Nimrod Megiddo (1983) for transforming a decision algorithm (does this optimization problem have a solution with quality better than some given threshold?) into an optimization algorithm (find the best solution).

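The basic idea of parametric search is to simulate a test algorithm that takes as input a numerical parameter X, as if it were being run with the (unknown) optimal solution value as its input. The test algorithm is assumed to behave discontinuously when X equals the optimal solution value, and to operate on X only by simple comparisons of X with other computed values, or by testing the sign of low-degree polynomial functions of X.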

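Whenever the simulation reaches a step in which the test algorithm compares its parameter X to another number Y, it cannot perform the comparison numerically, because it does not know the optimal solution value. Instead, it calls the decision algorithm with parameter Y and uses the answer to determine the outcome of the comparison. Because the test algorithm behaves discontinuously at the optimal solution value, it cannot be simulated accurately unless one of the parameter values passed to the decision algorithm is actually equal to the optimal solution value.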

When this happens, the decision algorithm can detect the equality and save the optimal solution value for later output.

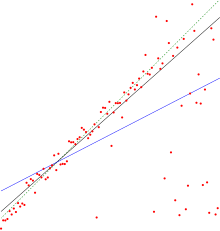

An example considered both by Megiddo (1983) and van Oostrum & Veltkamp (2002) concerns a system of an odd number of particles, all moving rightward at different constant speeds along the same line.

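The problem is to compute the time at which the median of the particles lies exactly on the origin of the line. A decision algorithm for a given time can compute the position of each particle at that time and count the particles on each side of the origin. Simulating this algorithm at the unknown optimal time requires determining, for each particle, whether it crosses the origin before or after the optimal time. This test, a comparison of the particle's crossing time with the unknown optimal time, can be performed by running the same decision algorithm with the crossing time for the particle as its parameter.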

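Megiddo remarks that this particular problem has a simple linear-time solution that does not involve parametric search: return the median of the times at which the particles cross the origin. However, he explains that parametric search often matches the best known running time or gives the fastest known algorithm for more advanced problems.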
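The particle example can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Megiddo's own formulation: it assumes the goal is the time at which the median particle reaches the origin, that each particle is a hypothetical `(x, v)` pair with integer start position `x < 0` and speed `v > 0`, and that all crossing times are distinct. The names `decide` and `parametric_search` are invented for this sketch.

```python
from fractions import Fraction
from statistics import median

def decide(particles, t):
    """Decision algorithm (assumed form): -1 if t is before the optimal
    time, +1 if t is after it, 0 if t is exactly the optimal time."""
    left = sum(1 for x, v in particles if x + v * t < 0)
    right = sum(1 for x, v in particles if x + v * t > 0)
    return (right > left) - (left > right)

def parametric_search(particles):
    """Quadratic-time search: one linear-time decide() call per particle,
    each using that particle's crossing time as the parameter."""
    optimum = None
    for x, v in particles:
        crossing = Fraction(-x, v)  # time at which this particle is at 0
        if decide(particles, crossing) == 0:
            optimum = crossing      # equality detected: save the optimum
    return optimum

# Five particles with distinct speeds, all starting left of the origin.
particles = [(-6, 1), (-4, 2), (-9, 3), (-20, 5), (-1, 4)]
t_opt = parametric_search(particles)

# The direct alternative: the median of the crossing times.
t_median = median(Fraction(-x, v) for x, v in particles)
```

Exact rational arithmetic (`Fraction`) is used so that the equality test in `decide` is reliable; with floating-point positions the case in which the median particle sits exactly on the origin could be missed.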

As Megiddo (1983) already observed, the parametric search technique can be substantially sped up by replacing the simulated test algorithm by an efficient parallel algorithm, for instance in the parallel random-access machine (PRAM) model of parallel computation, where a collection of processors operate in synchrony on a shared memory, all performing the same sequence of operations on different memory addresses.

By finding a median or near-median value in the set of comparisons that need to be evaluated, and passing this single value to the decision algorithm, it is possible to eliminate half or nearly half of the comparisons with only a single call of the decision algorithm.
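A minimal sketch of this halving step follows, assuming a hypothetical oracle `decide(y)` that returns the sign of `y` minus the unknown optimum. For brevity it sorts the batch once instead of using linear-time median selection, so it illustrates the number of oracle calls rather than the total running time.

```python
def resolve_batch(values, decide):
    """Resolve every comparison in one batch against the unknown optimum.

    Returns (outcome, calls), where outcome[v] is the sign of
    (v - optimum) and calls is the number of decide() invocations.
    """
    outcome = {}
    unresolved = sorted(set(values))
    calls = 0
    while unresolved:
        mid = len(unresolved) // 2
        y = unresolved[mid]          # median of the unresolved values
        s = decide(y)
        calls += 1
        if s == 0:
            # y is the optimum, so every remaining comparison is settled.
            for v in unresolved:
                outcome[v] = (v > y) - (v < y)
            unresolved = []
        elif s < 0:
            # y is below the optimum, and so is everything up to y.
            for v in unresolved[:mid + 1]:
                outcome[v] = -1
            unresolved = unresolved[mid + 1:]
        else:
            # y is above the optimum, and so is everything from y upward.
            for v in unresolved[mid:]:
                outcome[v] = 1
            unresolved = unresolved[:mid]
    return outcome, calls

# Hypothetical oracle whose hidden optimum is 6.5.
def decide(y):
    return (y > 6.5) - (y < 6.5)

batch = [1, 9, 7, 3, 12, 5, 8]
outcome, calls = resolve_batch(batch, decide)
```

Each call settles at least half of the remaining comparisons, so a batch of n values needs only about log2(n) calls instead of n.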

When the test algorithm is a parallel sorting algorithm, the best choice for it (according to its theoretical analysis, if not in practice) is the sorting network of Ajtai, Komlós, and Szemerédi (1983).

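Cole (1987) observes that the simulated processors need not be kept synchronized with each other: some of them can progress farther through the sorting algorithm while others wait for the results of their comparisons. Based on this principle, Cole modifies the simulation of the sorting algorithm, so that it maintains a collection of unresolved simulated comparisons that may not all come from the same parallel time step of the test algorithm.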

As in the synchronized parallel version of parametric search, it is possible to resolve half of these comparisons by finding the median comparison value and calling the decision algorithm on this value.

Instead of using the AKS sorting network, it is also possible to combine this technique with a parallel merge sort algorithm of Cole (1988), resulting in time bounds with smaller constant factors that, however, are still not small enough to be practical.

Goodrich & Pszona (2013) combine Cole's theoretical improvement with the practical speedups of van Oostrum & Veltkamp (2002).

As in Cole's technique, they use a desynchronized parametric search, in which each separate thread of execution of the simulated parallel sorting algorithm is allowed to progress until it needs to determine the result of another comparison, and in which the number of unresolved comparisons is halved by finding the median comparison value and calling the decision algorithm with that value.

As they show, the resulting randomized parametric search algorithm makes only a logarithmic number of calls to the decision algorithm with high probability, matching Cole's theoretical analysis.

An extended version of their paper includes experimental results from an implementation of the algorithm, which show that the total running time of this method on several natural geometric optimization problems is similar to that of the best synchronized parametric search implementation (the quicksort-based method of van Oostrum and Veltkamp): a little faster on some problems and a little slower on some others.

However, the number of calls that it makes to the decision algorithm is significantly smaller, so this method would obtain greater benefits in applications of parametric searching where the decision algorithm is slower.

In the case of Goodrich and Pszona, the algorithm is randomized, and obtains this time bound with high probability.

The bisection method (binary search) can also be used to transform decision into optimization. In this method, one maintains an interval of numbers, known to contain the optimal solution value, and repeatedly shrinks the interval by calling the decision algorithm on its median value and keeping only the half-interval above or below the median, depending on the outcome of the call.

Although this method can only find a numerical approximation to the optimal solution value, it does so in a number of calls to the decision algorithm proportional to the number of bits of precision required of this approximation, resulting in weakly polynomial algorithms.
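The bisection approach can be sketched as follows. The oracle `decide(y)` (returning the sign of `y` minus the optimum) and the hidden optimum 0.3 are assumptions made only for this illustration.

```python
def bisect_optimum(decide, lo, hi, bits):
    """Shrink [lo, hi], assumed to contain the optimum, by halving it
    `bits` times; each decide() call gains one bit of precision."""
    for _ in range(bits):
        mid = (lo + hi) / 2
        if decide(mid) < 0:
            lo = mid   # mid is below the optimum: keep the upper half
        else:
            hi = mid   # mid is at or above the optimum: keep the lower half
    return lo, hi

# Hypothetical oracle whose hidden optimum is 0.3.
def decide(y):
    return (y > 0.3) - (y < 0.3)

lo, hi = bisect_optimum(decide, 0.0, 1.0, bits=20)
```

After 20 calls the interval has width 2^-20 but still only brackets the optimum. The number of calls depends on the precision requested, not only on the input size, which is why the resulting algorithms are weakly polynomial.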

In contrast, when applicable, parametric search finds strongly polynomial algorithms, whose running time is a function only of the input size and is independent of numerical precision.

However, parametric search leads to an increase in time complexity (compared to the decision algorithm) that may be larger than logarithmic.

Because they are strongly rather than weakly polynomial, algorithms based on parametric search are more satisfactory from a theoretical point of view.

Nevertheless, van Oostrum & Veltkamp (2002) write that "while a simple binary-search approach is often advocated as a practical replacement for parametric search, it is outperformed by our [parametric search] algorithm" in the experimental comparisons that they performed.

When only expected performance is of concern, a randomized optimization technique (Chan 1998) can be used in place of parametric search.

This method only incurs a constant factor increase in time complexity while still giving a strongly polynomial algorithm.

Parametric search has been applied in the development of efficient algorithms for optimization problems, particularly in computational geometry (Agarwal, Sharir & Toledo 1994).