Plotting algorithms for the Mandelbrot set

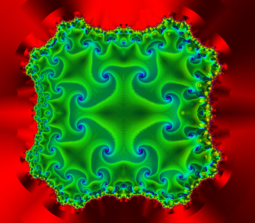

In both the unoptimized and optimized escape time algorithms, the x and y locations of each point are used as starting values in a repeating, or iterating calculation (described in detail below).

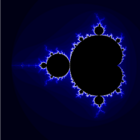

For starting values very close to but not in the set, it may take hundreds or thousands of iterations to escape.

More computationally intensive rendering variations include the Buddhabrot method, which finds escaping points and plots their iterated coordinates.

Otherwise, we keep iterating up to a fixed number of steps, after which we decide that our parameter is "probably" in the Mandelbrot set, or at least very close to it, and color the pixel black.
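The escape time loop described above can be sketched in Python as follows. This is a minimal sketch rather than a reference implementation: the function name `escape_time`, the default `max_iter`, and the bailout test `x*x + y*y <= 4` (a radius of 2) are assumptions chosen for illustration.

```python
def escape_time(x0, y0, max_iter=1000):
    """Return the number of iterations before the orbit of (x0, y0) escapes.

    A return value equal to max_iter means the point never escaped and is
    treated as "probably" inside the set.
    """
    x = 0.0
    y = 0.0
    iteration = 0
    # Iterate z -> z^2 + c using real arithmetic; stop once |z| > 2.
    while x * x + y * y <= 4.0 and iteration < max_iter:
        x, y = x * x - y * y + x0, 2.0 * x * y + y0
        iteration += 1
    return iteration
```

A pixel whose count equals `max_iter` would be colored black; any other count can be used to pick a color.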

One practical way to choose colors, without slowing down calculations, is to use the number of executed iterations as an index into a palette initialized at startup.
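A palette lookup of this kind might look like the sketch below. The gradient in `build_palette`, the palette size of 256, and the wrap-around with `%` are all illustrative assumptions; any table of colors built once at startup would serve.

```python
def build_palette(size=256):
    """Build a list of RGB tuples once, at startup (arbitrary gradient)."""
    palette = []
    for k in range(size):
        t = k / (size - 1)
        palette.append((int(255 * t), int(255 * t * t), int(255 * (1 - t))))
    return palette


PALETTE = build_palette()


def color_for(iterations, max_iter):
    """Map an iteration count to a color with a single table lookup."""
    if iterations >= max_iter:
        return (0, 0, 0)  # point did not escape: color it black
    return PALETTE[iterations % len(PALETTE)]
```

Because the per-pixel work is a single index operation, the coloring adds essentially nothing to the cost of the iteration loop.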

It is common to check the magnitude of z after every iteration, but there is another method that can converge faster and reveal structure within Julia sets.

This method will equally distribute colors to the same overall area, and, importantly, is independent of the maximum number of iterations chosen.

The first step of the second pass is to create an array of size n, which is the maximum iteration count: NumIterationsPerPixel.

Each array index is an iteration count, and the value stored at that index is the number of pixels that reached that iteration count before bailout.
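The second pass can be sketched as follows, assuming the first pass has already produced a 2D list `iteration_counts` of per-pixel counts. The function name `histogram_hues`, the choice to give non-escaping pixels a hue of 1.0, and the use of a running sum instead of a nested loop are assumptions of this sketch.

```python
def histogram_hues(iteration_counts, max_iter):
    """Return a 2D list of hues in [0, 1] using histogram equalization."""
    # Histogram: index = iteration count, value = number of pixels.
    # One extra slot holds pixels that never escaped (count == max_iter).
    num_iterations_per_pixel = [0] * (max_iter + 1)
    for row in iteration_counts:
        for i in row:
            num_iterations_per_pixel[i] += 1

    # Only escaping pixels contribute to the total.
    total = sum(num_iterations_per_pixel[:max_iter])

    # cumulative[i] = fraction of escaping pixels with count <= i.
    cumulative = []
    running = 0
    for i in range(max_iter):
        running += num_iterations_per_pixel[i]
        cumulative.append(running / total if total else 0.0)

    return [
        [cumulative[i] if i < max_iter else 1.0 for i in row]
        for row in iteration_counts
    ]
```

The resulting hue of a pixel depends only on how many pixels escaped no later than it did, which is why the color distribution does not change when `max_iter` is raised.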

However, it creates bands of color, which, as a type of aliasing, can detract from an image's aesthetic value.

This function is given by

$$\phi(z) = \lim_{n \to \infty} \frac{\log |z_n|}{P^n},$$

where $z_n$ is the value after $n$ iterations and $P$ is the power to which $z$ is raised in the Mandelbrot set equation ($z_{n+1} = z_n^P + c$; $P$ is generally 2).
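One common way to turn this potential into a fractional iteration count is sketched below. The specific expression `n + 1 - log_P(log|z_n| / log N)`, the bailout radius `N = 256`, and the function name are assumptions of this sketch; a large bailout radius is used because the approximation improves as $|z_n|$ grows.

```python
import math


def smooth_iteration_count(c, max_iter=100, bailout=256.0, power=2):
    """Return a real-valued iteration count that varies smoothly with c."""
    z = 0j
    for n in range(max_iter):
        if abs(z) > bailout:
            # z is z_n here. The correction term lies between 0 and about 1,
            # so the result interpolates between neighboring integer counts.
            log_ratio = math.log(abs(z)) / math.log(bailout)
            return n + 1 - math.log(log_ratio) / math.log(power)
        z = z ** power + c
    return float(max_iter)
```

Feeding this value, rather than the integer count, into a color gradient removes the visible bands.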

HSV coloring can be accomplished by mapping the iteration count from [0, max_iter) to [0, 360), raising it to the power of 1.5, and then taking the result modulo 360.

We can then simply use the exponentially mapped iteration count as the value and return the result. This method applies to HSL as well, except that we pass a saturation of 50% instead.
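A sketch of the HSV mapping using Python's standard `colorsys` module is shown below. The text leaves the exact value mapping open, so this sketch assumes full saturation and uses the normalized iteration count as the value channel; the function name `hsv_color` and the 8-bit RGB output are also assumptions.

```python
import colorsys


def hsv_color(i, max_iter):
    """Map an iteration count to an 8-bit RGB tuple via HSV."""
    if i >= max_iter:
        return (0, 0, 0)  # non-escaping point
    t = i / max_iter
    # Scale to [0, 360), raise to the power 1.5, then wrap around the wheel.
    hue_degrees = ((t * 360.0) ** 1.5) % 360.0
    # colorsys expects hue, saturation and value in [0, 1].
    r, g, b = colorsys.hsv_to_rgb(hue_degrees / 360.0, 1.0, t)
    return (round(255 * r), round(255 * g), round(255 * b))
```

For the HSL variant, `colorsys.hls_to_rgb` takes its arguments in the order hue, lightness, saturation, so the 50% saturation would be passed as the third argument.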

One of the most perceptually uniform coloring methods involves passing the processed iteration count into LCH.

If we use the exponentially mapped and cyclic method above, we can feed its result into the luma and chroma channels.

This can be achieved with a little trick based on the change in in-gamut colors relative to luma and chroma.

[4][5] The proof of the connectedness of the Mandelbrot set in fact gives a formula for the uniformizing map of the complement of the Mandelbrot set.

By the Koebe quarter theorem, one can then estimate the distance between the midpoint of our pixel and the Mandelbrot set up to a factor of 4.

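In other words, provided that the maximal number of iterations is sufficiently high, one obtains a picture of the Mandelbrot set with the following properties:

1. Every pixel that contains a point of the Mandelbrot set is colored black.
2. Every pixel that is colored black is close to the Mandelbrot set.

The upper bound $b$ for the distance estimate of a pixel $c$ (a complex number) from the Mandelbrot set is given by[6][7][8]

$$b = \lim_{n \to \infty} 2 \cdot \frac{|P_c^n(c)| \cdot \ln |P_c^n(c)|}{\left| \frac{\partial}{\partial c} P_c^n(c) \right|},$$

where

- $P_c(z) = z^2 + c$ is the complex quadratic polynomial;
- $P_c^n(c)$ is the result of $n$ iterations of $P_c$ starting from $z = c$, so that $P_c^0(c) = c$ and $P_c^{n+1}(c) = P_c^n(c)^2 + c$;
- $\frac{\partial}{\partial c} P_c^n(c)$ is the derivative of $P_c^n(c)$ with respect to $c$, which can be computed by starting with $\frac{\partial}{\partial c} P_c^0(c) = 1$ and then applying $\frac{\partial}{\partial c} P_c^{n+1}(c) = 2 P_c^n(c) \frac{\partial}{\partial c} P_c^n(c) + 1$.

The idea behind this formula is simple: when the equipotential lines for the potential function $\phi(z)$ lie close together, the number $|\phi'(z)|$ is large, and conversely, so the equipotential lines for the function $\phi(z)/|\phi'(z)|$ should lie approximately regularly.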

From a mathematician's point of view, this formula only works in the limit as n goes to infinity, but very reasonable estimates can be found with just a few additional iterations after the main loop exits.
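The exterior distance estimate can be sketched by iterating the orbit and its derivative with respect to c together, then evaluating 2·|z|·ln|z| / |dz/dc| once the orbit is far from the origin. The function name `distance_estimate`, the large bailout radius standing in for the "few additional iterations", and the convention of returning 0.0 for non-escaping points are assumptions of this sketch.

```python
import math


def distance_estimate(c, max_iter=500, bailout=1e6):
    """Estimate an upper bound on the distance from c to the Mandelbrot set."""
    z = c          # orbit value, starting from z = c
    dz = 1 + 0j    # derivative of the orbit value with respect to c
    for _ in range(max_iter):
        if abs(z) > bailout:
            modulus = abs(z)
            return 2.0 * modulus * math.log(modulus) / abs(dz)
        # Update the derivative first, because it needs the old z.
        dz = 2.0 * z * dz + 1.0
        z = z * z + c
    return 0.0  # never escaped: treat the point as inside the set
```

A pixel can then be colored black whenever the returned value is smaller than some fraction of the pixel width, which makes thin filaments of the set visible.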

For example, the while loop of the escape time pseudocode can be modified to store away a new x and y value on every 20th iteration, so that it can detect periods that are up to 20 points long.
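Periodicity checking of this kind might be sketched as follows. The function name, the exact-equality comparison (an implementation might prefer a small tolerance), and the second return value reporting how many steps were actually run are assumptions made for illustration.

```python
def escape_time_with_period_check(c, max_iter=1000, check_interval=20):
    """Return (iteration_count, steps_run) with early exit on a repeated value."""
    z = 0j
    z_old = 0j   # remembered orbit value to compare against
    period = 0   # steps since z_old was last refreshed
    for step in range(1, max_iter + 1):
        z = z * z + c
        if abs(z) > 2.0:
            return step, step
        if z == z_old:
            # The orbit has returned to a stored value, so it cycles forever.
            return max_iter, step
        period += 1
        if period > check_interval:
            period = 0
            z_old = z   # store a new comparison value
    return max_iter, max_iter
```

For a point such as c = -1, whose orbit alternates between -1 and 0, the check fires after two steps instead of running all `max_iter` iterations.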

The Mandelbrot set is symmetric about the real axis, so when the real axis is present in the final image, portions of the rendering process can be skipped.
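As a sketch of how the symmetry can be exploited, the function below computes only the upper half of the image and mirrors each row. It assumes the simplest case, a view that is exactly symmetric about the real axis (imaginary range from `-y_max` to `y_max`); the function names and the grid layout are illustrative assumptions.

```python
def escape_time(c, max_iter):
    """Plain escape time count for a complex parameter c."""
    z = 0j
    for n in range(max_iter):
        z = z * z + c
        if abs(z) > 2.0:
            return n + 1
    return max_iter


def render_symmetric(width, height, x_min, x_max, y_max, max_iter=100):
    """Render rows for y in [-y_max, y_max], computing only the top half."""
    rows = [None] * height
    for r in range((height + 1) // 2):
        y = y_max - 2.0 * y_max * r / (height - 1)
        row = [
            escape_time(complex(x_min + (x_max - x_min) * k / (width - 1), y),
                        max_iter)
            for k in range(width)
        ]
        rows[r] = row
        # The count for the conjugate point is identical, so mirror the row.
        rows[height - 1 - r] = list(row)
    return rows
```

When the real axis is off-center in the view, only the overlapping band of rows can be mirrored and the remaining rows must still be computed directly.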

Escape-time rendering of Mandelbrot and Julia sets lends itself extremely well to parallel processing.
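Because every pixel is computed independently, rows can be handed to separate workers with no coordination. The sketch below uses `concurrent.futures.ThreadPoolExecutor` from the standard library purely to show the structure; in CPython, threads do not speed up pure-Python arithmetic, so a process pool or a compiled kernel would be an assumption-dependent substitute for real gains. All function names here are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def escape_time(c, max_iter):
    """Plain escape time count for a complex parameter c."""
    z = 0j
    for n in range(max_iter):
        z = z * z + c
        if abs(z) > 2.0:
            return n + 1
    return max_iter


def render_row(y, xs, max_iter):
    """Compute one image row; rows share no state."""
    return [escape_time(complex(x, y), max_iter) for x in xs]


def render_parallel(xs, ys, max_iter=200, workers=4):
    """Distribute rows across a pool of workers, preserving row order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda y: render_row(y, xs, max_iter), ys))
```

`pool.map` returns results in the order of `ys`, so the output can be used directly as an image regardless of which worker finished first.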


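Given

$$z_{n+1} = z_n^2 + c$$

as the iteration, and a small $\epsilon$ and $\delta$, it is the case that

$$(z_n + \epsilon)^2 + (c + \delta) = z_n^2 + 2 z_n \epsilon + \epsilon^2 + c + \delta,$$

or

$$(z_n + \epsilon)^2 + (c + \delta) = z_{n+1} + 2 z_n \epsilon + \epsilon^2 + \delta,$$

so if one defines

$$\epsilon_{n+1} = 2 z_n \epsilon_n + \epsilon_n^2 + \delta,$$

one can calculate a single point (e.g. the center of an image) using high-precision arithmetic ($z$), giving a reference orbit, and then compute many points around it in terms of various initial offsets $\delta$ plus the above iteration for $\epsilon$, where $\epsilon_0$ is set to 0.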

For most iterations, epsilon does not need more than 16 significant figures, and consequently hardware floating-point may be used to get a mostly accurate image.
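The structure of a perturbation renderer can be sketched as below, using the recurrence ε(n+1) = 2·z(n)·ε(n) + ε(n)² + δ with ε(0) = 0. To stay self-contained, this sketch computes the reference orbit with ordinary Python complex numbers as a stand-in for high-precision arithmetic, and it assumes the reference point does not escape; the function names are hypothetical.

```python
def reference_orbit(c0, max_iter):
    """Orbit z_0, z_1, ... of the reference point c0.

    Assumption: a real renderer would compute this with arbitrary-precision
    arithmetic; plain complex floats are used here only for illustration.
    """
    orbit = [0j]
    z = 0j
    for _ in range(max_iter):
        z = z * z + c0
        orbit.append(z)
    return orbit


def perturbed_escape_time(orbit, delta, max_iter):
    """Escape time of c0 + delta using only low-precision offsets."""
    eps = 0j  # epsilon_0 = 0
    for n in range(min(max_iter, len(orbit) - 1)):
        eps = 2.0 * orbit[n] * eps + eps * eps + delta
        # The full orbit value of the nearby point is z_{n+1} + eps_{n+1}.
        if abs(orbit[n + 1] + eps) > 2.0:
            return n + 1
    return max_iter
```

The reference orbit is computed once per image, while `perturbed_escape_time` is called once per pixel with that pixel's offset `delta` from the reference point.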

Further, it is possible to approximate the starting values for the low-precision points with a truncated Taylor series, which often enables a significant number of iterations to be skipped.

[18] Renderers implementing these techniques are publicly available and offer speedups of around two orders of magnitude for highly magnified images.

series, then there exists a relation between how many iterations can be achieved in the time it takes to use BigNum software to compute a given

If the difference between the bounds is greater than the number of iterations, it is possible to perform binary search using BigNum software, successively halving the gap until it becomes more time efficient to find the escape value using floating point hardware.