Probabilistic classification

"Hard" classification can then be done using the optimal decision rule[2]: 39–40 $\hat{y} = \operatorname{arg\,max}_{y} \Pr(Y = y \mid X)$, or, in English, the predicted class is the one with the highest probability.
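A minimal sketch of the argmax decision rule in Python. It assumes the classifier's output for a single input is already available as a dict mapping class labels to probabilities. The function `hard_classify` and the example labels are hypothetical. When the probabilities are correct, this rule minimizes the expected misclassification rate (zero-one loss).

```python
def hard_classify(probs):
    """Apply the argmax decision rule to a dict mapping class -> Pr(Y = y | X)."""
    if not probs:
        raise ValueError("need at least one class")
    # Pick the key with the largest probability; on ties, max() returns the
    # label that comes first in the dict.
    return max(probs, key=probs.get)


# Hypothetical predicted distribution for one input x.
posterior = {"cat": 0.2, "dog": 0.7, "bird": 0.1}
print(hard_classify(posterior))  # dog
```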

Some classification models, such as naive Bayes, logistic regression and multilayer perceptrons (when trained under an appropriate loss function), are naturally probabilistic.

Other models, such as support vector machines, are not, but methods exist to turn them into probabilistic classifiers.[2]: 43

Not all classification models are naturally probabilistic, and some that are, notably naive Bayes classifiers, decision trees and boosting methods, produce distorted class probability distributions.[3]

In the case of decision trees, where $\Pr(y \mid x)$ is the proportion of training samples with label $y$ in the leaf where $x$ ends up, these distortions arise because learning algorithms such as C4.5 or CART explicitly aim to produce homogeneous leaves (giving probabilities close to zero or one, and thus high bias) while using few samples to estimate the relevant proportion (high variance).
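The leaf-proportion estimate described above can be sketched in a few lines of Python. This covers only the probability estimate inside one leaf, not C4.5 or CART tree growing. The function name and the "spam"/"ham" labels are hypothetical. The example shows both effects from the text: a small pure leaf yields an extreme estimate, and one extra sample moves it substantially.

```python
from collections import Counter


def leaf_probabilities(leaf_labels):
    """Estimate Pr(y | x) for any x reaching this leaf as the fraction of the
    leaf's training samples that carry label y."""
    if not leaf_labels:
        raise ValueError("a leaf must contain at least one training sample")
    counts = Counter(leaf_labels)
    n = len(leaf_labels)
    return {label: count / n for label, count in counts.items()}


# A small, pure leaf: the estimate is exactly 1.0 for "spam" (and implicitly
# 0.0 for any class not present), as extreme as a probability can be.
small_leaf = ["spam", "spam", "spam"]
print(leaf_probabilities(small_leaf))  # {'spam': 1.0}

# With so few samples, a single extra sample shifts the estimate by 0.25.
print(leaf_probabilities(small_leaf + ["ham"]))  # {'spam': 0.75, 'ham': 0.25}
```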

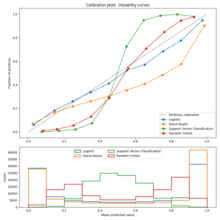

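Calibration can be assessed using a calibration plot, also called a reliability diagram.[3][5] A calibration plot shows the proportion of items in each class for bands of predicted probability or score (such as a distorted probability distribution or the "signed distance to the hyperplane" in a support vector machine). Deviations from the diagonal (the identity line) indicate a poorly calibrated classifier, whose predicted probabilities or scores cannot be used directly as probabilities.

In this case, a calibration method can be used to turn these scores into properly calibrated class membership probabilities.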
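The points of a binary calibration plot can be computed as sketched below. This version assumes equal-width probability bands, binary 0/1 labels, and scores already in [0, 1]. The function name and the toy data are hypothetical. Plotting observed frequency against mean predicted probability for each band gives the reliability diagram. scikit-learn offers a comparable helper, `sklearn.calibration.calibration_curve`.

```python
def calibration_curve(y_true, y_prob, n_bins=5):
    """Group predictions into equal-width probability bands and return, for
    each non-empty band, (mean predicted probability, observed fraction of
    positives, number of samples)."""
    if len(y_true) != len(y_prob):
        raise ValueError("y_true and y_prob must have the same length")
    bins = [[] for _ in range(n_bins)]
    for label, p in zip(y_true, y_prob):
        # p == 1.0 would map to index n_bins, so clamp it into the last band.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((label, p))
    curve = []
    for members in bins:
        if not members:
            continue
        mean_pred = sum(p for _, p in members) / len(members)
        frac_pos = sum(label for label, _ in members) / len(members)
        curve.append((mean_pred, frac_pos, len(members)))
    return curve


# Hypothetical labels and predicted Pr(y = 1) for eight items.
y_true = [0, 0, 0, 1, 1, 0, 1, 1]
y_prob = [0.05, 0.15, 0.35, 0.5, 0.7, 0.75, 0.85, 0.95]
for mean_pred, frac_pos, count in calibration_curve(y_true, y_prob, n_bins=5):
    print(f"predicted {mean_pred:.2f} -> observed {frac_pos:.2f} (n={count})")
```

For a well-calibrated classifier the printed pairs would lie close to each other, that is, near the diagonal of the plot.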

For the binary case, a common approach is to apply Platt scaling, which learns a logistic regression model on the scores.
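A minimal sketch of Platt-style scaling in pure Python. It fits $\Pr(y = 1 \mid s) = \sigma(a s + b)$ to (score, label) pairs by batch gradient descent on the log loss. Platt's original procedure differs in several details: it writes the sigmoid with the opposite sign, smooths the 0/1 targets, and uses a more careful optimizer. The names `fit_platt` and `calibrated_prob`, the learning rate, the iteration count, and the SVM-like scores are all illustrative assumptions. The scores should come from data not used to train the underlying classifier, otherwise the fitted sigmoid tends to be overconfident.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_platt(scores, labels, lr=0.1, n_iter=5000):
    """Fit Pr(y = 1 | s) = sigmoid(a * s + b) to held-out (score, label) pairs
    by batch gradient descent on the mean log loss; returns (a, b)."""
    a, b = 0.0, 0.0
    n = len(scores)
    for _ in range(n_iter):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            err = sigmoid(a * s + b) - y  # derivative of log loss w.r.t. a*s + b
            grad_a += err * s
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


# Hypothetical held-out SVM decision values (signed distances) and labels.
scores = [-2.0, -1.5, -0.5, 0.2, -0.3, 0.4, 1.0, 1.8]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
a, b = fit_platt(scores, labels)


def calibrated_prob(s):
    """Map a raw score to a calibrated Pr(y = 1) with the fitted sigmoid."""
    return sigmoid(a * s + b)


for s in (-2.0, 0.0, 1.8):
    print(f"score {s:+.1f} -> Pr(y=1) = {calibrated_prob(s):.2f}")
```

In practice, scikit-learn's `CalibratedClassifierCV(method="sigmoid")` provides a maintained sigmoid calibration of this kind.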

Commonly used evaluation metrics that compare the predicted probability to observed outcomes include log loss, Brier score, and a variety of calibration errors.[8]
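For binary outcomes, the first two metrics can be sketched as follows. Both assume labels in {0, 1} and predicted $\Pr(y = 1)$ in [0, 1]. Lower is better for both. The clipping constant `eps` is an illustrative choice to avoid `log(0)`, and the toy data are hypothetical. scikit-learn provides `sklearn.metrics.log_loss` and `sklearn.metrics.brier_score_loss` for real use.

```python
import math


def log_loss(y_true, y_prob, eps=1e-15):
    """Mean negative log-likelihood of binary labels under predicted Pr(y = 1)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted Pr(y = 1) and the 0/1 outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


y_true = [1, 0, 1, 1]
y_prob = [0.9, 0.2, 0.6, 0.4]
print(f"log loss    = {log_loss(y_true, y_prob):.4f}")
print(f"Brier score = {brier_score(y_true, y_prob):.4f}")
```

Log loss penalizes confident mistakes without bound, while the Brier score is bounded in [0, 1]. This is one reason the two metrics can rank classifiers differently.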

Calibration error metrics aim to quantify the extent to which a probabilistic classifier's outputs are well calibrated.

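A commonly used example is the Expected Calibration Error (ECE), which bins predictions by predicted probability and averages the gap between mean predicted probability and observed frequency across bins, weighting each bin by its share of samples.[10] More recent works propose variants of ECE that address limitations that may arise when classifier scores concentrate on a narrow subset of [0, 1], including the Adaptive Calibration Error (ACE)[11] and the Test-based Calibration Error (TCE).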
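A binary sketch showing why concentrated scores are a problem for ECE. `ece_equal_width` computes one common binary form of ECE on the predicted positive-class probability. The multiclass version usually bins top-label confidence against accuracy. `ce_equal_mass` instead splits the sorted predictions into bins of (nearly) equal size, which is the adaptive-binning idea behind ACE. It is a simplified illustration, not the exact ACE formula from the cited work. TCE is not sketched. All function names and data are hypothetical.

```python
def _binned_error(groups, n_total):
    """Weighted mean over non-empty bins of |observed rate - mean predicted p|."""
    err = 0.0
    for g in groups:
        if not g:
            continue
        mean_p = sum(p for p, _ in g) / len(g)
        rate = sum(y for _, y in g) / len(g)
        err += len(g) / n_total * abs(rate - mean_p)
    return err


def ece_equal_width(y_true, y_prob, n_bins=10):
    """Binary ECE with equal-width bins on [0, 1]."""
    groups = [[] for _ in range(n_bins)]
    for p, y in zip(y_prob, y_true):
        groups[min(int(p * n_bins), n_bins - 1)].append((p, y))
    return _binned_error(groups, len(y_true))


def ce_equal_mass(y_true, y_prob, n_bins=10):
    """Calibration error with equal-mass (adaptive) bins: sort by predicted
    probability and split into n_bins chunks of (nearly) equal size."""
    pairs = sorted(zip(y_prob, y_true))
    n = len(pairs)
    groups = [pairs[i * n // n_bins:(i + 1) * n // n_bins] for i in range(n_bins)]
    return _binned_error(groups, n)


# All scores fall in [0.7, 0.8): ten equal-width bins put them in one bin,
# where over- and under-confidence cancel out.
y_prob = [0.71, 0.73, 0.77, 0.79]
y_true = [1, 1, 0, 1]
print(f"equal-width ECE (10 bins): {ece_equal_width(y_true, y_prob, n_bins=10):.3f}")
print(f"equal-mass CE (2 bins):    {ce_equal_mass(y_true, y_prob, n_bins=2):.3f}")
```

Here the equal-width estimate is essentially zero, while the equal-mass version reports a gap of about 0.28 in each half of the data.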