Boosting (machine learning)

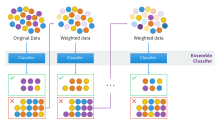

In machine learning (ML), boosting is an ensemble metaheuristic used primarily to reduce bias (as opposed to variance).

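Robert Schapire's affirmative answer, in a 1990 paper,[5] to Kearns and Valiant's question of whether a set of weak learners can be combined into a single strong learner has had significant ramifications in machine learning and statistics, most notably leading to the development of boosting.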

Freund and Schapire's arcing (Adaptive Resampling and Combining),[7] as a general technique, is more or less synonymous with boosting.

Schapire and Freund then developed AdaBoost, an adaptive boosting algorithm for which they received the Gödel Prize.
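For reference, the standard formulation of discrete AdaBoost for binary labels can be written as below. The notation is introduced here for illustration and is not taken from the text: $n$ training points $x_i$ with labels $y_i \in \{-1,+1\}$, point weights $w_{t,i}$ in round $t$, a weak hypothesis $h_t(x) \in \{-1,+1\}$ with weighted error $\varepsilon_t$, its vote $\alpha_t$, and a normalizer $Z_t$ that makes the new weights sum to one.

$$
\begin{aligned}
w_{1,i} &= \tfrac{1}{n}, \\
\varepsilon_t &= \sum_{i=1}^{n} w_{t,i}\,\mathbb{1}\left[h_t(x_i) \neq y_i\right], \\
\alpha_t &= \tfrac{1}{2}\ln\frac{1-\varepsilon_t}{\varepsilon_t}, \\
w_{t+1,i} &= \frac{w_{t,i}\,\exp\left(-\alpha_t\, y_i\, h_t(x_i)\right)}{Z_t}, \\
H(x) &= \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t\, h_t(x)\right).
\end{aligned}
$$

When $\varepsilon_t < \tfrac{1}{2}$, $\alpha_t > 0$, so a misclassified point ($y_i h_t(x_i) = -1$) has its weight multiplied by $e^{\alpha_t} > 1$ and a correctly classified one by $e^{-\alpha_t} < 1$ before renormalization; hypotheses with lower error receive larger votes in $H$.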

[9] Boosting algorithms differ mainly in how they weight training data points and hypotheses.
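As a minimal sketch of how two algorithms can weight the same data differently, the code below compares the exponential loss that AdaBoost minimizes with a logistic loss of the kind LogitBoost is derived from (up to scaling of the margin). It assumes, as in gradient-boosting formulations, that a point's weight is the negative derivative of the loss with respect to its margin $m = y\,F(x)$; LogitBoost's own Newton-step weights differ, so this illustrates the two losses rather than either algorithm's exact update. The function names and margins are hypothetical.

```python
import math
from typing import List


def exponential_weight(margin: float) -> float:
    # Negative derivative of the exponential loss exp(-m); grows without bound as m -> -inf.
    return math.exp(-margin)


def logistic_weight(margin: float) -> float:
    # Negative derivative of the logistic loss log(1 + exp(-m)), i.e. 1 / (1 + exp(m)).
    # Two branches keep math.exp from overflowing for large |m|; the result stays in [0, 1].
    if margin >= 0:
        e = math.exp(-margin)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(margin))


def normalized(weights: List[float]) -> List[float]:
    # Rescale so the weights sum to one, as boosting does between rounds.
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    # Margins of five hypothetical training points; the first is badly misclassified.
    margins = [-4.0, -0.5, 0.5, 2.0, 3.0]
    exp_share = normalized([exponential_weight(m) for m in margins])
    log_share = normalized([logistic_weight(m) for m in margins])
    for m, a, b in zip(margins, exp_share, log_share):
        print(f"margin {m:+.1f}: exponential {a:.3f}  logistic {b:.3f}")
```

With these margins the exponential loss puts about 96% of the normalized weight on the badly misclassified point, while the logistic loss gives it about 46%, because the logistic weight is capped at 1 whereas the exponential weight is not.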

AdaBoost is often the basis of introductory coverage of boosting in university machine learning courses.

Simple classifiers built on individual image features of an object tend to perform weakly at categorization.

There are many ways to represent a category of objects, for example through shape analysis, bag-of-words models, or local descriptors such as SIFT.

Object categorization is challenging because of high intra-class variability and the need to generalize across variations of objects within the same category.

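The general algorithm is as follows:

1. Form a large set of simple features.
2. Initialize weights for the training images.
3. For T rounds:
   1. Normalize the weights.
   2. For each available feature in the set, train a classifier using that single feature and evaluate its training error.
   3. Choose the classifier with the lowest error.
   4. Update the weights of the training images: increase the weight of an image this classifier misclassified, and decrease it for an image classified correctly.
4. Form the final strong classifier as a linear combination of the T classifiers, giving a larger coefficient to a classifier with smaller training error.

After boosting, a classifier constructed from 200 features could yield a 95% detection rate under a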
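A boosting loop that selects one feature per round can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: each training image is assumed to be already reduced to a vector of numeric feature values, each weak classifier is a threshold test on a single feature (a decision stump), and the weight update and coefficients follow discrete AdaBoost. The names `boost`, `best_stump`, `stump_predict`, and `classify` and the toy data are hypothetical illustrations, not taken from the cited detectors.

```python
import math
from typing import List, Sequence, Tuple

# A weak classifier looks at one feature: (feature index, threshold, polarity).
Stump = Tuple[int, float, int]


def stump_predict(stump: Stump, x: Sequence[float]) -> int:
    """Return +polarity if the chosen feature reaches the threshold, else -polarity."""
    feature, threshold, polarity = stump
    return polarity if x[feature] >= threshold else -polarity


def best_stump(X, y, w) -> Tuple[Stump, float]:
    """Try every (feature, threshold, polarity) single-feature classifier and
    return the one with the lowest weighted training error."""
    best: Stump = (0, 0.0, 1)
    best_err = float("inf")
    for feature in range(len(X[0])):
        for threshold in sorted({x[feature] for x in X}):
            for polarity in (1, -1):
                stump = (feature, threshold, polarity)
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict(stump, xi) != yi)
                if err < best_err:
                    best, best_err = stump, err
    return best, best_err


def boost(X, y, rounds: int) -> List[Tuple[float, Stump]]:
    """Discrete AdaBoost over single-feature stumps; returns (alpha, stump) pairs."""
    n = len(X)
    w = [1.0 / n] * n                            # uniform initial weights
    ensemble: List[Tuple[float, Stump]] = []
    for _ in range(rounds):
        total = sum(w)
        w = [wi / total for wi in w]             # normalize the weights
        stump, err = best_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)    # keep log() finite for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)  # smaller error -> larger coefficient
        ensemble.append((alpha, stump))
        # With alpha > 0, misclassified images gain weight and correct ones lose it.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
    return ensemble


def classify(ensemble, x: Sequence[float]) -> int:
    """Strong classifier: sign of the alpha-weighted vote of the selected stumps."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


if __name__ == "__main__":
    # Made-up toy data: three features per "image"; only feature 1 separates the classes.
    X = [[0.2, 1.0, 0.5], [0.9, 2.0, 0.1], [0.4, 3.0, 0.8],
         [0.7, 6.0, 0.3], [0.1, 7.0, 0.9], [0.6, 8.0, 0.2]]
    y = [-1, -1, -1, 1, 1, 1]
    model = boost(X, y, rounds=3)
    print("selected features:", [stump[0] for _, stump in model])
    print("training predictions:", [classify(model, x) for x in X])
```

On this toy data a single feature separates the classes, so every round selects the same stump on feature 1. On realistic data, successive rounds tend to pick different features, because the reweighting shifts attention to the images the earlier stumps got wrong. The exhaustive threshold search is the simplest choice and costs roughly features × distinct values × examples per round.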

[15] Another application of boosting for binary categorization is a system that detects pedestrians using patterns of motion and appearance.

Also, for a given performance level, the total number of features required by the feature-sharing detectors (and therefore the run-time cost of the classifier) is observed to scale approximately logarithmically with the number of classes, i.e., more slowly than the linear growth of the non-sharing case.

Similar results are shown in the paper "Incremental learning of object detectors using a visual shape alphabet", although the authors used AdaBoost for boosting.

Convex algorithms, such as AdaBoost and LogitBoost, can be "defeated" by random classification noise, in the sense that they fail to learn basic combinations of weak hypotheses that are otherwise learnable.