Programming paradigm

Other paradigms are about the way code is organized, such as grouping into units that include both state and behavior.

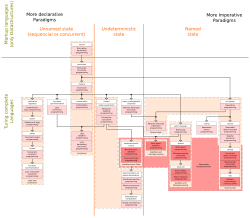

Languages categorized as belonging to the imperative paradigm have two main features. First, they state the order in which operations occur, with constructs that explicitly control that order. Second, they allow side effects, in which state can be modified at one point in time, within one unit of code, and then later read at a different point in time inside a different unit of code.
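As a minimal sketch of these two features, the Python fragment below fixes the order of operations explicitly and lets one function modify state that another function reads later. The names `counter`, `record`, and `report` are hypothetical and chosen only for illustration.

```python
# Shared mutable state, visible to every function in this module.
counter = {"total": 0}

def record(amount):
    # Side effect: this unit of code modifies the shared state.
    counter["total"] += amount

def report():
    # A different unit of code reads the state at a later point in time.
    return f"total so far: {counter['total']}"

# The statements run in exactly the order written.
record(5)
record(7)
message = report()
print(message)  # total so far: 12
```

Moving the call to `report()` above the two `record` calls would change the result, which is what it means for the order of operations to be part of the program.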

In contrast, languages in the declarative paradigm do not state the order in which to execute operations.

Instead, they supply a number of available operations in the system, along with the conditions under which each is allowed to execute.
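One way to picture this is a table of operations, each paired with the condition under which it may run, and a scheduler that is free to pick any operation whose condition holds. The sketch below is only an illustration written in Python, which is itself an imperative host language; the rule table is the declarative part. The names `RULES` and `run` are hypothetical, and the example assumes positive integer inputs (the rules compute a greatest common divisor by repeated subtraction).

```python
import random

# Each rule is (condition, operation). No order among the rules is stated.
RULES = [
    (lambda s: s["x"] > s["y"], lambda s: {**s, "x": s["x"] - s["y"]}),
    (lambda s: s["y"] > s["x"], lambda s: {**s, "y": s["y"] - s["x"]}),
]

def run(state, rules=RULES, rng=None):
    """Apply any enabled rule until no rule's condition holds."""
    if rng is None:
        rng = random.Random()
    while True:
        enabled = [operation for condition, operation in rules if condition(state)]
        if not enabled:
            return state
        # The choice among enabled operations is deliberately arbitrary.
        state = rng.choice(enabled)(state)

result = run({"x": 48, "y": 18})
print(result)  # {'x': 6, 'y': 6}
```

The final state does not depend on which enabled rule the scheduler picks, so the rule table never has to say what happens first.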

Partly for this reason, new paradigms are often regarded as doctrinaire or overly rigid by those accustomed to older ones.

As a consequence, no one parallel programming language maps well to all computation problems.

An early approach consciously identified as such is structured programming, advocated since the mid-1960s.

Assembly language introduced mnemonics for machine instructions and memory addresses.

In the 1960s, assembly languages were developed to support library COPY, quite sophisticated conditional macro generation and preprocessing abilities, CALL to subroutines, external variables, and common sections (globals). These features enabled significant code reuse and isolation from hardware specifics via the use of logical operators such as READ/WRITE/GET/PUT.

The efficacy and efficiency of such a program are therefore highly dependent on the programmer's skill.

Thus, differing programming paradigms can be seen more as motivational memes of their advocates than as necessarily representing progress from one level to the next.

Precise comparisons of the efficacy of competing paradigms are frequently made more difficult by new and differing terminology applied to similar entities and processes, together with numerous implementation distinctions across languages.

The program is structured as a set of properties to find in the expected result, not as a procedure to follow.

Given a database or a set of rules, the computer tries to find a solution matching all the desired properties.
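The idea can be sketched in Python by writing down only the properties the result must have and leaving the search to a generic routine. This is a brute-force illustration under stated assumptions, not how a real constraint solver or database engine works; the names `properties` and `solve` and the small integer domain are hypothetical.

```python
from itertools import product

# Desired properties of the result (a, b). Nothing here says how to find it.
properties = [
    lambda a, b: a + b == 10,
    lambda a, b: a * b == 21,
    lambda a, b: a < b,
]

def solve(domain, properties):
    """Return every pair from the domain that satisfies all properties."""
    return [
        candidate
        for candidate in product(domain, repeat=2)
        if all(prop(*candidate) for prop in properties)
    ]

solutions = solve(range(11), properties)
print(solutions)  # [(3, 7)]
```

Adding or removing a property changes what counts as a solution without touching the search routine.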

Functional languages discourage changes in the value of variables through assignment, making a great deal of use of recursion instead.
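A small sketch of this style in Python: the function below sums a sequence without ever reassigning a variable, using recursion where an imperative version would update an accumulator in a loop. The name `total` is hypothetical, and Python is used only for illustration; it is not a purely functional language and limits recursion depth.

```python
def total(numbers):
    """Sum a sequence recursively, with no variable ever reassigned."""
    if not numbers:
        return 0
    head, *tail = numbers
    return head + total(tail)

print(total([1, 2, 3, 4]))  # 10
```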

The logic programming paradigm views computation as automated reasoning over a body of knowledge.

Facts about the problem domain are expressed as logic formulas, and programs are executed by applying inference rules over them until an answer to the problem is found, or the set of formulas is proved inconsistent.
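The following Python sketch illustrates the general idea with simple forward chaining: it starts from known facts and applies an inference rule repeatedly until nothing new can be derived. This is a deliberately simplified stand-in, not the resolution procedure used by logic languages such as Prolog, and it does not attempt to detect inconsistency. The relation names and the function `infer_ancestors` are hypothetical.

```python
def infer_ancestors(parents):
    """Derive all ancestor facts from a set of (parent, child) pairs.

    Rules applied:
      parent(X, Y)                   => ancestor(X, Y)
      parent(X, Y) and ancestor(Y, Z) => ancestor(X, Z)
    """
    facts = {("ancestor", x, y) for (x, y) in parents}
    while True:
        derived = {
            ("ancestor", x, z)
            for (x, y) in parents
            for (_, y2, z) in facts
            if y == y2
        } - facts
        if not derived:
            return facts  # fixed point: no rule yields a new fact
        facts |= derived

parents = {("alice", "bob"), ("bob", "carol"), ("carol", "dave")}
facts = infer_ancestors(parents)
print(("ancestor", "alice", "dave") in facts)  # True
```

The query at the end is answered by checking the derived facts, so the program never spells out how to walk the family tree.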

[4] Programs can thus effectively modify themselves, and appear to "learn", making them suited for applications such as artificial intelligence, expert systems, natural-language processing and computer games.
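As a rough illustration of a program treating program text as data, the Python sketch below parses an expression into a syntax tree, rewrites the tree, and evaluates both versions. It uses the standard `ast` module; the class `SwapAddForMul` and the function `rewrite_and_run` are hypothetical names, and the rewrite rule itself is arbitrary.

```python
import ast

class SwapAddForMul(ast.NodeTransformer):
    """Rewrite every `a + b` in a parsed expression to `a * b`."""

    def visit_BinOp(self, node):
        self.generic_visit(node)  # rewrite nested operations first
        if isinstance(node.op, ast.Add):
            node.op = ast.Mult()
        return node

def rewrite_and_run(source, env):
    """Evaluate an expression before and after rewriting its syntax tree."""
    tree = ast.parse(source, mode="eval")
    original = eval(compile(tree, "<original>", "eval"), {}, dict(env))
    new_tree = ast.fix_missing_locations(SwapAddForMul().visit(tree))
    rewritten = eval(compile(new_tree, "<rewritten>", "eval"), {}, dict(env))
    return original, rewritten

print(rewrite_and_run("x + y", {"x": 3, "y": 4}))  # (7, 12)
```

Here the expression is an ordinary data structure that the program inspects and changes before running it.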