Quantile regression

There is also a method for predicting the conditional geometric mean of the response variable.[1]

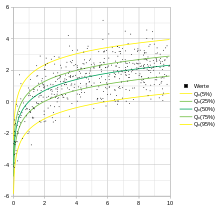

Different measures of central tendency and statistical dispersion can be used to more comprehensively analyze the relationship between variables.

The need for and success of quantile regression in ecology has been attributed to the complexity of interactions between different factors leading to data with unequal variation of one variable for different ranges of another variable.[4][5]

The idea of estimating a median regression slope, a major theorem about minimizing the sum of absolute deviations, and a geometrical algorithm for constructing median regression were proposed in 1760 by Ruđer Josip Bošković, a Jesuit Catholic priest from Dubrovnik.[2]: 4 [6]

He was interested in the ellipticity of the earth, building on Isaac Newton's suggestion that its rotation could cause it to bulge at the equator with a corresponding flattening at the poles.[7]

He finally produced the first geometric procedure for determining the equator of a rotating planet from three observations of a surface feature.

More importantly for quantile regression, he developed the first evidence of the least absolute criterion, preceding the least squares method introduced by Legendre in 1805 by nearly fifty years.[8]

Other thinkers, such as Pierre-Simon Laplace, who developed the so-called "méthode de situation", began building upon Bošković's idea.

This led to Francis Edgeworth's plural median,[9] a geometric approach to median regression that is recognized as the precursor of the simplex method.[8]

The works of Bošković, Laplace, and Edgeworth were recognized as a prelude to Roger Koenker's contributions to quantile regression.

Median regression computations for larger data sets are quite tedious compared to the least squares method, which is why median regression was historically unpopular among statisticians until the widespread adoption of computers in the latter part of the 20th century.

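Let $Y$ be a real-valued random variable with cumulative distribution function $F_Y(y) = P(Y \le y)$. The $\tau$th quantile of $Y$, for $\tau \in (0, 1)$, is

$$q_Y(\tau) = F_Y^{-1}(\tau) = \inf\{y : F_Y(y) \ge \tau\}.$$

Define the loss function as $\rho_\tau(m) = m\,(\tau - \mathbb{I}(m < 0))$, where $\mathbb{I}$ is the indicator function. A specific quantile can be found by minimizing the expected loss of $Y - u$ with respect to $u$ (pp. 5–6):

$$q_Y(\tau) = \underset{u}{\arg\min}\; E\big[\rho_\tau(Y - u)\big] = \underset{u}{\arg\min}\left\{(\tau - 1)\int_{-\infty}^{u} (y - u)\,dF_Y(y) + \tau \int_{u}^{\infty} (y - u)\,dF_Y(y)\right\}.$$

This can be shown by computing the derivative of the expected loss with respect to $u$ via an application of the Leibniz integral rule, setting it to 0, and letting $q_\tau$ be the solution of

$$0 = (1 - \tau)\int_{-\infty}^{q_\tau} dF_Y(y) - \tau \int_{q_\tau}^{\infty} dF_Y(y),$$

which reduces to $F_Y(q_\tau) = \tau$.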
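The minimization can be checked numerically on a sample. The following is a minimal sketch, assuming the usual tilted absolute value (check) loss $\rho_\tau(m) = m(\tau - \mathbb{I}(m < 0))$; the function names, the Gaussian sample, and the choice $\tau = 0.9$ are illustrative assumptions, not part of the original text. It searches the sample points for the value that minimizes the average loss and then measures which fraction of the sample lies at or below it.

```python
import random


def pinball_loss(residual, tau):
    """Tilted absolute value (check) loss: rho_tau(m) = m * (tau - 1[m < 0])."""
    return residual * (tau - (1.0 if residual < 0 else 0.0))


def empirical_quantile_by_minimization(sample, tau):
    """Return the sample point u that minimizes the average loss of y - u."""
    def mean_loss(u):
        return sum(pinball_loss(y - u, tau) for y in sample) / len(sample)

    # The average loss is convex and piecewise linear in u, so a minimizer
    # can always be found among the observed values themselves.
    return min(sorted(sample), key=mean_loss)


# Illustrative data: 1001 draws from a standard normal (fixed seed).
rng = random.Random(0)
sample = [rng.gauss(0.0, 1.0) for _ in range(1001)]
tau = 0.9

q_hat = empirical_quantile_by_minimization(sample, tau)
fraction_below = sum(y <= q_hat for y in sample) / len(sample)
print(q_hat, fraction_below)  # fraction_below should be close to tau
```

The brute-force search over all sample points is quadratic in the sample size and is used here only for transparency; practical implementations typically rely on sorting or linear programming instead.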

Similarly, if we reduce q by 1 unit, the change in the expected loss is negative if and only if q is larger than the median.

The idea behind the minimization is to count the number of points (weighted with the density) that are larger or smaller than q and then move q to a point where q is larger than a fraction $\tau$ of the points, where $\tau$ is the target quantile level (one half for the median).

A capital $Q$ is used to denote the conditional quantile to indicate that it is a random variable.

The slope parameters of the linear model can be interpreted as weighted averages of the derivatives of the conditional quantile function with respect to the covariates.

Because quantile regression does not normally assume a parametric likelihood for the conditional distributions of Y|X, Bayesian methods rely on a working likelihood.

A convenient choice is the asymmetric Laplacian likelihood,[14] because the mode of the resulting posterior under a flat prior coincides with the usual quantile regression estimates.

Yang, Wang and He[15] provided a posterior variance adjustment for valid inference.
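The link between the asymmetric Laplacian working likelihood and the usual estimates can be made explicit with a short derivation. The notation is assumed here rather than taken from the text: $\rho_\tau(u) = u(\tau - \mathbb{I}(u < 0))$ is the check loss, $x_i^\top \beta$ is a linear predictor, and $\sigma > 0$ is a fixed scale.

```latex
% Asymmetric Laplace density with location \mu, scale \sigma, skewness \tau:
f(y \mid \mu, \sigma, \tau)
  = \frac{\tau(1-\tau)}{\sigma}
    \exp\!\left(-\rho_\tau\!\left(\frac{y-\mu}{\sigma}\right)\right)

% Negative log-likelihood for observations (x_i, y_i), i = 1, \dots, n,
% with \mu_i = x_i^\top \beta, using \rho_\tau(u/\sigma) = \rho_\tau(u)/\sigma:
-\log L(\beta)
  = n \log \sigma - n \log\bigl(\tau(1-\tau)\bigr)
    + \frac{1}{\sigma} \sum_{i=1}^{n} \rho_\tau\!\left(y_i - x_i^\top \beta\right)
```

For fixed $\sigma$ and $\tau$, only the last term depends on $\beta$, so maximizing this likelihood (equivalently, finding the posterior mode under a flat prior) amounts to minimizing the sum of check losses.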

A switch from the squared error to the tilted absolute value loss function (a.k.a. the pinball loss) allows gradient descent-based learning algorithms to learn a specified quantile instead of the mean.

This means that all neural network and deep learning algorithms can be applied to quantile regression,[18][19] which is then referred to as nonparametric quantile regression.[20]

Tree-based learning algorithms are also available for quantile regression (see, e.g., Quantile Regression Forests,[21] a simple generalization of Random Forests).
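How a gradient-based learner targets a quantile rather than the mean can be sketched with the simplest possible model, a single constant prediction. This is an illustrative assumption-laden example, not a method from the cited works: the function names, the toy data (the integers 1 to 100), the learning rate, and the step count are all hypothetical choices. For a richer model, the same subgradient would be multiplied by the derivative of the prediction with respect to each parameter via the chain rule.

```python
def pinball_subgradient(y, prediction, tau):
    """Subgradient of rho_tau(y - prediction) with respect to the prediction.

    It equals (1 - tau) when the prediction overshoots y and -tau otherwise.
    """
    return (1.0 if y < prediction else 0.0) - tau


def fit_constant_quantile(ys, tau, learning_rate=5.0, steps=500):
    """Full-batch subgradient descent on the average tilted absolute value loss."""
    q = sum(ys) / len(ys)  # start from the mean, the squared-error solution
    for _ in range(steps):
        grad = sum(pinball_subgradient(y, q, tau) for y in ys) / len(ys)
        q -= learning_rate * grad
    return q


# Toy data: the integers 1..100, whose 0.8 quantile lies between 80 and 81.
ys = [float(i) for i in range(1, 101)]
q_hat = fit_constant_quantile(ys, tau=0.8)
print(q_hat)  # drifts from the mean (50.5) up into the interval [80, 81]
```

The update moves the prediction up while fewer than a fraction `tau` of the observations lie below it and down otherwise, which is why the iterate settles where roughly 80% of the data are smaller.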

If the response variable is subject to censoring, the conditional mean is not identifiable without additional distributional assumptions, but the conditional quantile is often identifiable.

This is the censored quantile regression model: estimated values can be obtained without making any distributional assumptions, but at the cost of computational difficulty,[24] some of which can be avoided by using a simple three-step censored quantile regression procedure as an approximation.

Censored quantile regression has close links to survival analysis.

The quantile regression loss needs to be adapted in the presence of heteroscedastic errors in order to be efficient.[26]

Numerous statistical software packages include implementations of quantile regression: