Random forest

[1][2] Random forests correct for decision trees' habit of overfitting to their training set.

[3]: 587–588 The first algorithm for random decision forests was created in 1995 by Tin Kam Ho[1] using the random subspace method,[2] which, in Ho's formulation, is a way to implement the "stochastic discrimination" approach to classification proposed by Eugene Kleinberg.

[4][5][6] An extension of the algorithm was developed by Leo Breiman[7] and Adele Cutler,[8] who registered[9] "Random Forests" as a trademark in 2006 (as of 2019[update], owned by Minitab, Inc.).

[10] The extension combines Breiman's "bagging" idea and random selection of features, introduced first by Ho[1] and later independently by Amit and Geman[11] in order to construct a collection of decision trees with controlled variance.

The general method of random decision forests was first proposed by Salzberg and Heath in 1993,[12] with a method that used a randomized decision tree algorithm to create multiple trees and then combine them using majority voting.

A subsequent work along the same lines[2] concluded that other splitting methods behave similarly, as long as they are randomly forced to be insensitive to some feature dimensions.

The explanation of the forest method's resistance to overtraining can be found in Kleinberg's theory of stochastic discrimination.

[4][5][6] The early development of Breiman's notion of random forests was influenced by the work of Amit and Geman[11] who introduced the idea of searching over a random subset of the available decisions when splitting a node, in the context of growing a single tree.

[7] This paper describes a method of building a forest of uncorrelated trees using a CART like procedure, combined with randomized node optimization and bagging.

[3]: 587–588 This comes at the expense of a small increase in the bias and some loss of interpretability, but generally greatly boosts the performance in the final model.

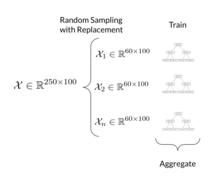

The training algorithm for random forests applies the general technique of bootstrap aggregating, or bagging, to tree learners.

Typically, a few hundred to several thousand trees are used, depending on the size and nature of the training set.

An analysis of how bagging and random subspace projection contribute to accuracy gains under different conditions is given by Ho.

[3]: 592 For regression problems the inventors recommend p/3 (rounded down) with a minimum node size of 5 as the default.

As with ordinary random forests, they are an ensemble of individual trees, but there are two main differences: (1) each tree is trained using the whole learning sample (rather than a bootstrap sample), and (2) the top-down splitting is randomized: for each feature under consideration, a number of random cut-points are selected, instead of computing the locally optimal cut-point (based on, e.g., information gain or the Gini impurity).

The values are chosen from a uniform distribution within the feature's empirical range (in the tree's training set).

Some methods for accomplishing this are: Random forests can be used to rank the importance of variables in a regression or classification problem in a natural way.

The importance for the feature is computed by averaging the difference in out-of-bag error before and after the permutation over all trees.

[31] It is described in the book Classification and Regression Trees by Leo Breiman[32] and is the default implementation in sci-kit learn and R. The definition is:

The sci-kit learn default implementation can report misleading feature importance:[30] A relationship between random forests and the k-nearest neighbor algorithm (k-NN) was pointed out by Lin and Jeon in 2002.

Lin and Jeon show that the shape of the neighborhood used by a random forest adapts to the local importance of each feature.

[34] As part of their construction, random forest predictors naturally lead to a dissimilarity measure among observations.

Random forest dissimilarity has been used in a variety of applications, e.g. to find clusters of patients based on tissue marker data.

[36] Instead of decision trees, linear models have been proposed and evaluated as base estimators in random forests, in particular multinomial logistic regression and naive Bayes classifiers.

By slightly modifying their definition, random forests can be rewritten as kernel methods, which are more interpretable and easier to analyze.

[41] Leo Breiman[42] was the first person to notice the link between random forest and kernel methods.

random vectors in the tree construction are equivalent to a kernel acting on the true margin.

In order to improve the random forest methods and compensate the misestimation, Scornet[41] defined KeRF by

Predictions given by KeRF and random forests are close if the number of points in each cell is controlled: Assume that there exist sequences

Their estimates are close if the number of observations in each cell is bounded: Assume that there exist sequences