Sample maximum and minimum

The minimum and the maximum value are the first and last order statistics (often denoted X(1) and X(n) respectively, for a sample size of n).

The sample maximum and minimum need not be outliers: they count as outliers only if they lie unusually far from the other observations.

The sample maximum and minimum are the least robust statistics: they are maximally sensitive to outliers.

This can be either an advantage or a drawback: if extreme values are real (not measurement errors) and of real consequence, as in applications of extreme value theory such as building dikes or estimating financial loss, then outliers (as reflected in the sample extrema) are important.

On the other hand, if outliers have little or no impact on actual outcomes, then non-robust statistics such as the sample extrema simply cloud the analysis, and more robust quantiles should be used instead, such as the 10th and 90th percentiles (the first and last deciles).
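The contrast in robustness can be illustrated with a small sketch. The helper name `extrema_and_deciles` and the toy data are assumptions made for illustration, and the deciles are computed with the "inclusive" interpolation rule of Python's `statistics.quantiles`; other quantile conventions give slightly different cut points.

```python
import statistics

def extrema_and_deciles(sample):
    """Return (min, max, 10th percentile, 90th percentile) of a sample."""
    # With n=10, statistics.quantiles returns the 9 cut points between deciles.
    cuts = statistics.quantiles(sample, n=10, method="inclusive")
    return min(sample), max(sample), cuts[0], cuts[-1]

# Hypothetical data: the integers 1..21, then the same data with the
# largest value replaced by a gross outlier.
clean = list(range(1, 22))
contaminated = clean[:-1] + [1000]

print(extrema_and_deciles(clean))
print(extrema_and_deciles(contaminated))
```

In this toy example a single corrupted observation moves the sample maximum from 21 to 1000, while the first and last deciles are unchanged.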

In addition to being a component of every statistic that uses all elements of the sample, the sample extrema are important parts of the range, a measure of dispersion, and mid-range, a measure of location.
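As a minimal sketch of these two derived statistics, assuming the usual definitions (range as maximum minus minimum, mid-range as the average of the two); the function names and the data are illustrative only.

```python
def sample_range(sample):
    """Measure of dispersion: distance between the sample extrema."""
    return max(sample) - min(sample)

def mid_range(sample):
    """Measure of location: midpoint between the sample extrema."""
    return (max(sample) + min(sample)) / 2

data = [4, 8, 15, 16, 23, 42]  # hypothetical sample
print(sample_range(data), mid_range(data))
```

Both quantities depend only on the two extreme observations, so they inherit the sensitivity to outliers described above.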

For a sample set, the maximum function is non-smooth and thus non-differentiable.

For optimization problems that occur in statistics it often needs to be approximated by a smooth function that is close to the maximum of the set.
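One common smooth approximation is the LogSumExp function, shown here as an illustrative sketch rather than as the method the text has in mind. The function name `smooth_max` and the sharpness parameter `alpha` are assumptions; larger `alpha` gives a closer but less smooth approximation.

```python
import math

def smooth_max(values, alpha=10.0):
    """LogSumExp approximation: (1/alpha) * log(sum(exp(alpha * x))).

    The result always lies between max(values) and
    max(values) + log(len(values)) / alpha.
    """
    m = max(values)  # subtract the maximum first for numerical stability
    total = sum(math.exp(alpha * (x - m)) for x in values)
    return m + math.log(total) / alpha

values = [1.0, 2.0, 3.0]
for alpha in (1.0, 10.0, 100.0):
    print(alpha, smooth_max(values, alpha))
```

Unlike the exact maximum, this function is differentiable in every argument, which is what gradient-based optimizers need.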

The sample maximum and minimum provide a non-parametric prediction interval: in a sample from a population, or more generally an exchangeable sequence of random variables, each observation is equally likely to be the maximum or minimum.
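A consequence of this symmetry is that, assuming no ties, a further observation falls between the minimum and maximum of the first n observations with probability (n - 1)/(n + 1), since it lands outside only when it is itself the overall maximum or minimum of the n + 1 values. The sketch below checks this by brute force over all orderings; the function names are illustrative.

```python
from fractions import Fraction
from itertools import permutations

def coverage_by_enumeration(n):
    """Exact probability that observation n+1 lies strictly between the
    min and max of the first n, assuming every ordering of n+1 distinct
    values is equally likely (exchangeability, no ties)."""
    inside = 0
    total = 0
    for ranks in permutations(range(n + 1)):
        first_n, new = ranks[:n], ranks[n]
        if min(first_n) < new < max(first_n):
            inside += 1
        total += 1
    return Fraction(inside, total)

def coverage_formula(n):
    """Closed form: (n - 1) / (n + 1)."""
    return Fraction(n - 1, n + 1)

for n in range(2, 7):
    print(n, coverage_by_enumeration(n), coverage_formula(n))
```

For example, with n = 19 observations the interval from the sample minimum to the sample maximum is a 90% prediction interval for the next observation, whatever the underlying distribution.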

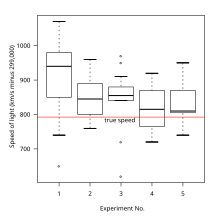

Due to their sensitivity to outliers, the sample extrema cannot reliably be used as estimators unless data is clean – robust alternatives include the first and last deciles.

However, with clean data or in theoretical settings, they can sometimes prove very good estimators, particularly for platykurtic distributions, where for small data sets the mid-range is the most efficient estimator.

For sampling without replacement from a uniform distribution with one or two unknown endpoints (so

If both endpoints are unknown, then the sample range is a biased estimator for the population range, but correcting as for maximum above yields the UMVU estimator.
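The bias can be checked exactly for a small case by enumerating every possible sample. The sketch below assumes the discrete uniform population {1, ..., N} and a sample of size k drawn without replacement, and uses the standard correction m(1 + 1/k) - 1 for the sample maximum m; that formula is supplied here as an assumption, since it is not written out in the text above.

```python
from fractions import Fraction
from itertools import combinations

def exact_means(N, k):
    """Average several statistics over every size-k subset of {1, ..., N}.

    Returns (mean of max, mean of range, mean of corrected max).
    """
    subsets = list(combinations(range(1, N + 1), k))
    count = len(subsets)
    mean_max = Fraction(sum(max(s) for s in subsets), count)
    mean_range = Fraction(sum(max(s) - min(s) for s in subsets), count)
    # Assumed correction for the maximum: m * (1 + 1/k) - 1
    corrected = sum(Fraction(max(s) * (k + 1), k) - 1 for s in subsets)
    mean_corrected_max = Fraction(corrected, count)
    return mean_max, mean_range, mean_corrected_max

mean_max, mean_range, mean_corrected_max = exact_means(N=10, k=3)
print(mean_max, mean_range, mean_corrected_max)
```

For N = 10 and k = 3 the raw maximum averages 33/4 and the raw range averages 11/2, both short of the true values 10 and 9, while the corrected maximum averages exactly 10.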

The reason the sample extrema are sufficient statistics is that the conditional distribution of the non-extreme samples is just the distribution for the uniform interval between the sample maximum and minimum – once the endpoints are fixed, the values of the interior points add no additional information.

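The sample extrema can serve as a simple check of normality: if they lie 6 standard deviations (6 sigma) from the mean, normality has failed significantly, because a normally distributed observation that far from the mean is extraordinarily rare.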

These tests of normality can be applied if one faces kurtosis risk, for instance.
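A rough sketch of such a check follows: standardize the sample extrema using the sample mean and standard deviation, then compare against the two-sided tail probability of a standard normal. The function names are illustrative, and the normal tail is only an approximation here, since the mean and standard deviation are themselves estimated from the sample.

```python
import math
import statistics

def extreme_z_scores(sample):
    """Standardized sample minimum and maximum (in units of sample sigma)."""
    mean = statistics.fmean(sample)
    sd = statistics.stdev(sample)
    return (min(sample) - mean) / sd, (max(sample) - mean) / sd

def two_sided_normal_tail(z):
    """P(|Z| >= z) for a standard normal Z, via the complementary error function."""
    return math.erfc(abs(z) / math.sqrt(2))

for z in (3, 4, 5, 6):
    print(z, two_sided_normal_tail(z))
```

The per-observation probability of a 6-sigma deviation is on the order of 2 in a billion, so even across a large sample such an extreme would be very surprising under normality.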

Sample extrema play two main roles in extreme value theory.

However, caution is needed when using sample extrema as guidelines: in heavy-tailed distributions or for non-stationary processes, extreme events can be significantly more extreme than any previously observed event.