Prediction interval

In statistical inference, specifically predictive inference, a prediction interval is an estimate of an interval in which a future observation will fall, with a certain probability, given what has already been observed.

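For example, consider a six-sided die with face values 1 to 6. A confidence interval for the expected face value is centered near 3.5 and becomes narrower as the sample size grows. However, the prediction interval for the next roll will still range approximately from 1 to 6, no matter how many rolls have been observed so far.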
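The contrast between the two kinds of interval can be checked with a small simulation. The sketch below is illustrative only: the function name `die_intervals`, the normal-approximation confidence interval, and the use of empirical percentiles as a stand-in for a prediction interval are all assumptions made for this example.

```python
import random
import statistics

def die_intervals(n_rolls, seed=0):
    """Return (95% confidence interval for the mean, empirical 95% range of rolls)."""
    rng = random.Random(seed)
    rolls = [rng.randint(1, 6) for _ in range(n_rolls)]
    mean = statistics.fmean(rolls)
    # Normal-approximation 95% confidence interval for the mean face value.
    half_width = 1.96 * statistics.stdev(rolls) / n_rolls ** 0.5
    ci = (mean - half_width, mean + half_width)
    # Empirical prediction interval: central 95% of the observed rolls.
    ordered = sorted(rolls)
    lo = ordered[int(0.025 * n_rolls)]
    hi = ordered[min(n_rolls - 1, int(0.975 * n_rolls))]
    return ci, (lo, hi)

for n in (100, 10_000):
    ci, pi = die_intervals(n)
    print(n, ci, pi)
```

The confidence interval shrinks roughly like one over the square root of the number of rolls, while the range covering the next roll stays essentially the full set of faces.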

If one makes the parametric assumption that the underlying distribution is a normal distribution, and has a sample set $\{X_1, \ldots, X_n\}$, then confidence intervals and credible intervals may be used to estimate the population mean $\mu$ and population standard deviation $\sigma$ of the underlying population, while prediction intervals may be used to estimate the value of the next sample variable, $X_{n+1}$.

The concept of prediction intervals need not be restricted to inference about a single future sample value but can be extended to more complicated cases.

For example, in the context of river flooding where analyses are often based on annual values of the largest flow within the year, there may be interest in making inferences about the largest flood likely to be experienced within the next 50 years.

Since prediction intervals are only concerned with past and future observations, rather than unobservable population parameters, they are advocated as a better method than confidence intervals by some statisticians, such as Seymour Geisser, following the focus on observables by Bruno de Finetti.

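A general technique for constructing frequentist prediction intervals is to find a pivotal quantity of the observables $X_1, \ldots, X_n, X_{n+1}$, meaning a function of observables and parameters whose probability distribution does not depend on the parameters, and to invert it to obtain the probability that the future observation $X_{n+1}$ falls in an interval computed from the values observed so far. Such a pivotal quantity, depending only on observables, is called an ancillary statistic.[2]

The usual method of constructing pivotal quantities is to take the difference of two variables that depend on location, so that location cancels out, and then take the ratio of two variables that depend on scale, so that scale cancels out.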

The most familiar pivotal quantity is the Student's t-statistic, which can be derived by this method and is used in the sequel.

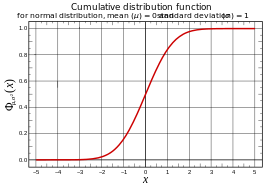

A prediction interval [ℓ,u] for a future observation X in a normal distribution N(μ,σ2) with known mean and variance may be calculated from where

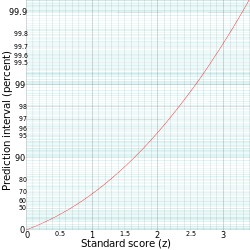

Hence or with z the quantile in the standard normal distribution for which: or equivalently; The prediction interval is conventionally written as: For example, to calculate the 95% prediction interval for a normal distribution with a mean (μ) of 5 and a standard deviation (σ) of 1, then z is approximately 2.
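The worked example with mean 5 and standard deviation 1 can be reproduced numerically. The following is a minimal sketch using Python's standard-library `statistics.NormalDist`; the function name `normal_prediction_interval` and the parameter name `gamma` for the coverage probability are chosen here for illustration.

```python
from statistics import NormalDist

def normal_prediction_interval(mu, sigma, gamma=0.95):
    """Central interval [mu - z*sigma, mu + z*sigma] with coverage gamma."""
    # z satisfies P(-z < Z < z) = gamma, i.e. P(Z > z) = (1 - gamma) / 2.
    z = NormalDist().inv_cdf(1 - (1 - gamma) / 2)
    return mu - z * sigma, mu + z * sigma

lower, upper = normal_prediction_interval(mu=5, sigma=1, gamma=0.95)
print(round(lower, 2), round(upper, 2))  # 3.04 6.96
```

The exact quantile is about 1.96 rather than 2, so the computed limits are slightly narrower than the rounded values 3 and 7.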

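Given a normal distribution with unknown mean $\mu$ but known variance $\sigma^2$, the sample mean $\overline{X}$ of the observations $X_1, \ldots, X_n$ has distribution $N(\mu, \sigma^2/n)$, while the future observation $X_{n+1}$ has distribution $N(\mu, \sigma^2)$. Taking the difference of these cancels the $\mu$ and yields a normal distribution of variance $\sigma^2 + \sigma^2/n$, so that

$$\frac{X_{n+1} - \overline{X}}{\sqrt{\sigma^2 + \sigma^2/n}} \sim N(0, 1).$$

Solving for $X_{n+1}$ gives the prediction distribution $N(\overline{X}, \sigma^2 + \sigma^2/n)$, from which intervals can be computed as before.

This is a predictive confidence interval in the sense that if one uses a quantile range of 100p%, then on repeated applications of this computation, the future observation $X_{n+1}$ will fall in the predicted interval 100p% of the time.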
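For the case of unknown mean and known variance $\sigma^2$, the next observation minus the sample mean is normal with variance $\sigma^2(1 + 1/n)$, which gives a direct recipe for the interval. The sketch below assumes that setting; the function name `pi_known_variance` and the three-point sample are hypothetical and serve only as an illustration.

```python
from statistics import NormalDist, fmean

def pi_known_variance(sample, sigma, p=0.95):
    """Prediction interval for the next draw: unknown mean, known sigma."""
    n = len(sample)
    # Upper quantile of the standard normal for a central interval of coverage p.
    z = NormalDist().inv_cdf((1 + p) / 2)
    # The prediction variance is sigma^2 + sigma^2 / n.
    half_width = z * sigma * (1 + 1 / n) ** 0.5
    x_bar = fmean(sample)
    return x_bar - half_width, x_bar + half_width

lower, upper = pi_known_variance([4.0, 5.0, 6.0], sigma=1.0)
print(lower, upper)
```

Compared with the interval for a fully known distribution, the half-width is inflated by the factor $\sqrt{1 + 1/n}$, which tends to 1 as the sample grows.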

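Conversely, given a normal distribution with known mean $\mu$ but unknown variance $\sigma^2$, the sample variance $s^2$ of the observations $X_1, \ldots, X_n$ has, up to scale, a chi-squared distribution with $n - 1$ degrees of freedom; more precisely,

$$\frac{(n - 1)s^2}{\sigma^2} \sim \chi^2_{n-1},$$

while the future observation $X_{n+1}$ has distribution $N(\mu, \sigma^2)$. Taking the ratio of the future observation residual $X_{n+1} - \mu$ and the sample standard deviation $s$ cancels the $\sigma$, yielding a Student's t-distribution with $n - 1$ degrees of freedom (see its derivation):

$$\frac{X_{n+1} - \mu}{s} \sim T_{n-1}.$$

Solving for $X_{n+1}$ gives the prediction distribution $\mu + s\,T_{n-1}$, from which intervals can be computed as before.

This prediction distribution is more conservative than a normal distribution with the estimated standard deviation $s$ and known mean $\mu$, as it uses the t-distribution instead of the normal distribution, hence yields wider intervals.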

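Combining the above for a normal distribution $N(\mu, \sigma^2)$ with both $\mu$ and $\sigma^2$ unknown yields the ancillary statistic

$$\frac{X_{n+1} - \overline{X}_n}{s_n\sqrt{1 + 1/n}} \sim T_{n-1},$$

where $\overline{X}_n$ and $s_n$ are the sample mean and sample standard deviation of $X_1, \ldots, X_n$. The probability of $X_{n+1}$ falling in a given interval is then

$$P\left(\overline{X}_n - T_a s_n\sqrt{1 + 1/n} \le X_{n+1} \le \overline{X}_n + T_a s_n\sqrt{1 + 1/n}\right) = p,$$

where $T_a$ is the $100\left(\tfrac{1 + p}{2}\right)$th percentile of Student's t-distribution with $n - 1$ degrees of freedom (by symmetry, the negative of the $100\left(\tfrac{1 - p}{2}\right)$th percentile). The numbers $\overline{X}_n \pm T_a s_n\sqrt{1 + 1/n}$ are therefore the endpoints of a 100p% prediction interval for $X_{n+1}$.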
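The coverage claim for the case of unknown mean and unknown variance can be checked by simulation. The sketch below is illustrative: the function name `t_prediction_interval` is hypothetical, and the t quantile is passed in by the caller (here the tabulated upper 97.5% point for 9 degrees of freedom, about 2.262) because the Python standard library has no t-distribution. The interval used is the sample mean plus or minus the quantile times $s\sqrt{1 + 1/n}$.

```python
import random
from statistics import fmean, stdev

def t_prediction_interval(sample, t_quantile):
    """Interval x_bar +/- t * s * sqrt(1 + 1/n) for the next observation.

    t_quantile is the upper-tail quantile of Student's t with n - 1 degrees
    of freedom for the desired coverage; it is supplied by the caller.
    """
    n = len(sample)
    x_bar = fmean(sample)
    s = stdev(sample)  # sample standard deviation (n - 1 denominator)
    half_width = t_quantile * s * (1 + 1 / n) ** 0.5
    return x_bar - half_width, x_bar + half_width

T_975_DF9 = 2.262  # tabulated 97.5th percentile, 9 degrees of freedom (assumed input)

rng = random.Random(42)
trials = 20_000
hits = 0
for _ in range(trials):
    data = [rng.gauss(0.0, 3.0) for _ in range(10)]
    lo, hi = t_prediction_interval(data, T_975_DF9)
    hits += lo <= rng.gauss(0.0, 3.0) <= hi
coverage = hits / trials
print(f"empirical coverage: {coverage:.3f}")  # should be close to 0.95
```

Neither the true mean nor the true standard deviation enters the interval computation; they are used only to generate the simulated data.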

The residual bootstrap method can be used for constructing non-parametric prediction intervals.
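The text names the residual bootstrap without giving details. One common variant, for a straight-line fit, can be sketched as follows; the helper names `fit_line` and `residual_bootstrap_interval`, the percentile rule, and the toy data are all assumptions made for this illustration, not a definitive procedure.

```python
import random
from statistics import fmean

def fit_line(xs, ys):
    """Ordinary least squares fit; returns (intercept, slope)."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / s_xx
    return y_bar - slope * x_bar, slope

def residual_bootstrap_interval(xs, ys, x_new, p=0.9, draws=2000, seed=0):
    """Percentile prediction interval at x_new from resampled residuals."""
    rng = random.Random(seed)
    intercept, slope = fit_line(xs, ys)
    fitted = [intercept + slope * x for x in xs]
    residuals = [y - f for y, f in zip(ys, fitted)]
    simulated = []
    for _ in range(draws):
        # Rebuild a synthetic response by adding resampled residuals to the fit.
        ys_star = [f + rng.choice(residuals) for f in fitted]
        a_star, b_star = fit_line(xs, ys_star)
        # Simulated future value: refitted line at x_new plus one more residual.
        simulated.append(a_star + b_star * x_new + rng.choice(residuals))
    simulated.sort()
    lo = simulated[int((1 - p) / 2 * draws)]
    hi = simulated[min(draws - 1, int((1 + p) / 2 * draws))]
    return lo, hi

# Made-up illustrative data lying roughly on a line.
xs = list(range(1, 11))
ys = [2.3, 3.8, 6.4, 7.9, 10.2, 11.7, 14.5, 15.8, 18.1, 20.4]
print(residual_bootstrap_interval(xs, ys, x_new=11))
```

No distributional form is assumed for the errors here; the spread of the interval comes entirely from the empirical residuals and from the variability of the refitted line.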

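Let us look at the special case of using the minimum and maximum as boundaries for a prediction interval. If one has a sample of independent, identically distributed random variables $\{X_1, \ldots, X_n\}$, then the probability that the next observation $X_{n+1}$ will be the largest is $1/(n + 1)$, since all observations have equal probability of being the maximum (assuming ties occur with probability zero). In the same way, the probability that $X_{n+1}$ will be the smallest is $1/(n + 1)$. The remaining $(n - 1)/(n + 1)$ of the time, $X_{n+1}$ falls between the sample minimum and the sample maximum of $\{X_1, \ldots, X_n\}$.

Thus, denoting the sample maximum and minimum by $M$ and $m$, this yields an $(n - 1)/(n + 1)$ prediction interval of $[m, M]$.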

Formally, this applies not just to sampling from a population, but to any exchangeable sequence of random variables, not necessarily independent or identically distributed.
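The $(n - 1)/(n + 1)$ coverage of the interval between sample minimum and maximum is easy to verify empirically. The sketch below draws from a uniform distribution purely for convenience (any continuous distribution would do); the function name `minmax_coverage` is hypothetical.

```python
import random

def minmax_coverage(n, trials=50_000, seed=0):
    """Fraction of trials in which the next draw lies within [min, max] of n draws."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.random() for _ in range(n)]
        next_value = rng.random()
        hits += min(sample) <= next_value <= max(sample)
    return hits / trials

n = 9
print(minmax_coverage(n), (n - 1) / (n + 1))  # empirical vs. theoretical 0.8
```

With nine observations the theoretical coverage is 8/10, and the simulated fraction should agree to within sampling error.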

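In the formula for the predictive confidence interval no mention is made of the unobservable parameters $\mu$ and $\sigma$ of population mean and standard deviation; the observed sample statistics $\overline{X}_n$ and $s_n$ of sample mean and standard deviation are used instead, and what is estimated is the outcome of future samples.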

When considering prediction intervals, rather than using sample statistics as estimators of population parameters and applying confidence intervals to these estimates, one considers "the next sample" $X_{n+1}$ as itself a statistic, and computes its sampling distribution.

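Suppose the data is being modeled by a straight line (simple linear regression):

$$y_i = \alpha + \beta x_i + \varepsilon_i,$$

where $y_i$ is the response variable, $x_i$ is the explanatory variable, $\varepsilon_i$ is a random error term, and $\alpha$ and $\beta$ are parameters.

Given estimates $\hat{\alpha}$ and $\hat{\beta}$ for the parameters, such as from ordinary least squares, the predicted response value for a given explanatory value $x_d$ is

$$\hat{y}_d = \hat{\alpha} + \hat{\beta} x_d$$

(the point on the regression line), while the actual response would be

$$y_d = \alpha + \beta x_d + \varepsilon_d.$$

The point estimate $\hat{y}_d$ is called the mean response, and is an estimate of the expected value of $y_d$, $E(y \mid x_d)$.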

A prediction interval instead gives an interval in which one expects $y_d$ to fall. This is not necessary if the actual parameters $\alpha$ and $\beta$ are known (together with the error term $\varepsilon_d$), but if one is estimating from a sample, then one may use the standard errors of the estimates for the intercept and slope ($\hat{\alpha}$ and $\hat{\beta}$), as well as their correlation, to compute a prediction interval.

In regression, Faraway (2002, p. 39) distinguishes intervals for predictions of the mean response from intervals for predictions of the observed response; the difference is essentially whether the unity term is included within the square root in the expansion factors above. For details, see Faraway (2002).
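The role of the unity term can be made concrete with the standard textbook formulas for simple linear regression, where the half-width is $t \cdot s\sqrt{1/n + (x_d - \bar{x})^2/S_{xx}}$ for the mean response and $t \cdot s\sqrt{1 + 1/n + (x_d - \bar{x})^2/S_{xx}}$ for a new observation. The sketch below assumes those formulas; the function name `regression_intervals`, the toy data, and the caller-supplied t quantile (tabulated value for 3 degrees of freedom) are illustrative assumptions.

```python
from statistics import fmean

def regression_intervals(xs, ys, x_d, t_quantile):
    """Return (y_hat, mean-response half-width, prediction half-width) at x_d.

    t_quantile is the upper quantile of Student's t with n - 2 degrees of
    freedom for the desired coverage; it is supplied by the caller.
    """
    n = len(xs)
    x_bar, y_bar = fmean(xs), fmean(ys)
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    beta = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / s_xx
    alpha = y_bar - beta * x_bar
    # Residual standard error with n - 2 degrees of freedom.
    sse = sum((y - (alpha + beta * x)) ** 2 for x, y in zip(xs, ys))
    s = (sse / (n - 2)) ** 0.5
    spread = 1 / n + (x_d - x_bar) ** 2 / s_xx
    y_hat = alpha + beta * x_d
    mean_half = t_quantile * s * spread ** 0.5        # no unity term
    pred_half = t_quantile * s * (1 + spread) ** 0.5  # unity term included
    return y_hat, mean_half, pred_half

# Made-up illustrative data.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
T_975_DF3 = 3.182  # tabulated 97.5th percentile, 3 degrees of freedom (assumed input)
y_hat, mean_half, pred_half = regression_intervals(xs, ys, x_d=3, t_quantile=T_975_DF3)
print(y_hat, mean_half, pred_half)
```

The prediction half-width is always the larger of the two, because it accounts for the error term of the new observation in addition to the uncertainty of the fitted line.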

In theoretical work, credible intervals are not often calculated for the prediction of future events, but for inference of parameters – i.e., credible intervals of a parameter, not for the outcomes of the variable itself.

Prediction intervals are commonly used as definitions of reference ranges, such as reference ranges for blood tests to give an idea of whether a blood test is normal or not.