Stimulus modality

The type and location of the sensory receptor activated by the stimulus plays the primary role in coding the sensation.

Several labs that use invertebrate model organisms are expected to provide valuable information to the research community, because these organisms are easier to study and are considered to have decentralized nervous systems.

In mammals, the integration effect occurs when the brain detects weak unimodal signals and combines them to create a multimodal perception.

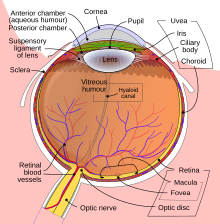

The stimulus modality for vision is light; the human eye can detect only a limited section of the electromagnetic spectrum, between 380 and 760 nanometres.
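As a small illustration of the 380 to 760 nm band, the sketch below checks whether a wavelength falls inside it and converts a wavelength into the energy carried by a single photon, using the standard physical relation E = hc/λ. The function and constant names are illustrative only; the band limits are the ones stated above.

```python
# Visible band as stated in the text, in nanometres.
VISIBLE_MIN_NM = 380.0
VISIBLE_MAX_NM = 760.0

# Planck constant times the speed of light, expressed in eV*nm.
HC_EV_NM = 1239.84198


def is_visible(wavelength_nm: float) -> bool:
    """Return True if the wavelength lies inside the 380-760 nm band."""
    return VISIBLE_MIN_NM <= wavelength_nm <= VISIBLE_MAX_NM


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon, E = h*c / wavelength, in electronvolts."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    return HC_EV_NM / wavelength_nm


for wl in (300.0, 380.0, 550.0, 760.0, 900.0):
    print(f"{wl:5.0f} nm  visible={is_visible(wl)!s:5}  E={photon_energy_ev(wl):.2f} eV")
```

Shorter wavelengths carry more energy per photon: roughly 3.26 eV at the 380 nm end of the band versus about 1.63 eV at the 760 nm end.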

When a particle of light (a photon) hits the photoreceptors of the eye, the two molecules that make up the photopigment, retinal and opsin, come apart from each other and a chain of chemical reactions occurs.

This chain of reactions leads the photoreceptor to send a message to a neuron called the bipolar cell.[5] Photoreceptors transmit this signal through graded changes in membrane potential rather than through action potentials (nerve impulses).

The eye is able to detect a visual stimulus when photons (packets of light) cause a photopigment molecule, primarily rhodopsin, to come apart.

When a person enters a dark room after being in a well-lit area, the eyes need time for a sufficient quantity of rhodopsin to regenerate.[5]

Humans are able to see an array of colours because light in the visible spectrum is made up of different wavelengths (from 380 to 760 nm).
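The delay in adapting to a dark room can be pictured with a deliberately simplified model, assuming that bleached rhodopsin regenerates with first-order (exponential) kinetics. The time constant `TAU_S` below is a hypothetical placeholder chosen for illustration, not a measured value from the source, and the function names are likewise illustrative.

```python
import math

# Hypothetical time constant for rhodopsin regeneration, in seconds.
# Illustrative placeholder only; not a value taken from the source.
TAU_S = 300.0


def fraction_regenerated(t_s: float, tau_s: float = TAU_S) -> float:
    """Fraction of bleached rhodopsin regenerated after t_s seconds in the dark,
    assuming simple first-order (exponential) recovery."""
    if t_s < 0:
        raise ValueError("time must be non-negative")
    return 1.0 - math.exp(-t_s / tau_s)


def time_to_fraction(target: float, tau_s: float = TAU_S) -> float:
    """Seconds needed for the regenerated fraction to reach `target` (0 <= target < 1)."""
    if not 0.0 <= target < 1.0:
        raise ValueError("target must be in [0, 1)")
    return -tau_s * math.log(1.0 - target)


for minutes in (0, 1, 5, 10, 20):
    print(f"{minutes:2d} min in the dark: {fraction_regenerated(60.0 * minutes):.0%} regenerated")
```

Under this assumption the regenerated fraction rises quickly at first and then levels off, so sensitivity in the dark improves gradually rather than returning all at once.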

These slides were preceded by slides that caused either positive emotional arousal (e.g. a bridal couple, a child with a Mickey Mouse doll) or negative emotional arousal (e.g. a bucket of snakes, a face on fire) and were shown for 13 milliseconds, a duration that participants consciously perceived only as a sudden flash of light.

The experiment found that, during the questionnaire round, participants were more likely to assign positive personality traits to the people in pictures preceded by the positive subliminal images, and negative personality traits to the people in pictures preceded by the negative subliminal images.

Refractive errors occur when the light rays entering the eye are unable to converge on a single spot on the retina.

In normal, healthy vision, an individual using both eyes at once should be able to at least partially perceive objects at the left and right edges of the field of view.

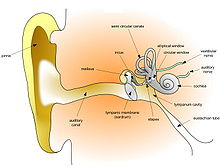

Humans, on average, are able to detect sounds as pitched when they contain periodic or quasi-periodic variations that fall within the range of 30 to 20,000 hertz.[5]

The human ear is able to detect differences in pitch through the movement of auditory hair cells found on the basilar membrane.
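The 30 to 20,000 Hz range refers to the repetition rate of a periodic waveform. A minimal sketch of that idea, under stated assumptions: it estimates the frequency of a clean tone by counting rising zero crossings (a swapped-in technique chosen for brevity, not one described in the source) and then checks the result against the stated range. The helper names, including the test-signal generator `sine`, are hypothetical.

```python
import math

PITCH_MIN_HZ = 30.0      # lower bound stated in the text
PITCH_MAX_HZ = 20000.0   # upper bound stated in the text


def estimate_frequency_hz(samples, sample_rate_hz):
    """Estimate the repetition rate of a periodic waveform by counting rising
    zero crossings (negative -> non-negative). Returns None if fewer than
    two crossings are found."""
    crossings = [i for i in range(1, len(samples))
                 if samples[i - 1] < 0.0 <= samples[i]]
    if len(crossings) < 2:
        return None
    # (number of crossings - 1) full periods lie between the first and last crossing.
    periods = len(crossings) - 1
    span_s = (crossings[-1] - crossings[0]) / sample_rate_hz
    return periods / span_s


def is_in_pitch_range(freq_hz):
    """True if the frequency lies within the stated 30-20,000 Hz range."""
    return PITCH_MIN_HZ <= freq_hz <= PITCH_MAX_HZ


def sine(freq_hz, sample_rate_hz=8000, duration_s=1.0, phase=0.1):
    """Hypothetical test signal: a pure tone sampled at sample_rate_hz."""
    n = int(sample_rate_hz * duration_s)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz + phase)
            for i in range(n)]


for f in (10.0, 200.0, 440.0):
    est = estimate_frequency_hz(sine(f), 8000)
    print(f"{f:6.1f} Hz tone -> estimated {est:.1f} Hz, pitched range: {is_in_pitch_range(est)}")
```

Zero-crossing counting is reliable only for clean, tone-like signals; complex or noisy sounds would need a more robust method, such as autocorrelation.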

High-frequency sounds stimulate the auditory hair cells at the base of the basilar membrane, while medium-frequency sounds cause vibrations of auditory hair cells located at the middle of the basilar membrane.[5]

When a louder sound is heard, more hair cells are stimulated and the axons in the cochlear nerve fire at a higher rate.

The number of hair cells stimulated is thought to communicate loudness for low-frequency sounds.
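The place coding described for the basilar membrane (high frequencies at the base, medium frequencies toward the middle) can be made concrete with Greenwood's frequency-position function, a published empirical fit that is not part of the source. The sketch assumes the constants commonly quoted for the human cochlea (A = 165.4, a = 2.1, k = 0.88), with position x measured as a fraction of the membrane's length from the apex (x = 0) to the base (x = 1); the function names are illustrative.

```python
import math

# Greenwood constants commonly quoted for the human cochlea (assumption).
A = 165.4
ALPHA = 2.1
K = 0.88


def greenwood_frequency_hz(x: float) -> float:
    """Characteristic frequency at relative position x (0 = apex, 1 = base)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 and 1")
    return A * (10 ** (ALPHA * x) - K)


def greenwood_position(freq_hz: float) -> float:
    """Inverse mapping: relative position (0 = apex, 1 = base) whose
    characteristic frequency is freq_hz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return math.log10(freq_hz / A + K) / ALPHA


for x in (0.0, 0.5, 1.0):
    print(f"x = {x:.1f} -> {greenwood_frequency_hz(x):8.0f} Hz")
```

With these constants the characteristic frequency runs from roughly 20 Hz at the apex, through about 1.7 kHz at the midpoint, to roughly 20.7 kHz at the base, matching the base-to-middle ordering described above.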

When a complex sound is heard, it causes different parts of the basilar membrane to be stimulated and to flex simultaneously.[5]

A number of studies have shown that a human fetus will respond to sound stimuli coming from the outside world.[8][9]

In a series of 214 tests conducted on 7 pregnant women, a reliable increase in fetal movement was detected in the minute directly following the application of a sound stimulus with a frequency of 120 cycles per second (120 Hz) to the mother's abdomen.

Receptor cells project onto different neurons, which convey the message of a particular taste to a single nucleus in the medulla.

The perception of temperature begins when thermal stimuli that deviate from a homeostatic set-point excite temperature-specific sensory nerves in the skin.

Specific cutaneous cold and warm receptors then activate conducting units (nerve fibres) that show a steady discharge even when skin temperature is constant.

To make sense of these stimuli, an organism engages in active exploration, or haptic perception, by moving its hands or other body parts that are in contact with the environment.

Direct and indirect touch send different types of messages to the brain, but both provide information regarding roughness, hardness, stickiness, and warmth.

Tactile perception is achieved through the response of mechanoreceptors (cutaneous receptors) in the skin that detect physical stimuli.

Deep inside the nasal chambers lies the neuroepithelium, a lining that contains the receptors responsible for detecting molecules small enough to be smelled.

For example, olfactory detection thresholds can vary with the length of an odorant molecule's carbon chain.

Additionally, women generally have lower olfactory thresholds than men, and this effect is magnified during a woman's ovulatory period.