Event camera

Instead, each pixel inside an event camera operates independently and asynchronously, reporting changes in brightness as they occur, and staying silent otherwise.

Events may also contain the polarity (increase or decrease) of a brightness change, or an instantaneous measurement of the illumination level,[5] depending on the specific sensor model.
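The per-pixel behaviour described above can be illustrated with a minimal sketch. It is not the circuit of any specific sensor: the `Event` record, the `simulate_pixel` function, the use of log-brightness, and the `threshold` parameter are all assumptions introduced here for illustration, and a real pixel reacts in continuous time rather than on discrete samples.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Event:
    """Hypothetical event record: time, pixel address, and polarity."""
    t: float       # timestamp of the sample that triggered the event
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 for a brightness increase, -1 for a decrease


def simulate_pixel(samples: Sequence[float], times: Sequence[float],
                   x: int, y: int, threshold: float = 0.2) -> List[Event]:
    """Emit events for one pixel from a series of positive brightness samples.

    The pixel keeps a reference log-brightness and fires whenever the current
    log-brightness differs from it by at least `threshold` (an assumed
    contrast threshold). It stays silent while brightness is unchanged.
    """
    events: List[Event] = []
    ref = math.log(samples[0])
    for t, value in zip(times[1:], samples[1:]):
        diff = math.log(value) - ref
        # A large change may cross the threshold several times in one sample.
        while abs(diff) >= threshold:
            polarity = 1 if diff > 0 else -1
            events.append(Event(t, x, y, polarity))
            ref += polarity * threshold  # move the reference toward the new level
            diff = math.log(value) - ref
    return events


if __name__ == "__main__":
    brightness = [1.0, 1.0, 2.0, 2.0, 0.5]
    timestamps = [0.0, 1.0, 2.0, 3.0, 4.0]
    for ev in simulate_pixel(brightness, timestamps, x=3, y=7, threshold=0.5):
        print(ev)
```

Each pixel would run this logic independently of its neighbours, so a static scene produces no output at all, while a moving edge produces a burst of events only at the pixels it crosses.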

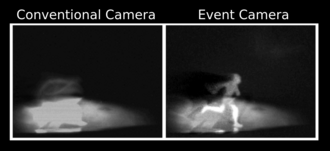

Pixel independence allows these cameras to cope with scenes that contain both brightly and dimly lit regions without having to average across them.

[7] Although the camera reports events with microsecond resolution, the actual temporal resolution (equivalently, the sensing bandwidth) is on the order of tens of microseconds to a few milliseconds, depending on signal contrast, lighting conditions, and sensor design.

[17] These capacitors are distinct from photocapacitors, which are used to store solar energy,[18] and are instead designed to change capacitance under illumination.

[30] Segmentation and detection of moving objects viewed by an event camera may seem trivial, since the sensor performs it on-chip.

approaches to solving this problem include the incorporation of motion-compensation models[33][34] and traditional clustering algorithms.
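As a toy illustration of the clustering idea, the sketch below groups event pixel coordinates with plain k-means. It is not the method of the cited works: the function name `kmeans_events`, the choice of k-means itself, the deterministic initialisation from the first `k` points, and the use of spatial coordinates only (ignoring timestamps and polarity) are all assumptions made here.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def kmeans_events(points: List[Point], k: int,
                  iterations: int = 10) -> Tuple[List[int], List[Point]]:
    """Cluster event (x, y) coordinates into k groups with plain k-means.

    Centroids start at the first k points (an assumed, deterministic
    initialisation chosen to keep the example reproducible).
    """
    centroids = list(points[:k])
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each event to its nearest centroid.
        for i, (px, py) in enumerate(points):
            labels[i] = min(
                range(k),
                key=lambda c: (px - centroids[c][0]) ** 2 + (py - centroids[c][1]) ** 2,
            )
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = (
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                )
    return labels, centroids


if __name__ == "__main__":
    # Two small groups of events, as might come from two moving objects.
    xy = [(0, 0), (10, 10), (1, 0), (0, 1), (11, 10), (10, 11)]
    labels, centroids = kmeans_events(xy, k=2)
    print(labels)
    print(centroids)
```

Clustering on position alone cannot separate objects that overlap in the image plane, which is one reason motion information is often brought in as well.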

considering infrared and other event cameras because of their lower power consumption and reduced heat generation.

These applications include the aforementioned autonomous systems, but also space imaging, security, defense, and industrial monitoring.