Optical flow

Optical flow or optic flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and a scene.[1][2]

Optical flow can also be defined as the distribution of apparent velocities of movement of brightness patterns in an image.[3]

The concept of optical flow was introduced by the American psychologist James J. Gibson in the 1940s to describe the visual stimulus provided to animals moving through the world.[4]

Gibson stressed the importance of optic flow for affordance perception, the ability to discern possibilities for action within the environment.

Followers of Gibson and his ecological approach to psychology have further demonstrated the role of the optical flow stimulus for the perception of movement by the observer in the world; perception of the shape, distance and movement of objects in the world; and the control of locomotion.[5]

The term optical flow is also used by roboticists, encompassing related techniques from image processing and control of navigation, including motion detection, object segmentation, time-to-contact information, focus of expansion calculations, luminance, motion-compensated encoding, and stereo disparity measurement.

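[9] To formalise the brightness constancy assumption, that a point keeps the same intensity as it moves from one frame to the next, consider two consecutive frames from a video sequence with intensity $I(x, y, t)$, where $(x, y)$ are pixel coordinates and $t$ is time. The brightness constancy constraint is then

$$I(x + u, y + v, t + 1) - I(x, y, t) = 0,$$

where $(u, v)$ is the displacement between a point in the first frame and the corresponding point in the second frame.

One can combine this constraint with a smoothness constraint on the flow field to formulate optical flow estimation as an optimisation problem, where the goal is to minimise a cost function of the form

$$E = \iint_\Omega \Psi\big(I(x + u, y + v, t + 1) - I(x, y, t)\big) + \alpha\Psi(|\nabla u|) + \alpha\Psi(|\nabla v|)\,dx\,dy,$$

where $\Omega$ is the extent of the image, $u$ and $v$ are the horizontal and vertical components of the flow, $\nabla$ is the spatial gradient, $\alpha$ is a constant weighting the smoothness terms against the data term, and $\Psi$ is a penalty function.

Because the data term depends non-linearly on $u$ and $v$, this cost is hard to minimise directly. To address this issue, one can use a variational approach and linearise the brightness constancy constraint using a first-order Taylor series approximation.

Specifically, the brightness constancy constraint is approximated as

$$I(x + u, y + v, t + 1) \approx I(x, y, t) + \frac{\partial I}{\partial x}u + \frac{\partial I}{\partial y}v + \frac{\partial I}{\partial t}.$$

For convenience, the derivatives of the image, $\frac{\partial I}{\partial x}$, $\frac{\partial I}{\partial y}$ and $\frac{\partial I}{\partial t}$, are often abbreviated to $I_x$, $I_y$ and $I_t$.

Doing so allows one to rewrite the linearised brightness constancy constraint as $I_x u + I_y v + I_t = 0$.[11] The optimisation problem can now be rewritten as

$$E = \iint_\Omega \Psi(I_x u + I_y v + I_t) + \alpha\Psi(|\nabla u|) + \alpha\Psi(|\nabla v|)\,dx\,dy.$$

For the choice of $\Psi(x) = x^2$, this formulation corresponds to the Horn-Schunck method.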

Since the image data is made up of discrete pixels, these equations are discretised.
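One common discretisation of the quadratic (Horn-Schunck) case is a Jacobi-style iteration: each pixel's flow is first set to the average of its neighbours' flow and then corrected along the image gradient by the residual of the linearised brightness constancy constraint $I_x u + I_y v + I_t = 0$, with $I_x$, $I_y$, $I_t$ the image derivatives. The Python sketch below is a minimal illustration, not a reference implementation; NumPy, greyscale frames as 2D arrays, central-difference derivatives of the average frame, a four-neighbour average with replicated borders, a fixed iteration count, and the names `horn_schunck`, `neighbour_mean` and `blob` are all assumptions.

```python
import numpy as np


def neighbour_mean(f):
    """Mean of the four edge-adjacent neighbours, replicating border values."""
    p = np.pad(f, 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0


def horn_schunck(frame1, frame2, alpha=0.1, n_iter=1000):
    """Dense flow (u, v) between two greyscale frames (hypothetical helper).

    alpha weights smoothness against the data term; as in the usual
    Horn-Schunck update it enters the denominator squared.
    """
    f1 = np.asarray(frame1, dtype=float)
    f2 = np.asarray(frame2, dtype=float)
    # Spatial derivatives of the average frame (central differences);
    # np.gradient returns the axis-0 (row, y) derivative first.
    Iy, Ix = np.gradient(0.5 * (f1 + f2))
    It = f2 - f1  # temporal derivative for a unit time step
    u = np.zeros_like(f1)
    v = np.zeros_like(f1)
    denom = alpha**2 + Ix**2 + Iy**2
    for _ in range(n_iter):
        u_bar = neighbour_mean(u)
        v_bar = neighbour_mean(v)
        # Linearised residual I_x*u + I_y*v + I_t at the local mean flow.
        r = (Ix * u_bar + Iy * v_bar + It) / denom
        u = u_bar - Ix * r
        v = v_bar - Iy * r
    return u, v


# Example: a smooth blob moved 0.5 px to the right between frames.
yy, xx = np.mgrid[0:32, 0:32].astype(float)


def blob(cx, cy, sigma=3.0):
    return np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma**2))


f1, f2 = blob(16.0, 16.0), blob(16.5, 16.0)
u, v = horn_schunck(f1, f2, n_iter=2000)
```

Because the constraint has been linearised, this scheme only recovers displacements that are small compared with the scale of the image structure; inside the blob, `u` should settle near the imposed half-pixel shift and `v` near zero.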

[12] An alternate approach is to discretise the optimisation problem and then perform a search of the possible $(u, v)$ values at each pixel, without linearising the brightness constancy constraint.[14]

This search is often performed using max-flow min-cut (graph cut) algorithms, linear programming or belief propagation methods.
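A minimal sketch of the discrete idea: every pixel picks, from a small set of integer displacements, the one with the lowest matching cost, so no linearisation is needed. To stay short, the sketch drops the pairwise smoothness term that graph-cut, linear-programming or belief-propagation solvers would optimise, and instead sums absolute differences over a small window, which makes it a winner-take-all block-matching search. NumPy, the names `discrete_flow_search` and `box_sum`, the search range and the window size are assumptions for illustration.

```python
import numpy as np


def box_sum(a, radius):
    """Sum of `a` over a (2*radius+1)-square window at every pixel (zero padding)."""
    k = 2 * radius + 1
    # Integral image with an extra leading row and column of zeros.
    s = np.pad(np.pad(a, radius).cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    return s[k:, k:] - s[:-k, k:] - s[k:, :-k] + s[:-k, :-k]


def discrete_flow_search(frame1, frame2, max_disp=3, radius=2):
    """Winner-take-all search over integer displacements (hypothetical helper).

    Each pixel gets the (u, v) in [-max_disp, max_disp]^2 minimising the sum of
    absolute differences between frame1 and frame2 sampled at (x + u, y + v),
    aggregated over a small window. There is no pairwise smoothness term.
    """
    f1 = np.asarray(frame1, dtype=float)
    f2 = np.asarray(frame2, dtype=float)
    h, w = f1.shape
    f2p = np.pad(f2, max_disp, mode="edge")  # clamp samples outside the frame
    best_cost = np.full((h, w), np.inf)
    best_u = np.zeros((h, w), dtype=int)
    best_v = np.zeros((h, w), dtype=int)
    for v in range(-max_disp, max_disp + 1):
        for u in range(-max_disp, max_disp + 1):
            # shifted[y, x] == frame2[y + v, x + u] (clamped at the borders)
            shifted = f2p[max_disp + v : max_disp + v + h, max_disp + u : max_disp + u + w]
            cost = box_sum(np.abs(shifted - f1), radius)
            better = cost < best_cost
            best_cost[better] = cost[better]
            best_u[better] = u
            best_v[better] = v
    return best_u, best_v


# Example: random texture moved 2 px right and 1 px up between frames.
rng = np.random.default_rng(0)
frame1 = rng.random((40, 40))
frame2 = np.roll(frame1, shift=(-1, 2), axis=(0, 1))  # frame2[y, x] = frame1[y + 1, x - 2]
u, v = discrete_flow_search(frame1, frame2)
```

Replacing the window aggregation with a cost between the labels of neighbouring pixels turns this into the labelling problem that the solvers named above are designed for.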

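When optical flow estimation is formulated with a parametric model, one makes the assumption that the motion field in each region is fully characterised by a set of parameters.

Therefore, the goal of a parametric model is to estimate the motion parameters that minimise a loss function, which can be written as

$$\hat{\boldsymbol{\alpha}} = \arg\min_{\boldsymbol{\alpha}} \sum_{(x, y) \in \mathcal{R}} g(x, y)\,\rho(x, y, I_1, I_2, u_{\boldsymbol{\alpha}}, v_{\boldsymbol{\alpha}}),$$

where $\boldsymbol{\alpha}$ is the set of parameters determining the motion in the region $\mathcal{R}$, $\rho$ is a data cost term, $g$ is a weighting function that determines the influence of pixel $(x, y)$ on the total cost, and $I_1$ and $I_2$ are two consecutive frames.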
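As an illustration of a parametric model, the sketch below assumes an affine motion model, $u = a_0 + a_1 x + a_2 y$ and $v = a_3 + a_4 x + a_5 y$, takes the data cost to be the squared linearised brightness constancy residual $I_x u + I_y v + I_t$, and gives every pixel equal weight, so the minimisation reduces to a linear least-squares problem in six parameters. The affine choice, NumPy, and the names `affine_flow` and `blob` are assumptions; coordinates are measured from the image centre so that $(a_0, a_3)$ is the flow there.

```python
import numpy as np


def affine_flow(frame1, frame2):
    """Least-squares affine flow parameters (a0, ..., a5) for the whole image.

    Model (an assumption): u = a0 + a1*x + a2*y, v = a3 + a4*x + a5*y, with
    x, y measured from the image centre. Data cost: squared linearised
    residual I_x*u + I_y*v + I_t, with weight 1 for every pixel.
    """
    f1 = np.asarray(frame1, dtype=float)
    f2 = np.asarray(frame2, dtype=float)
    Iy, Ix = np.gradient(0.5 * (f1 + f2))
    It = f2 - f1
    h, w = f1.shape
    y, x = np.mgrid[0:h, 0:w].astype(float)
    x -= (w - 1) / 2.0
    y -= (h - 1) / 2.0
    # Each pixel contributes one linear equation in the six parameters.
    A = np.stack([Ix, Ix * x, Ix * y, Iy, Iy * x, Iy * y], axis=-1).reshape(-1, 6)
    b = -It.ravel()
    params, *_ = np.linalg.lstsq(A, b, rcond=None)
    return params


# Example: a blob translated by (u, v) = (0.3, -0.2), a special case of affine motion.
yy, xx = np.mgrid[0:32, 0:32].astype(float)


def blob(cx, cy, sigma=4.0):
    return np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma**2))


params = affine_flow(blob(16.0, 16.0), blob(16.3, 15.8))
```

For a pure translation the slope terms should come out near zero and $(a_0, a_3)$ near the imposed shift; a rotation would instead show up mainly in $a_2$ and $a_4$, and a uniform zoom in $a_1$ and $a_5$.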

The Lucas-Kanade method uses the original brightness constancy constraint as the data cost term and selects
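The sketch below shows the usual local form of Lucas-Kanade under stated assumptions: a single translation $(u, v)$ per square window, the linearised brightness constancy residual as the data cost, and uniform weights inside the window. This gives a 2-by-2 linear system per pixel built from window sums of $I_x^2$, $I_x I_y$, $I_y^2$, $I_x I_t$ and $I_y I_t$. NumPy, the window radius, the conditioning threshold `min_det` and the names `lucas_kanade`, `box_sum` and `blob` are illustrative; pixels whose system is nearly singular (the aperture problem) are returned as NaN.

```python
import numpy as np


def box_sum(a, radius):
    """Sum of `a` over a (2*radius+1)-square window at every pixel (zero padding)."""
    k = 2 * radius + 1
    s = np.pad(np.pad(a, radius).cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    return s[k:, k:] - s[:-k, k:] - s[k:, :-k] + s[:-k, :-k]


def lucas_kanade(frame1, frame2, radius=3, min_det=1e-6):
    """Per-pixel Lucas-Kanade flow with a uniform square window (hypothetical helper)."""
    f1 = np.asarray(frame1, dtype=float)
    f2 = np.asarray(frame2, dtype=float)
    Iy, Ix = np.gradient(0.5 * (f1 + f2))
    It = f2 - f1
    # Window sums forming the normal equations
    # [Sxx Sxy; Sxy Syy] [u; v] = -[Sxt; Syt].
    Sxx = box_sum(Ix * Ix, radius)
    Sxy = box_sum(Ix * Iy, radius)
    Syy = box_sum(Iy * Iy, radius)
    Sxt = box_sum(Ix * It, radius)
    Syt = box_sum(Iy * It, radius)
    det = Sxx * Syy - Sxy**2
    ok = det > min_det  # nearly singular windows suffer from the aperture problem
    safe = np.where(ok, det, 1.0)
    # Closed-form inverse of the 2-by-2 system.
    u = np.where(ok, (-Syy * Sxt + Sxy * Syt) / safe, np.nan)
    v = np.where(ok, (Sxy * Sxt - Sxx * Syt) / safe, np.nan)
    return u, v


# Example: a blob moved by (u, v) = (0.4, 0.2) between frames.
yy, xx = np.mgrid[0:40, 0:40].astype(float)


def blob(cx, cy, sigma=4.0):
    return np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma**2))


u, v = lucas_kanade(blob(20.0, 20.0), blob(20.4, 20.2))
```

A larger `radius` makes each 2-by-2 system better conditioned, at the cost of assuming constant motion over a bigger area.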

Since 2015, when FlowNet[16] was proposed, learning-based models have been applied to optical flow and have gained prominence.

Initially, these approaches were based on convolutional neural networks (CNNs) arranged in a U-Net architecture.

[17] Motion estimation and video compression have become major aspects of optical flow research.

While the optical flow field is superficially similar to a dense motion field derived from the techniques of motion estimation, optical flow is the study of not only the determination of the optical flow field itself, but also of its use in estimating the three-dimensional nature and structure of the scene, as well as the 3D motion of objects and of the observer relative to the scene; most of these techniques rely on the image Jacobian.[18]

Optical flow has been used by robotics researchers in many areas, such as object detection and tracking, dominant plane extraction from images, movement detection, robot navigation and visual odometry.[6]

Optical flow information has been recognized as being useful for controlling micro air vehicles.

Since awareness of motion and the generation of mental maps of the structure of our environment are critical components of animal (and human) vision, the conversion of this innate ability to a computer capability is similarly crucial in the field of machine vision.

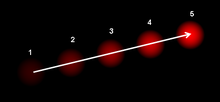

[20] Consider a five-frame clip of a ball moving from the bottom left of a field of vision to the top right.
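A synthetic version of this example can be built with a Gaussian blob standing in for the ball. The sketch below is a minimal illustration in which NumPy, the frame size, the blob width, the speed of one pixel right and one pixel up per frame, and the helper `global_translation` are all assumptions. It fits one translation per pair of consecutive frames by least squares on the linearised brightness constancy constraint, which amounts to a single Lucas-Kanade-style window covering the whole image. Because image rows grow downwards, motion towards the top right appears as positive $u$ and negative $v$.

```python
import numpy as np


def global_translation(frame1, frame2):
    """One least-squares translation (u, v) for the whole image (hypothetical helper)."""
    f1 = np.asarray(frame1, dtype=float)
    f2 = np.asarray(frame2, dtype=float)
    Iy, Ix = np.gradient(0.5 * (f1 + f2))
    It = f2 - f1
    # One equation I_x*u + I_y*v = -I_t per pixel, solved jointly.
    A = np.stack([Ix.ravel(), Iy.ravel()], axis=1)
    (u, v), *_ = np.linalg.lstsq(A, -It.ravel(), rcond=None)
    return float(u), float(v)


size, sigma = 48, 4.0
yy, xx = np.mgrid[0:size, 0:size].astype(float)
# Ball centre per frame: one pixel right and one pixel up each step
# (rows grow downwards, so "up" means decreasing y).
centres = [(18.0 + k, 30.0 - k) for k in range(5)]
frames = [np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma**2)) for cx, cy in centres]

flows = [global_translation(a, b) for a, b in zip(frames, frames[1:])]
for k, (u, v) in enumerate(flows):
    print(f"frame {k} -> {k + 1}: u = {u:+.2f} px, v = {v:+.2f} px")
```

Each of the four printed pairs should show a positive `u` and a negative `v` of roughly one pixel, which is the ball's "up and to the right" motion expressed as flow vectors.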

One configuration is an image sensor chip connected to a processor programmed to run an optical flow algorithm.

Another configuration uses a vision chip, which is an integrated circuit having both the image sensor and the processor on the same die, allowing for a compact implementation.

In some cases the processing circuitry may be implemented using analog or mixed-signal circuits to enable fast optical flow computation with minimal current consumption.[23]

Such circuits may draw inspiration from biological neural circuitry that similarly responds to optical flow.

The use of optical flow sensors in unmanned aerial vehicles (UAVs), for stability and obstacle avoidance, is also an area of current research.