Priority queue

A priority queue must at least support the following operations: In addition, peek (in this context often called find-max or find-min), which returns the highest-priority element but does not modify the queue, is very frequently implemented, and nearly always executes in O(1) time.

(O(1) pull time) To improve performance, priority queues are typically based on a heap, giving O(log n) performance for inserts and removals, and O(n) to build the heap initially from a set of n elements.

[1] Alternatively, when a self-balancing binary search tree is used, insertion and removal also take O(log n) time, although building trees from existing sequences of elements takes O(n log n) time; this is typical where one might already have access to these data structures, such as with third-party or standard libraries.

From a space-complexity standpoint, using self-balancing binary search tree with linked list takes more storage, since it requires to store extra references to other nodes.

For applications that do many "peek" operations for every "extract-min" operation, the time complexity for peek actions can be reduced to O(1) in all tree and heap implementations by caching the highest priority element after every insertion and removal.

This is actually the procedure used by several sorting algorithms, once the layer of abstraction provided by the priority queue is removed.

However, it does not specify how two elements with same priority should be served, and indeed, common implementations will not return them according to their order in the queue.

It implements a max-priority-queue, and has three parameters: a comparison object for sorting such as a function object (defaults to less

Unlike actual STL containers, it does not allow iteration of its elements (it strictly adheres to its abstract data type definition).

STL also has utility functions for manipulating another random-access container as a binary max-heap.

Python's heapq module implements a binary min-heap on top of a list.

Java's library contains a PriorityQueue class, which implements a min-priority-queue as a binary heap.

.NET's library contains a PriorityQueue class, which implements an array-backed, quaternary min-heap.

Go's library contains a container/heap module, which implements a min-heap on top of any compatible data structure.

Apple's Core Foundation framework contains a CFBinaryHeap structure, which implements a min-heap.

Priority queuing can be used to manage limited resources such as bandwidth on a transmission line from a network router.

Many modern protocols for local area networks also include the concept of priority queues at the media access control (MAC) sub-layer to ensure that high-priority applications (such as VoIP or IPTV) experience lower latency than other applications which can be served with best-effort service.

Examples include IEEE 802.11e (an amendment to IEEE 802.11 which provides quality of service) and ITU-T G.hn (a standard for high-speed local area network using existing home wiring (power lines, phone lines and coaxial cables).

Usually a limitation (policer) is set to limit the bandwidth that traffic from the highest priority queue can take, in order to prevent high priority packets from choking off all other traffic.

Once a node is visited, if it comes up in the heap again (having had a lower priority number associated with it earlier), it is popped-off and ignored.

If memory limitations make best-first search impractical, variants like the SMA* algorithm can be used instead, with a double-ended priority queue to allow removal of low-priority items.

The Real-time Optimally Adapting Meshes (ROAM) algorithm computes a dynamically changing triangulation of a terrain.

Using min heap priority queue in Prim's algorithm to find the minimum spanning tree of a connected and undirected graph, one can achieve a good running time.

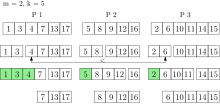

One possible change is to allow the concurrent access of multiple processors to the same priority queue.

[25][26] In addition, an atomic synchronization primitive, CAS, is used to make the skip list lock-free.

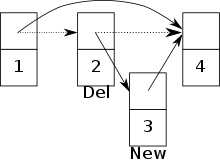

If the concurrent access to a priority queue is allowed, conflicts may arise between two processes.

For instance, k_decrease-key can be done by first applying difference and then union, which first deletes the elements and then inserts them back with the updated keys.

All these operations are highly parallel, and the theoretical and practical efficiency can be found in related research papers.

This saves moving elements back and forth all the time between the result set and the local queues.

So using k-element operations destroys the label setting property of Dijkstra's algorithm.