Theoretical computer science

With mounting biological data supporting this hypothesis with some modification, the fields of neural networks and parallel distributed processing were established.

In 1971, Stephen Cook and, working independently, Leonid Levin, proved that there exist practically relevant problems that are NP-complete – a landmark result in computational complexity theory.

[2] Modern theoretical computer science research is based on these basic developments, but includes many other mathematical and interdisciplinary problems that have been posed, as shown below:

An algorithm is a step-by-step procedure for calculations.
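As one illustration of a step-by-step procedure, the sketch below implements Euclid's algorithm for the greatest common divisor. The choice of example and the function name `gcd` are assumptions made here for illustration; the text does not single out any particular algorithm.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor of two integers by Euclid's algorithm."""
    a, b = abs(a), abs(b)
    # Repeatedly replace (a, b) with (b, a mod b) until the remainder is zero.
    while b != 0:
        a, b = b, a % b
    return a


print(gcd(48, 18))  # 6
```

Each pass through the loop is one well-defined step, and the procedure is guaranteed to stop because the second argument strictly decreases.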

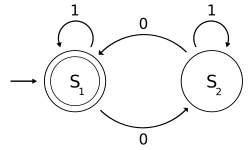

Automata theory is the study of abstract, self-operating machines (automata). It aids the logical understanding of how input is turned into output, with or without intermediate stages of computation (or of any function or process).
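A minimal sketch of one such machine, a deterministic finite automaton, may make the input/output view concrete. The transition table `EVEN_ONES`, the state names, and the helper `accepts` are hypothetical and chosen only for illustration: the machine accepts binary strings that contain an even number of `1` symbols.

```python
def accepts(transitions, start, accepting, word):
    """Run a deterministic finite automaton on `word` and report acceptance."""
    state = start
    for symbol in word:
        # Each input symbol moves the machine to exactly one next state.
        state = transitions[(state, symbol)]
    return state in accepting


# Hypothetical two-state machine tracking the parity of 1s seen so far.
EVEN_ONES = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}

print(accepts(EVEN_ONES, "even", {"even"}, "1010"))  # True
print(accepts(EVEN_ONES, "even", {"even"}, "111"))   # False
```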

Codes are studied by various scientific disciplines – such as information theory, electrical engineering, mathematics, and computer science – for the purpose of designing efficient and reliable data transmission methods.
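To illustrate what reliable transmission can mean in practice, here is a sketch of a threefold repetition code with majority-vote decoding. It is among the simplest error-correcting codes and is chosen here as an assumption for illustration; practical systems use far more efficient codes. The function names `encode` and `decode` are hypothetical.

```python
def encode(bits):
    """Repeat every bit three times."""
    return [b for b in bits for _ in range(3)]


def decode(received):
    """Recover each bit by majority vote over its three copies."""
    return [
        1 if sum(received[i:i + 3]) >= 2 else 0
        for i in range(0, len(received), 3)
    ]


message = [1, 0, 1]
codeword = encode(message)   # [1, 1, 1, 0, 0, 0, 1, 1, 1]
codeword[1] ^= 1             # flip one bit in the first group
codeword[5] ^= 1             # flip one bit in the second group
print(decode(codeword) == message)  # True
```

The code corrects any single flipped bit per group of three, at the cost of tripling the amount of data sent, which is the reliability-versus-efficiency trade-off that coding theory studies.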

The theory formalizes this intuition by introducing mathematical models of computation to study these problems and by quantifying the amount of resources, such as time and storage, needed to solve them.

Other important applications of computational geometry include robotics (motion planning and visibility problems), geographic information systems (GIS) (geometrical location and search, route planning), integrated circuit design (IC geometry design and verification), computer-aided engineering (CAE) (mesh generation), and computer vision (3D reconstruction).

The goal of the supervised learning algorithm is to optimize some measure of performance such as minimizing the number of mistakes made on new samples.
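The sketch below illustrates this goal on a toy problem. It assumes a one-dimensional threshold rule (predict label 1 when `x >= threshold`), picks the threshold that makes the fewest mistakes on labeled training samples, and then counts mistakes on samples it has not seen. The data, the rule, and the names `mistakes` and `fit_threshold` are all made up for illustration.

```python
def mistakes(threshold, samples):
    """Count samples misclassified by the rule 'x >= threshold means label 1'."""
    return sum((x >= threshold) != bool(label) for x, label in samples)


def fit_threshold(train):
    """Choose the training point that, used as threshold, minimizes training mistakes."""
    candidates = sorted(x for x, _ in train)
    return min(candidates, key=lambda t: mistakes(t, train))


# Toy labeled samples: (feature value, label).
train = [(0.5, 0), (1.0, 0), (1.5, 0), (2.5, 1), (3.0, 1), (3.5, 1)]
new_samples = [(0.8, 0), (2.0, 0), (2.8, 1), (3.2, 1)]

threshold = fit_threshold(train)
print(threshold)                         # 2.5
print(mistakes(threshold, new_samples))  # 0
```

Performance is measured on `new_samples` rather than on `train`, since a rule that merely memorizes the training data could score perfectly there and still fail on fresh inputs.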

Cryptography is the practice and study of techniques for secure communication in the presence of third parties (called adversaries).

Formal methods are a particular kind of mathematically based technique for the specification, development and verification of software and hardware systems.

[18] Formal methods are best described as the application of a fairly broad variety of theoretical computer science fundamentals, in particular logic calculi, formal languages, automata theory, and program semantics, but also type systems and algebraic data types, to problems in software and hardware specification and verification.

Since its inception it has broadened to find applications in many other areas, including statistical inference, natural language processing, cryptography, neurobiology,[20] the evolution[21] and function[22] of molecular codes, model selection in statistics,[23] thermal physics,[24] quantum computing, linguistics, plagiarism detection,[25] pattern recognition, anomaly detection and other forms of data analysis.

The field is at the intersection of mathematics, statistics, computer science, physics, neurobiology, and electrical engineering.

Its impact has been crucial to the success of the Voyager missions to deep space, the invention of the compact disc, the feasibility of mobile phones, the development of the Internet, the study of linguistics and of human perception, the understanding of black holes, and numerous other fields.

[27] Such algorithms operate by building a model based on inputs[28]:2 and using that to make predictions or decisions, rather than following only explicitly programmed instructions.

It has strong ties to artificial intelligence and optimization, which deliver methods, theory and application domains to the field.

Machine learning is employed in a range of computing tasks where designing and programming explicit, rule-based algorithms is infeasible.

Example applications include spam filtering, optical character recognition (OCR),[29] search engines and computer vision.

Computational paradigms studied by natural computing are abstracted from natural phenomena as diverse as self-replication, the functioning of the brain, Darwinian evolution, group behavior, the immune system, the defining properties of life forms, cell membranes, and morphogenesis.

The Zuse-Fredkin thesis, dating back to the 1960s, states that the entire universe is a huge cellular automaton which continuously updates its rules.
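For readers unfamiliar with the term, a cellular automaton is a grid of cells that are all updated in lockstep from the states of their neighbours. The sketch below shows a one-dimensional elementary cellular automaton with wrap-around edges. The choice of Rule 90 and the function name `step` are assumptions for illustration only; this is not a model of the thesis itself.

```python
def step(cells, rule=90):
    """Advance a 1-D elementary cellular automaton by one generation."""
    n = len(cells)
    nxt = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        # The three neighbouring bits select one bit of the 8-bit rule number.
        index = (left << 2) | (center << 1) | right
        nxt.append((rule >> index) & 1)
    return nxt


cells = [0, 0, 0, 1, 0, 0, 0]
print(step(cells))  # [0, 0, 1, 0, 1, 0, 0]
```

Under Rule 90 each new cell is the exclusive-or of its two neighbours, so a single live cell spreads outward in both directions.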

Parallelism has been employed for many years, mainly in high-performance computing, but interest in it has grown lately due to the physical constraints preventing frequency scaling.

[43] Parallel computer programs are more difficult to write than sequential ones,[44] because concurrency introduces several new classes of potential software bugs, of which race conditions are the most common.
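A race condition can be sketched with a shared counter. In the hypothetical `Counter` class below, `increment_unsafe` performs an unsynchronized read-modify-write, so two threads may read the same old value and one update can be lost; `increment_safe` guards the same update with a lock. The class, the helper `run`, and the thread counts are assumptions for illustration, and whether the unsafe version actually loses updates on a given run depends on the interpreter and on thread scheduling.

```python
import threading


class Counter:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self):
        # Read and write are separate steps; another thread may run in between.
        current = self.value
        self.value = current + 1

    def increment_safe(self):
        # The lock makes the read-modify-write appear atomic to other threads.
        with self._lock:
            self.value += 1


def run(method_name, threads=4, per_thread=10_000):
    """Call the named Counter method from several threads and return the total."""
    counter = Counter()
    method = getattr(counter, method_name)

    def worker():
        for _ in range(per_thread):
            method()

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return counter.value
```

Calling `run("increment_safe")` always yields `threads * per_thread`, whereas `run("increment_unsafe")` may yield less. The nondeterminism is what makes such bugs hard to reproduce and diagnose.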

It falls within the discipline of theoretical computer science, both depending on and affecting mathematics, software engineering, and linguistics.

It does so by evaluating the meaning of syntactically legal strings defined by a specific programming language, showing the computation involved.
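The idea of assigning meaning to syntactically legal strings can be sketched with a toy language of integer arithmetic. The example below borrows Python's own `ast` parser to decide which strings are syntactically legal and then defines the meaning of each construct recursively. The toy language and the names `meaning` and `evaluate` are assumptions for illustration, not a standard formalism.

```python
import ast
import operator

# Meaning of each binary operator in the toy language.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}


def meaning(node):
    """Map a syntax tree of the toy language to the integer it denotes."""
    if isinstance(node, ast.Expression):
        return meaning(node.body)
    if (isinstance(node, ast.Constant)
            and isinstance(node.value, int)
            and not isinstance(node.value, bool)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        # The meaning of a compound expression is built from its parts.
        return _OPS[type(node.op)](meaning(node.left), meaning(node.right))
    raise ValueError("construct outside the toy language")


def evaluate(source):
    """Parse a string (raising SyntaxError if it is illegal) and return its meaning."""
    return meaning(ast.parse(source, mode="eval"))


print(evaluate("2 + 3 * 4"))    # 14
print(evaluate("(2 + 3) * 4"))  # 20
```

A string such as `"2 +"` is rejected by the parser before any meaning is assigned, which mirrors the separation between syntax and semantics.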

Software applications that perform symbolic calculations are called computer algebra systems. The term system alludes to the complexity of the main applications, which include at least: a method to represent mathematical data in a computer; a user programming language (usually different from the language used for the implementation); a dedicated memory manager; a user interface for the input and output of mathematical expressions; and a large set of routines for common operations such as simplification of expressions, differentiation using the chain rule, polynomial factorization, and indefinite integration.
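One of these routines, differentiation using the chain rule, can be sketched in a few lines. The nested-tuple expression format and the names `diff` and `evaluate` are hypothetical and chosen for brevity; real computer algebra systems use much richer representations and simplify their results.

```python
import math


def diff(expr):
    """Symbolic derivative with respect to x of a nested-tuple expression."""
    if isinstance(expr, (int, float)):
        return 0
    if expr == "x":
        return 1
    op = expr[0]
    if op == "add":
        return ("add", diff(expr[1]), diff(expr[2]))
    if op == "mul":  # product rule
        return ("add", ("mul", diff(expr[1]), expr[2]),
                       ("mul", expr[1], diff(expr[2])))
    if op == "sin":  # chain rule: (sin u)' = cos(u) * u'
        return ("mul", ("cos", expr[1]), diff(expr[1]))
    if op == "cos":  # chain rule: (cos u)' = -sin(u) * u'
        return ("mul", ("mul", -1, ("sin", expr[1])), diff(expr[1]))
    raise ValueError(f"unknown operator: {op!r}")


def evaluate(expr, x):
    """Numerically evaluate an expression at a given value of x."""
    if isinstance(expr, (int, float)):
        return expr
    if expr == "x":
        return x
    op, *args = expr
    vals = [evaluate(a, x) for a in args]
    if op == "add":
        return vals[0] + vals[1]
    if op == "mul":
        return vals[0] * vals[1]
    if op == "sin":
        return math.sin(vals[0])
    if op == "cos":
        return math.cos(vals[0])
    raise ValueError(f"unknown operator: {op!r}")


f = ("sin", ("mul", "x", "x"))  # sin(x * x)
df = diff(f)                    # unsimplified form of cos(x * x) * 2x
```

The derivative `df` is itself an expression tree, so it can be differentiated again or evaluated, for instance with `evaluate(df, 1.5)`. The result is left unsimplified, which is why simplification is listed as a routine in its own right.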