Workplace impact of artificial intelligence

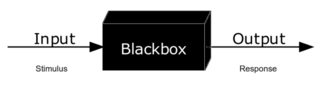

Potential hazards of AI in the workplace may arise from machine learning techniques leading to unpredictable behavior and inscrutability in their decision-making, or from cybersecurity and information privacy issues.

Hazard controls include cybersecurity and information privacy measures, communication and transparency with workers about data usage, and limitations on collaborative robots.

From a workplace safety and health perspective, only "weak" or "narrow" AI that is tailored to a specific task is relevant, as there are many examples that are currently in use or expected to come into use in the near future.

[2][4] A large number of tech workers have been laid off since the start of 2023;[5] many of these job cuts have been attributed to artificial intelligence.

[6] For any potential AI health and safety application to be adopted, it must be accepted by both managers and workers.

[8]: 26–28, 43–45 Alternatively, managers may emphasize increases in economic productivity rather than gains in worker safety and health when implementing AI-based systems.

[11] As an example, call center workers face extensive health and safety risks due to the repetitive and demanding nature of the work and its high rates of micro-surveillance.

[10]: 5–7 Machine learning is used for people analytics to make predictions about worker behavior to assist management decision-making, such as hiring and performance assessment.

[14] For manual material handling workers, predictive analytics and artificial intelligence may be used to reduce the risk of musculoskeletal injury.

[7] Wearable sensors may also enable earlier intervention against exposure to toxic substances than periodic area or breathing zone testing allows.

AI-based digital assistants can manage administrative duties, such as scheduling meetings, sending reminders, processing orders, and organizing travel plans.

This automation can improve workflow efficiency by reducing time spent on repetitive tasks, allowing employees to focus on higher-priority responsibilities.

[15] Digital assistants are especially valuable in streamlining customer service workflows, where they can handle basic inquiries, reducing the demand on human employees.

[15] However, fully integrating these assistants remains challenging because of concerns over data privacy, accuracy, and organizational readiness.

[19] Many hazards of AI are psychosocial in nature because AI can change how work is organized, increasing its complexity and the interaction between different organizational factors.

[10]: 12–13 In complex human‐machine interactions, some approaches to accident analysis may be biased to safeguard a technological system and its developers by assigning blame to the individual human operator instead.

[8]: 5, 29–30 For cobots, sensor malfunctions or unexpected work environment conditions can lead to unpredictable robot behavior and thus to human–robot collisions.

[7] Communication and transparency with workers about data usage are controls for psychosocial hazards arising from security and privacy issues.

[10]: 12–13 Both applications and hazards arising from AI can be considered as part of existing frameworks for occupational health and safety risk management.

The United States Department of Labor's Occupational Information Network is an example of a database with a detailed taxonomy of skills.