Artificial neuron

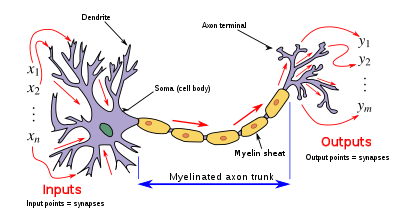

The output is analogous to the axon of a biological neuron, and its value propagates to the input of the next layer, through a synapse.

Its activation function weights are calculated, and its threshold value is predetermined.

In an MCP neural network, all the neurons operate in synchronous discrete time steps.

Each output can be the input to an arbitrary number of neurons, including itself (i.e., self-loops are possible).
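The synchronous update can be illustrated with a short Python sketch. It rests on assumptions not stated in the text: binary neuron states, a hypothetical `weights[i][j]` matrix holding the weight of the connection from neuron `j` to neuron `i`, and one threshold per neuron. Every neuron reads the state at step t and all outputs are replaced together at step t + 1.

```python
def mcp_step(state, weights, thresholds):
    """Advance every neuron by one synchronous time step.

    state[j] is the 0/1 output of neuron j at time t.
    weights[i][j] is the (assumed) weight of the connection j -> i;
    a nonzero weights[i][i] is a self-loop.
    """
    new_state = []
    for i, row in enumerate(weights):
        total = sum(w * s for w, s in zip(row, state))
        new_state.append(1 if total >= thresholds[i] else 0)
    return new_state


# Two neurons: neuron 0 keeps itself on through a self-loop,
# neuron 1 copies neuron 0 with a one-step delay.
weights = [[1, 0],
           [1, 0]]
thresholds = [1, 1]

state = [1, 0]
history = [state]
for _ in range(3):
    state = mcp_step(state, weights, thresholds)
    history.append(state)
```

In this toy network the self-loop acts as a one-bit memory: once neuron 0 is on, it stays on at every later step.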

[4] Furnished with an infinite tape, MCP neural networks can simulate any Turing machine.

However, a significant performance gap exists between biological and artificial neural networks.

Each time the electrical potential inside the soma reaches a certain threshold, a pulse is transmitted down the axon.

The rate at which an axon fires converts directly into the rate at which neighboring cells get signal ions introduced into them.

It is this conversion that allows computer scientists and mathematicians to simulate biological neural networks using artificial neurons which can output distinct values (often from −1 to 1).

Research has shown that unary coding is used in the neural circuits responsible for birdsong production.

[7][8] The use of unary in biological networks is presumably due to the inherent simplicity of the coding.

[12][13] Low-power biocompatible memristors may enable construction of artificial neurons which function at voltages of biological action potentials and could be used to directly process biosensing signals, for neuromorphic computing and/or direct communication with biological neurons.

[14][15][16] Organic neuromorphic circuits made out of polymers, coated with an ion-rich gel to enable a material to carry an electric charge like real neurons, have been built into a robot, enabling it to learn sensorimotorically within the real world, rather than via simulations or virtually.

[17][18] Moreover, artificial spiking neurons made of soft matter (polymers) can operate in biologically relevant environments and enable the synergetic communication between the artificial and biological domains.

[19][20] The first artificial neuron was the Threshold Logic Unit (TLU), or Linear Threshold Unit,[21] first proposed by Warren McCulloch and Walter Pitts in 1943 in *A logical calculus of the ideas immanent in nervous activity*.

Initially, only a simple model was considered, with binary inputs and outputs, some restrictions on the possible weights, and a more flexible threshold value.

Researchers also soon realized that cyclic networks, with feedback through neurons, could define dynamical systems with memory, but most of the research concentrated (and still does) on strictly feed-forward networks because they are easier to work with.

One important and pioneering artificial neural network that used the linear threshold function was the perceptron, developed by Frank Rosenblatt.

This model already considered more flexible weight values in the neurons, and was used in machines with adaptive capabilities.

The representation of the threshold values as a bias term was introduced by Bernard Widrow in 1960 – see ADALINE.

In the late 1980s, when research on neural networks regained strength, neurons with smoother, continuous activation shapes began to be considered.

The possibility of differentiating the activation function allows the direct use of gradient descent and other optimization algorithms for the adjustment of the weights.
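To make this concrete, here is a minimal sketch of gradient descent on a single neuron with a differentiable activation. The logistic sigmoid, the squared-error loss, the learning rate, and the function name `gradient_step` are all assumptions chosen for illustration; the text does not prescribe them.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gradient_step(weights, bias, x, target, lr=0.5):
    """One gradient-descent update for a single sigmoid neuron.

    The loss is the squared error 0.5 * (y - target)**2 (an assumed choice).
    """
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    y = sigmoid(z)
    # Chain rule: dL/dz = (y - target) * sigmoid'(z), with sigmoid'(z) = y * (1 - y).
    delta = (y - target) * y * (1.0 - y)
    new_weights = [w - lr * delta * xi for w, xi in zip(weights, x)]
    new_bias = bias - lr * delta
    return new_weights, new_bias, y


weights, bias = [0.0, 0.0], 0.0
x, target = [1.0, 2.0], 1.0
outputs = []
for _ in range(50):
    weights, bias, y = gradient_step(weights, bias, x, target)
    outputs.append(y)
```

Each update moves the weights against the gradient, so the recorded outputs climb from 0.5 towards the target of 1. A step activation would not allow this, because its derivative is zero wherever it is defined.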

The best-known training algorithm, backpropagation, has been rediscovered several times, but its first development goes back to the work of Paul Werbos.

The "signal" is sent, i.e. the output is set to 1, if the activation meets or exceeds the threshold.
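Written as a formula, the rule reads as follows. The symbols are notation assumed here rather than taken from the text: $u$ is the activation (the weighted sum of the inputs), $\theta$ is the threshold, and the output is taken to be 0 when the unit does not fire.

$$
y = \begin{cases} 1 & \text{if } u \ge \theta \\ 0 & \text{otherwise} \end{cases}
$$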

It is especially useful in the last layer of a network, intended for example to perform binary classification of the inputs.

In this case, the output unit is simply the weighted sum of its inputs, plus a bias term.
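As a small illustration, such a linear unit might be written as below. The function name `linear_unit` and the example numbers are hypothetical.

```python
def linear_unit(inputs, weights, bias):
    """Output of a linear unit: weighted sum of the inputs plus a bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


# 0.5*1.0 + (-1.0)*2.0 + 2.0*3.0 + 0.25
y = linear_unit([1.0, 2.0, 3.0], [0.5, -1.0, 2.0], bias=0.25)
```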

The reason is that the gradients computed by the backpropagation algorithm tend to diminish towards zero as they are propagated backward through layers of sigmoidal neurons, making it difficult to optimize neural networks using multiple layers of sigmoidal neurons.
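The effect can be seen numerically in a deliberately simplified sketch. It assumes a chain of single-neuron logistic layers that all share the same pre-activation `z` and the same weight, so that the backpropagated gradient is scaled by the same factor at every layer. Real networks are not this uniform; the helper `gradient_factor` is purely illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # never exceeds 0.25, which is reached at z = 0


def gradient_factor(depth, z=0.0, weight=1.0):
    """Product of the per-layer factors weight * sigmoid'(z) along the chain."""
    factor = 1.0
    for _ in range(depth):
        factor *= weight * sigmoid_derivative(z)
    return factor


factors = {depth: gradient_factor(depth) for depth in (1, 5, 10, 20)}
```

Even in the most favorable case for the logistic function (z = 0, where its derivative peaks at 0.25), the factor shrinks geometrically with depth unless the weights are large enough to compensate.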

The rectifier (ReLU) activation function was first introduced to a dynamical network by Hahnloser et al. in a 2000 paper in Nature[25] with strong biological motivations and mathematical justifications.

[26] It was first demonstrated in 2011 to enable better training of deeper networks,[27] compared to the activation functions widely used before 2011, i.e., the logistic sigmoid (which is inspired by probability theory; see logistic regression) and its more practical[28] counterpart, the hyperbolic tangent.
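The activation function discussed here is the rectifier, f(x) = max(0, x). The sketch below compares its derivative with those of the logistic sigmoid and the hyperbolic tangent at a few sample points. The sample inputs and the convention of using derivative 0 at x = 0 are assumptions made for illustration.

```python
import math


def relu(x):
    return max(0.0, x)


def relu_derivative(x):
    # 1 for positive inputs, 0 for negative ones; the value at exactly 0
    # is a convention (0 is assumed here).
    return 1.0 if x > 0 else 0.0


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def logistic_derivative(x):
    s = logistic(x)
    return s * (1.0 - s)


def tanh_derivative(x):
    return 1.0 - math.tanh(x) ** 2


for x in (-2.0, 0.5, 5.0):
    print(x, relu(x), relu_derivative(x), logistic_derivative(x), tanh_derivative(x))
```

For large positive inputs the sigmoid and tanh derivatives fall close to zero, while the rectifier's derivative stays at 1, which is one common explanation for why it eases the training of deeper networks.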

[29] The following is a simple pseudocode implementation of a single Threshold Logic Unit (TLU), which takes Boolean inputs (true or false) and returns a single Boolean output when activated.
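A TLU of this kind can be sketched in runnable Python rather than pseudocode. The sketch is illustrative only: the names `tlu`, `and_gate`, and `or_gate`, the unit weights, and the chosen thresholds are assumptions, not taken from the article's own listing.

```python
def tlu(inputs, weights, threshold):
    """Threshold Logic Unit over Boolean inputs.

    Sums the weights of the inputs that are True and fires (returns True)
    when that sum meets or exceeds the threshold.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(w for x, w in zip(inputs, weights) if x)
    return total >= threshold


# With unit weights, the threshold alone selects the logic function.
def and_gate(a, b):
    return tlu([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    return tlu([a, b], [1, 1], threshold=1)
```

With two unit-weight inputs, a threshold of 2 yields logical AND and a threshold of 1 yields logical OR, so changing the threshold alone changes which function the unit computes.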