Atmospheric model

An atmospheric model can supplement its governing equations with parameterizations for turbulent diffusion, radiation, moist processes (clouds and precipitation), heat exchange, soil, vegetation, surface water, the kinematic effects of terrain, and convection.

For specific locations, model output statistics combine climate information, output from numerical weather prediction, and current surface weather observations to develop statistical relationships that account for model bias and resolution issues.
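At their core, model output statistics are regression equations fitted separately for each location. The sketch below is deliberately minimal. It assumes a single predictor (raw model 2 m temperature) and ordinary least squares, whereas operational MOS uses multiple linear regression over many predictors. The function names `fit_linear_mos` and `apply_mos` and the training numbers are hypothetical.

```python
# Illustrative single-predictor MOS-style correction (hypothetical sketch).
# Fits observed = a + b * forecast by ordinary least squares.

def fit_linear_mos(forecasts, observations):
    """Return intercept a and slope b minimizing squared error of obs ~ a + b * fcst."""
    n = len(forecasts)
    if n != len(observations) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_f = sum(forecasts) / n
    mean_o = sum(observations) / n
    sxx = sum((f - mean_f) ** 2 for f in forecasts)
    if sxx == 0:
        raise ValueError("forecasts have zero variance")
    sxy = sum((f - mean_f) * (o - mean_o) for f, o in zip(forecasts, observations))
    b = sxy / sxx
    a = mean_o - b * mean_f
    return a, b

def apply_mos(a, b, forecast):
    """Apply the fitted linear correction to a new raw model forecast."""
    return a + b * forecast

# Hypothetical training pairs at one station: the raw model runs 1.5 deg C too cold.
model_t2m = [10.0, 12.0, 14.0, 16.0, 18.0]
observed_t2m = [11.5, 13.5, 15.5, 17.5, 19.5]
a, b = fit_linear_mos(model_t2m, observed_t2m)
print(a, b)                 # 1.5 1.0
print(apply_mos(a, b, 15.0))  # 16.5
```

Because the regression is fitted against observations at the site, the resulting correction absorbs both systematic model bias and local effects that the model grid cannot resolve.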

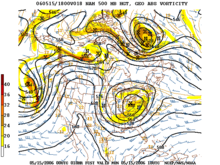

The main assumption made by the thermotropic model is that while the magnitude of the thermal wind may change, its direction does not change with respect to height, and thus the baroclinicity in the atmosphere can be simulated using the 500 mb (15 inHg) and 1,000 mb (30 inHg) geopotential height surfaces and the average thermal wind between them.
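The thermotropic model relies on the thermal wind, which follows from geostrophic balance. It blows parallel to the contours of the 1,000 to 500 mb thickness, and its speed is proportional to the thickness gradient: V_T = (g/f) k × ∇(Z500 − Z1000), where f = 2Ω sin φ is the Coriolis parameter. The Python sketch below evaluates this relation with centered differences on four hypothetical neighboring points. The function name and inputs are illustrative assumptions, not part of any particular model.

```python
import math

OMEGA = 7.292e-5  # Earth's rotation rate, s^-1
G = 9.81          # gravitational acceleration, m s^-2

def thermal_wind(h_north, h_south, h_east, h_west, dx, dy, lat_deg):
    """Geostrophic thermal wind (u_T, v_T) in m/s from 1000-500 mb thickness (m).

    Uses centered differences: dx and dy are the distances (m) between the
    east-west and north-south neighbor pairs, respectively.
    """
    f = 2.0 * OMEGA * math.sin(math.radians(lat_deg))  # Coriolis parameter
    if abs(f) < 1e-12:
        raise ValueError("geostrophic balance is undefined at the equator")
    dh_dx = (h_east - h_west) / dx
    dh_dy = (h_north - h_south) / dy
    u_t = -(G / f) * dh_dy  # westerly component
    v_t = (G / f) * dh_dx   # southerly component
    return u_t, v_t

# Hypothetical case: thicker (warmer) air to the south at 45 N.
u, v = thermal_wind(5400.0, 5500.0, 5450.0, 5450.0, dx=1.0e6, dy=1.0e6, lat_deg=45.0)
print(round(u, 2), round(v, 2))  # u is about 9.5 m/s (westerly), v is 0
```

With warmer air to the south, the thermal wind comes out westerly, as expected in the Northern Hemisphere.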

In this type of atmosphere, high and low pressure areas are centers of warm and cold temperature anomalies.

[7] The history of numerical weather prediction began in the 1920s through the efforts of Lewis Fry Richardson who utilized procedures developed by Vilhelm Bjerknes.

[13][14] The development of limited area (regional) models facilitated advances in forecasting the tracks of tropical cyclones as well as air quality in the 1970s and 1980s.

[15][16] Because the output of forecast models based on atmospheric dynamics requires corrections near ground level, model output statistics (MOS) were developed in the 1970s and 1980s for individual forecast points (locations).

[17][18] Even with the increasing power of supercomputers, the forecast skill of numerical weather models only extends to about two weeks into the future. Limitations in the density and quality of observations, together with the chaotic nature of the partial differential equations used to calculate the forecast, introduce errors that double every five days.
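The rule of thumb that errors double every five days implies exponential growth, E(t) = E0 · 2^(t/5), with t in days. The sketch below assumes exactly that idealization. Real forecast errors eventually saturate at climatological levels, so the formula only describes the early growth phase. The function names are hypothetical.

```python
import math

DOUBLING_TIME_DAYS = 5.0  # rule-of-thumb doubling time quoted in the text

def error_growth(initial_error, days, doubling_time=DOUBLING_TIME_DAYS):
    """Error after `days`, assuming unbounded exponential growth."""
    return initial_error * 2.0 ** (days / doubling_time)

def days_until(growth_factor, doubling_time=DOUBLING_TIME_DAYS):
    """Days for an error to grow by `growth_factor` under the same assumption."""
    return doubling_time * math.log2(growth_factor)

for day in (0, 5, 10, 15):
    print(day, error_growth(1.0, day))  # 1.0, 2.0, 4.0, 8.0
print(days_until(8.0))                  # 15.0
```

Under this idealization, an initial error grows eightfold in about 15 days. This illustrates why useful skill fades at around two weeks.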

On land, terrain maps available at resolutions down to 1 kilometer (0.6 mi) globally are used to help model atmospheric circulations within regions of rugged topography, in order to better depict features such as downslope winds, mountain waves and related cloudiness that affects incoming solar radiation.

[24] One main source of input is observations from radiosondes, instruments carried by weather balloons that rise through the troposphere and well into the stratosphere. The radiosondes measure various atmospheric parameters and transmit them to a fixed receiver.

[27] These observations are irregularly spaced, so they are processed by data assimilation and objective analysis methods, which perform quality control and obtain values at locations usable by the model's mathematical algorithms.
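One classic objective analysis technique is Cressman-style distance weighting. Each observation within an influence radius R of a grid point contributes with weight (R² − d²)/(R² + d²), where d is its distance from the point. The sketch below applies a single pass directly to the observations. This is a simplification, because Cressman's method is normally run as successive corrections to a background (first-guess) field, and the sketch omits quality control. Names and sample values are hypothetical.

```python
import math

def cressman_weight(distance, radius):
    """Cressman weight: (R^2 - d^2) / (R^2 + d^2) inside the radius, else 0."""
    if distance >= radius:
        return 0.0
    r2, d2 = radius * radius, distance * distance
    return (r2 - d2) / (r2 + d2)

def analyze_point(grid_x, grid_y, observations, radius):
    """Weighted average of observations (x, y, value) near one grid point.

    Returns None when no observation lies within the influence radius.
    """
    num = 0.0
    den = 0.0
    for ox, oy, value in observations:
        w = cressman_weight(math.hypot(ox - grid_x, oy - grid_y), radius)
        num += w * value
        den += w
    return num / den if den > 0.0 else None

# Hypothetical, irregularly spaced observations: (x, y, value).
obs = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0), (50.0, 50.0, 99.0)]
print(round(analyze_point(1.0, 0.0, obs, radius=5.0), 3))  # 15.0; the distant ob gets zero weight
```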

Research projects use reconnaissance aircraft to fly in and around weather systems of interest, such as tropical cyclones.

[32][33] Reconnaissance aircraft are also flown over the open oceans during the cold season into systems that cause significant uncertainty in forecast guidance, or that are expected to have a high impact on the downstream continent three to seven days into the future.

[35] Efforts to incorporate sea surface temperature into model initialization began in 1972 because of its role in modulating weather in the higher latitudes of the Pacific.

[36] A model is a computer program that produces meteorological information for future times at given locations and altitudes.

A typical cumulus cloud has a scale of less than 1 kilometer (0.62 mi). Representing it physically with the equations of fluid motion would require a grid spacing even finer than that.

More sophisticated schemes add enhancements, recognizing that only some portions of the box might convect and that entrainment and other processes occur.

[47] The formation of large-scale (stratus-type) clouds is more physically based: these clouds form when the relative humidity reaches some prescribed value.
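A relative-humidity threshold scheme of this kind can be sketched in a few lines. The example assumes a Magnus-type approximation for saturation vapor pressure (constants as in Bolton, 1980). It computes relative humidity from temperature and dewpoint and flags a stratiform cloud when the relative humidity reaches a prescribed critical value. The default `rh_crit=0.95` is a hypothetical choice. Real schemes differ both in the threshold and in whether they diagnose partial cloud cover.

```python
import math

def saturation_vapor_pressure_hpa(temp_c):
    """Magnus-type approximation (Bolton 1980 constants), roughly valid -30..35 deg C."""
    return 6.112 * math.exp(17.67 * temp_c / (temp_c + 243.5))

def relative_humidity(temp_c, dewpoint_c):
    """Relative humidity (0..1) as the ratio of actual to saturation vapor pressure."""
    return saturation_vapor_pressure_hpa(dewpoint_c) / saturation_vapor_pressure_hpa(temp_c)

def stratiform_cloud(temp_c, dewpoint_c, rh_crit=0.95):
    """Return True when grid-box relative humidity reaches the prescribed threshold."""
    return relative_humidity(temp_c, dewpoint_c) >= rh_crit

print(stratiform_cloud(10.0, 10.0))  # True: saturated grid box
print(stratiform_cloud(20.0, 5.0))   # False: RH is roughly 37 percent
```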

The amount of solar radiation reaching the ground, whether in rugged terrain or under variable cloudiness, is parameterized because the underlying radiative processes occur on the molecular scale.
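Part of the terrain effect is geometric: a sloped surface intercepts the direct solar beam at an angle that depends on its slope and the direction it faces. The sketch below uses the standard incidence-angle relation cos i = cos β cos θz + sin β sin θz cos(φs − γ). Here β is the slope angle, θz the solar zenith angle, φs the solar azimuth, and γ the slope aspect. The sketch ignores shading by surrounding terrain, diffuse radiation, and clouds. The function names and sample values are hypothetical.

```python
import math

def slope_incidence_cosine(zenith_deg, sun_azimuth_deg, slope_deg, aspect_deg):
    """Cosine of the angle between the direct solar beam and the slope normal.

    Sun azimuth and slope aspect must share one convention (e.g. degrees clockwise
    from north); aspect is the compass direction the slope faces.
    """
    z, b = math.radians(zenith_deg), math.radians(slope_deg)
    daz = math.radians(sun_azimuth_deg - aspect_deg)
    return math.cos(b) * math.cos(z) + math.sin(b) * math.sin(z) * math.cos(daz)

def direct_beam_on_slope(beam_normal_wm2, zenith_deg, sun_azimuth_deg, slope_deg, aspect_deg):
    """Direct-beam flux (W m^-2) on the slope; zero if the sun is down or behind the slope."""
    if zenith_deg >= 90.0:
        return 0.0
    cos_i = slope_incidence_cosine(zenith_deg, sun_azimuth_deg, slope_deg, aspect_deg)
    return beam_normal_wm2 * max(0.0, cos_i)

# Hypothetical case: 800 W m^-2 beam, sun 60 degrees from zenith in the south.
print(direct_beam_on_slope(800.0, 60.0, 180.0, 30.0, 180.0))  # about 693 (south-facing slope)
print(direct_beam_on_slope(800.0, 60.0, 180.0, 40.0, 0.0))    # 0.0 (north-facing slope in shadow)
```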

Regional models use finer grid spacing to explicitly resolve smaller-scale meteorological phenomena, which is affordable because their smaller domain reduces computational demands.
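A rough estimate shows why domain size matters. At fixed vertical resolution, the number of grid columns scales as area/Δx². A time step limited by the CFL condition shrinks in proportion to Δx, so total cost scales roughly as area/Δx³. The sketch below encodes only that scaling assumption and ignores physics cost, I/O, and boundary handling. The function name and example domain sizes are hypothetical.

```python
def relative_cost(domain_area_km2, grid_spacing_km, ref_area_km2, ref_spacing_km):
    """Cost relative to a reference run, assuming cost ~ area / dx^3.

    Horizontal columns scale as area / dx^2; a CFL-limited time step scales
    with dx, adding another factor of 1 / dx. Vertical levels are held fixed.
    """
    columns_ratio = (domain_area_km2 / grid_spacing_km ** 2) / (ref_area_km2 / ref_spacing_km ** 2)
    steps_ratio = ref_spacing_km / grid_spacing_km
    return columns_ratio * steps_ratio

EARTH_AREA_KM2 = 5.1e8  # approximate surface area of the Earth

# Hypothetical 5 km regional domain of 5 million km^2 vs. a 25 km global model.
print(round(relative_cost(5.0e6, 5.0, EARTH_AREA_KM2, 25.0), 2))          # about 1.2
# The same 5 km spacing applied globally.
print(round(relative_cost(EARTH_AREA_KM2, 5.0, EARTH_AREA_KM2, 25.0), 1))  # about 125
```

Under this assumption, halving the grid spacing makes a run roughly eight times more expensive. Shrinking the domain is what lets a regional model run at kilometer-scale spacing for roughly the cost of a much coarser global run.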

[17] The United States Air Force developed its own set of MOS, based on its dynamical weather model, by 1983.

[62] In 1956, Norman Phillips developed a mathematical model that realistically depicted monthly and seasonal patterns in the troposphere.

[66][67][68] In 1986, efforts began to initialize and model soil and vegetation types, resulting in more realistic forecasts.

[70] In 1970, a private company in the U.S. developed the regional Urban Airshed Model (UAM), which was used to forecast the effects of air pollution and acid rain.

In the mid- to late-1970s, the United States Environmental Protection Agency took over the development of the UAM and then used the results from a regional air pollution study to improve it.

[15] Despite the constantly improving dynamical model guidance made possible by increasing computational power, it was not until the 1980s that numerical weather prediction (NWP) showed skill in forecasting the track of tropical cyclones.