Biological neuron model

A mathematically simpler "integrate-and-fire" model, first studied by Lapicque in 1907, greatly simplifies the description of ion channel and membrane potential dynamics.

[8][9][10][11] The Hodgkin–Huxley model consists of a set of nonlinear differential equations describing the behavior of the ion channels that span the cell membrane of the squid giant axon.
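As a rough illustration of how these equations are integrated in practice, the sketch below uses forward Euler with the commonly quoted textbook parameters for the squid axon, shifted so that rest sits near −65 mV. The parameter values, the function names and the 0 mV spike criterion are assumptions of this sketch, not details taken from the cited sources.

```python
import math

# Textbook squid-axon parameters (assumed), shifted so that rest sits near -65 mV.
# Units: mV, ms, uF/cm^2, mS/cm^2, uA/cm^2.
C_M = 1.0
G_NA, G_K, G_L = 120.0, 36.0, 0.3
E_NA, E_K, E_L = 50.0, -77.0, -54.387


def _linexp(x, y):
    """x / (1 - exp(-x / y)), using its limit y at x = 0 (removable singularity)."""
    if abs(x) < 1e-9:
        return y
    return x / (1.0 - math.exp(-x / y))


def rates(v):
    """Opening (alpha) and closing (beta) rates of the m, h, n gates in 1/ms."""
    am = 0.1 * _linexp(v + 40.0, 10.0)
    bm = 4.0 * math.exp(-(v + 65.0) / 18.0)
    ah = 0.07 * math.exp(-(v + 65.0) / 20.0)
    bh = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    an = 0.01 * _linexp(v + 55.0, 10.0)
    bn = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return am, bm, ah, bh, an, bn


def simulate_hh(i_ext, t_max=100.0, dt=0.01, v0=-65.0):
    """Forward-Euler integration for a constant current; returns spike times in ms."""
    am, bm, ah, bh, an, bn = rates(v0)
    # start every gate at its steady-state value for the initial voltage
    m, h, n = am / (am + bm), ah / (ah + bh), an / (an + bn)
    v, spikes = v0, []
    for step in range(int(round(t_max / dt))):
        am, bm, ah, bh, an, bn = rates(v)
        i_ion = (G_NA * m**3 * h * (v - E_NA)   # sodium current
                 + G_K * n**4 * (v - E_K)       # potassium current
                 + G_L * (v - E_L))             # leak current
        v_new = v + dt * (i_ext - i_ion) / C_M
        m += dt * (am * (1.0 - m) - bm * m)
        h += dt * (ah * (1.0 - h) - bh * h)
        n += dt * (an * (1.0 - n) - bn * n)
        if v < 0.0 <= v_new:  # count an upward crossing of 0 mV as a spike
            spikes.append((step + 1) * dt)
        v = v_new
    return spikes
```

Under these assumptions a constant drive of 10 µA/cm² produces repetitive firing, while zero input leaves the membrane at rest.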

Because this basic model has no leak, if it receives a below-threshold short current pulse at some time, it retains that voltage boost indefinitely, until another input later makes it fire.
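A minimal sketch of this memory effect, assuming the leak-free form C_m dV/dt = I(t) with a threshold and a reset to zero. Units are arbitrary, and the function name and parameter values are illustrative.

```python
def perfect_if(current, dt, c_m=1.0, v_th=1.0, v_reset=0.0):
    """Leak-free integrate-and-fire: C_m dV/dt = I(t), with threshold and reset.

    current: one input value per time step (arbitrary units).
    Returns (voltage after each step, indices of the steps that produced a spike).
    """
    v = v_reset
    trace, spikes = [], []
    for k, i_k in enumerate(current):
        v += dt * i_k / c_m  # no leak term: injected charge simply accumulates
        if v >= v_th:
            spikes.append(k)
            v = v_reset
        trace.append(v)
    return trace, spikes


# A sub-threshold pulse, a long silent gap, then a second identical pulse.
pulse = [0.6] * 10
trace, spikes = perfect_if(pulse + [0.0] * 100 + pulse, dt=0.1)
```

The first pulse lifts V to 0.6, the value is held unchanged through the silent gap, and the second pulse then pushes V past threshold.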

The model equation is valid for arbitrary time-dependent input until a threshold Vth is reached; thereafter the membrane potential is reset.

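Assuming a reset to zero, the firing frequency of the leaky model, with membrane resistance $R_m$, membrane capacitance $C_m$, threshold $V_\mathrm{th}$ and refractory period $t_\mathrm{ref}$, thus looks like

$$f(I) = \begin{cases} 0, & I \le I_\mathrm{th} \\ \left[t_\mathrm{ref} - R_m C_m \ln\left(1 - \dfrac{V_\mathrm{th}}{I R_m}\right)\right]^{-1}, & I > I_\mathrm{th} \end{cases}$$

where $I_\mathrm{th} = V_\mathrm{th}/R_m$. For large input currents this converges to the previous leak-free model with the refractory period, $f(I) = I/(C_m V_\mathrm{th} + t_\mathrm{ref} I)$.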
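The closed-form rate can be checked against a direct simulation. This sketch assumes the leaky equation C_m dV/dt = −V/R_m + I, a reset to zero, and a refractory period t_ref during which V is clamped, so the expected rate above I R_m > V_th is 1/(t_ref − R_m C_m ln(1 − V_th/(I R_m))). The SI parameter values are illustrative.

```python
import math


def lif_rate_analytic(i, r_m, c_m, v_th, t_ref):
    """Closed-form rate (Hz) of a leaky integrate-and-fire neuron reset to 0."""
    if i * r_m <= v_th:
        return 0.0  # steady-state voltage I*R_m never reaches threshold
    return 1.0 / (t_ref - r_m * c_m * math.log(1.0 - v_th / (i * r_m)))


def leak_free_rate(i, c_m, v_th, t_ref):
    """Rate of the leak-free model with a refractory period."""
    return i / (c_m * v_th + t_ref * i)


def lif_rate_simulated(i, r_m, c_m, v_th, t_ref, dt=1e-5, t_max=2.0):
    """Forward-Euler simulation of C_m dV/dt = -V/R_m + I; returns spikes / t_max."""
    tau = r_m * c_m
    ref_steps = int(round(t_ref / dt))
    v, hold, n_spikes = 0.0, 0, 0
    for _ in range(int(round(t_max / dt))):
        if hold > 0:  # refractory: voltage clamped at the reset value
            hold -= 1
            continue
        v += dt * (-v + r_m * i) / tau
        if v >= v_th:
            n_spikes += 1
            v, hold = 0.0, ref_steps
    return n_spikes / t_max


# SI units: 10 MOhm, 1 nF (tau = 10 ms), 15 mV threshold, 2 ms refractory period.
params = dict(r_m=1e7, c_m=1e-9, v_th=0.015, t_ref=0.002)
```

With these values, a 2 nA input gives about 63 Hz both analytically and in simulation, a 1 nA input never reaches threshold, and at very large currents the leaky and leak-free rates become practically indistinguishable.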

[18][19] The most significant disadvantage of this model is that it does not contain neuronal adaptation, so it cannot describe an experimentally measured spike train in response to a constant input current.

[20] This disadvantage is removed in generalized integrate-and-fire models that also contain one or several adaptation-variables and are able to predict spike times of cortical neurons under current injection to a high degree of accuracy.

[21][22][23] Neuronal adaptation refers to the fact that even in the presence of a constant current injection into the soma, the intervals between output spikes increase.
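A minimal sketch of adaptation, assuming a single adaptation current w that jumps by a fixed amount b at each spike and decays between spikes. This is one of several possible adaptation mechanisms, and all names and values here are illustrative.

```python
def adaptive_lif_isis(i_ext, t_max=1.0, dt=1e-4, tau_m=0.01, r_m=1e7,
                      v_th=0.015, tau_w=0.1, b=1e-10):
    """Inter-spike intervals (s) of an LIF neuron with one adaptation current w.

    Each spike increments w by b; w decays with time constant tau_w and is
    subtracted from the input, so the drive weakens after every spike.
    """
    v, w, t_last, isis = 0.0, 0.0, None, []
    for k in range(int(round(t_max / dt))):
        v += dt * (-v + r_m * (i_ext - w)) / tau_m
        w -= dt * w / tau_w  # exponential decay of the adaptation current
        if v >= v_th:
            t = (k + 1) * dt
            if t_last is not None:
                isis.append(t - t_last)
            t_last, v = t, 0.0
            w += b  # spike-triggered adaptation increment
    return isis


isis = adaptive_lif_isis(2e-9)  # constant 2 nA step current
```

Under a constant current the intervals grow from the first to the last and then settle to a steady value, which is the behavior a non-adaptive integrate-and-fire model cannot reproduce.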

[22][23] Recent advances in computational and theoretical fractional calculus have led to a new form of model called the fractional-order leaky integrate-and-fire model.
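The fractional derivative gives the voltage a memory of its whole past. As a hedged illustration, the sketch below discretizes τ_m^α D^α u = −u + R_m I with the Grünwald–Letnikov scheme. This discretization is our choice and not necessarily the one used in the cited work. No threshold or reset is applied, and the simulation starts from rest (u = 0), so the Riemann–Liouville and Caputo definitions coincide. For α = 1 it reduces to ordinary forward Euler.

```python
import numpy as np


def gl_weights(alpha, n):
    """Grünwald–Letnikov weights w_k = (-1)^k * binomial(alpha, k) for k = 0..n."""
    w = np.empty(n + 1)
    w[0] = 1.0
    for k in range(1, n + 1):
        w[k] = w[k - 1] * (1.0 - (alpha + 1.0) / k)
    return w


def fractional_lif_subthreshold(alpha, i_ext, t_max=0.05, dt=1e-4,
                                tau_m=0.01, r_m=1e7):
    """Explicit G-L scheme for tau_m^alpha D^alpha u = -u + R_m I with u(0) = 0.

    u is the voltage measured from rest. No threshold or reset is applied.
    """
    n = int(round(t_max / dt))
    w = gl_weights(alpha, n)
    u = np.zeros(n + 1)
    h_alpha = (dt / tau_m) ** alpha  # step size in units of tau_m, raised to alpha
    for j in range(1, n + 1):
        # memory term sum_{k=1}^{j} w_k u_{j-k}: every past value contributes
        memory = np.dot(w[1:j + 1], u[j - 1::-1])
        u[j] = h_alpha * (-u[j - 1] + r_m * i_ext) - memory
    return u
```

With α = 0.8 the voltage approaches its steady state more slowly at late times than with α = 1, reflecting the long memory of the fractional derivative.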

[39][40] When used in spiking neural networks (SNNs) trained with surrogate gradients, this neuron creates an adaptive learning rate, yielding higher accuracy, faster convergence and more flexible long short-term memory than existing counterparts in the literature.

Cortical neurons in experiments are found to respond reliably to time-dependent input, albeit with a small degree of variation between one trial and the next if the same stimulus is repeated.

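For discrete-time implementations with time step dt, the voltage updates are[27]

$$\Delta V = \frac{dt}{\tau_m}\left(-V + R_m I(t)\right) + \sigma\sqrt{\frac{dt}{\tau_m}}\,y$$

where $\tau_m$ is the membrane time constant, $\sigma$ is the noise amplitude, and y is drawn from a Gaussian distribution with zero mean and unit variance.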
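A sketch of this update rule in a leaky integrate-and-fire neuron, assuming the update ΔV = (dt/τ_m)(−V + R_m I) + σ sqrt(dt/τ_m) y, a voltage measured from rest, and a reset to zero at threshold. The parameter values, including the noise amplitude sigma, are illustrative.

```python
import math

import numpy as np


def noisy_lif_spike_times(i_ext, sigma, seed, t_max=0.5, dt=1e-4,
                          tau_m=0.01, r_m=1e7, v_th=0.015):
    """Euler–Maruyama LIF with diffusive noise; V is measured from rest, reset to 0."""
    rng = np.random.default_rng(seed)
    n = int(round(t_max / dt))
    y = rng.standard_normal(n)  # zero-mean, unit-variance Gaussian samples
    noise_scale = sigma * math.sqrt(dt / tau_m)
    v, spikes = 0.0, []
    for k in range(n):
        v += dt / tau_m * (-v + r_m * i_ext) + noise_scale * y[k]
        if v >= v_th:
            spikes.append((k + 1) * dt)
            v = 0.0
    return spikes
```

Runs with the same seed are identical, and different seeds give different spike trains for the same input. With sigma = 0 the result no longer depends on the seed, so the noise term alone accounts for the trial-to-trial variability.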

[46] Integrate-and-fire models with output noise can be used to predict the peristimulus time histogram (PSTH) of real neurons under arbitrary time-dependent input.
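A sketch of how a PSTH can be estimated from such a model. It assumes that output noise is implemented as escape noise with an exponential escape rate ρ = ρ0 exp((V − V_th)/Δ); this functional form and all parameter values are assumptions made for illustration. The procedure simulates many trials with the same input, counts spikes per time bin, and divides by the number of trials and the bin width.

```python
import numpy as np


def escape_lif_psth(i_of_t, n_trials=200, dt=1e-4, tau_m=0.01, r_m=1e7,
                    v_th=0.015, rho0=100.0, delta=0.002, bin_width=0.005, seed=0):
    """PSTH (spikes/s) of an LIF neuron with escape noise over repeated trials.

    i_of_t holds one input value per time step; every trial gets the same input,
    and spikes are emitted stochastically with rate rho0 * exp((V - v_th) / delta).
    """
    rng = np.random.default_rng(seed)
    n = len(i_of_t)
    v = np.zeros(n_trials)  # one membrane potential per trial, updated together
    counts = np.zeros(n)
    for k in range(n):
        v += dt / tau_m * (-v + r_m * i_of_t[k])
        hazard = rho0 * np.exp((v - v_th) / delta)  # escape rate in 1/s
        fired = rng.random(n_trials) < -np.expm1(-hazard * dt)  # P = 1 - exp(-rho*dt)
        counts[k] = fired.sum()
        v[fired] = 0.0  # reset the trials that spiked
    steps = int(round(bin_width / dt))
    n_bins = n // steps
    per_bin = counts[: n_bins * steps].reshape(n_bins, steps).sum(axis=1)
    return per_bin / (n_trials * bin_width)


stimulus = np.concatenate([np.zeros(1000), np.full(1000, 2e-9)])  # 0.1 s off, 0.1 s on
psth = escape_lif_psth(stimulus)
```

The estimated rate stays near zero before the current step and rises once the current is switched on.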

[22] For non-adaptive integrate-and-fire neurons, the interval distribution under constant stimulation can be calculated from stationary renewal theory.
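For a renewal process, the interval distribution follows from a hazard ρ(s) that depends only on the time s since the last spike: P0(s) = ρ(s) exp(−∫_0^s ρ(s′) ds′). The sketch below evaluates this numerically. As a hypothetical example, it assumes an exponential escape rate and the free voltage trajectory after a reset to zero; the parameter values are illustrative.

```python
import numpy as np


def isi_density(i_ext, s_max=0.2, ds=1e-5, tau_m=0.01, r_m=1e7,
                v_th=0.015, rho0=100.0, delta=0.002):
    """ISI density P0(s) = rho(s) * exp(-integral_0^s rho) for a constant input.

    s is the time since the last spike; after a reset to 0 the free voltage
    u(s) = R_m * I * (1 - exp(-s / tau_m)) fixes the hazard rho(s).
    """
    s = np.arange(0.0, s_max, ds)
    u = r_m * i_ext * (1.0 - np.exp(-s / tau_m))
    rho = rho0 * np.exp((u - v_th) / delta)  # hazard depends only on s
    # cumulative trapezoidal integral of the hazard, starting at 0
    cum = np.concatenate(([0.0], np.cumsum(0.5 * (rho[1:] + rho[:-1]) * ds)))
    survivor = np.exp(-cum)
    return s, rho * survivor


s, p0 = isi_density(2e-9)
total = np.sum(0.5 * (p0[1:] + p0[:-1]) * (s[1] - s[0]))  # should be close to 1
```

Because the survivor function has essentially reached zero by the end of the window, the density integrates to approximately one.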

[46][23] The voltage V(t) can be interpreted as the result of an integration of the differential equation of a leaky integrate-and-fire model coupled to an arbitrary number of spike-triggered adaptation variables.
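A sketch of this view, usually known as the Spike Response Model: the voltage is a filtered version of the input plus a stereotyped kernel added after each spike. Here the input kernel is assumed to be the discrete impulse response of a forward-Euler leaky integrator, so that without spikes it reproduces the LIF trace. Three further simplifying assumptions apply: a single exponential spike-triggered kernel eta, its parameter values, and spike times passed in rather than generated.

```python
import numpy as np


def srm_voltage(i_ext, spike_steps, dt, tau_m=0.01, r_m=1e7,
                eta_amp=-0.015, tau_eta=0.05):
    """Voltage as filtered input plus spike-triggered kernels.

    kappa is the discrete impulse response of a forward-Euler leaky integrator,
    so the input part reproduces the sub-threshold LIF trace. Each spike
    (given as a step index) adds eta(s) = eta_amp * exp(-s / tau_eta).
    """
    n = len(i_ext)
    lags = np.arange(n)
    kappa = (dt * r_m / tau_m) * (1.0 - dt / tau_m) ** lags
    v = np.convolve(i_ext, kappa)[:n]  # input filtered by kappa
    for f in spike_steps:
        v[f:] += eta_amp * np.exp(-lags[: n - f] * dt / tau_eta)
    return v


def euler_lif_subthreshold(i_ext, dt, tau_m=0.01, r_m=1e7):
    """Reference: integrate the LIF equation directly, without a threshold."""
    v, trace = 0.0, []
    for i_k in i_ext:
        v += dt * (-v + r_m * i_k) / tau_m
        trace.append(v)
    return np.array(trace)
```

Without spikes the two functions agree to rounding error. Adding a spike at step f changes the trace only from step f onward, by eta_amp at the spike itself.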

They are mainly used for didactic reasons in teaching but are not considered valid neuron models for large-scale simulations or data fitting.

[64] The experimental support is weak, but the model is useful as a didactic tool to introduce dynamics of spike generation through phase plane analysis.
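The phase-plane approach can be illustrated with one two-dimensional model. The sketch below assumes the FitzHugh–Nagumo equations dv/dt = v − v³/3 − w + I and dw/dt = ε(v + a − b w) with the classic values a = 0.7, b = 0.8, ε = 0.08. This model is chosen here only as an example and is not necessarily the one the cited text discusses. The code intersects the two nullclines to find the fixed point and classifies its stability from the eigenvalues of the Jacobian.

```python
import numpy as np

A, B, EPS = 0.7, 0.8, 0.08  # classic FitzHugh–Nagumo parameter values (assumed)


def fixed_point(i_ext):
    """Intersection of the v-nullcline w = v - v^3/3 + I and w-nullcline w = (v + a)/b."""
    # substituting the w-nullcline gives v^3 + (3/b - 3) v + (3a/b - 3I) = 0
    roots = np.roots([1.0, 0.0, 3.0 / B - 3.0, 3.0 * A / B - 3.0 * i_ext])
    v = roots[np.argmin(np.abs(roots.imag))].real  # the single real root for b < 1
    return v, (v + A) / B


def is_stable(i_ext):
    """Linear stability of the fixed point: all Jacobian eigenvalues have Re < 0."""
    v, _ = fixed_point(i_ext)
    jac = np.array([[1.0 - v**2, -1.0],
                    [EPS, -EPS * B]])
    return bool(np.all(np.linalg.eigvals(jac).real < 0))
```

With these values the fixed point near v ≈ −1.2 is stable at I = 0, and it has lost stability by I = 0.5, where trajectories settle onto a limit cycle, i.e. repetitive firing.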

This makes the Hindmarsh–Rose neuron model very useful: it is still simple, yet it allows a good qualitative description of the many different firing patterns of the action potential observed in experiments, in particular bursting.
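A minimal forward-Euler sketch of the Hindmarsh–Rose equations dx/dt = y − a x³ + b x² − z + I, dy/dt = c − d x² − y and dz/dt = r(s(x − x_R) − z). It uses commonly quoted parameter values (a = 1, b = 3, c = 1, d = 5, s = 4, x_R = −1.6, small r). The input I = 2, the step size and the spike-counting threshold are illustrative choices. The slow variable z switches the fast spiking on and off, and which pattern appears (tonic spiking, bursting, irregular firing) depends on I and r.

```python
import numpy as np


def hindmarsh_rose(i_ext=2.0, t_max=1000.0, dt=0.01, a=1.0, b=3.0, c=1.0,
                   d=5.0, r=0.001, s=4.0, x_r=-1.6):
    """Forward-Euler trace of the membrane variable x of the Hindmarsh–Rose model.

    x: membrane potential, y: fast recovery variable, z: slow adaptation current.
    """
    n = int(round(t_max / dt))
    x, y, z = x_r, c - d * x_r**2, 0.0  # start with y on its nullcline and z = 0
    xs = np.empty(n)
    for k in range(n):
        dx = y - a * x**3 + b * x**2 - z + i_ext
        dy = c - d * x**2 - y
        dz = r * (s * (x - x_r) - z)  # slow: r is small
        x, y, z = x + dt * dx, y + dt * dy, z + dt * dz
        xs[k] = x
    return xs


def count_spikes(xs, threshold=1.0):
    """Number of upward crossings of the threshold."""
    above = xs >= threshold
    return int(np.count_nonzero(above[1:] & ~above[:-1]))
```

Plotting `xs` for several values of `i_ext` and `r` is a quick way to explore the different firing patterns.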

[31] The models in this category were derived following experiments involving natural stimulation such as light, sound, touch, or odor.

[27] Importantly, it is possible to fit parameters of the age-dependent point process model so as to describe not just the PSTH response, but also the interspike-interval statistics.
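A sketch of sampling from an age-dependent point process. It assumes a hazard that factorizes into a stimulus-driven rate and a recovery function of the time since the last spike (the "age"); the product form, the exponential recovery and all values are illustrative assumptions. The rate function drives the PSTH, while the recovery function shapes the interspike-interval statistics.

```python
import numpy as np


def simulate_age_dependent(rate_fn, t_max, dt=1e-4, t_abs=0.002,
                           tau_rec=0.005, seed=0):
    """Spike times from the hazard rho(t, age) = rate_fn(t) * recovery(age).

    age is the time since the last spike. recovery(age) is 0 during an absolute
    refractory period t_abs, then relaxes exponentially to 1 with tau_rec.
    """
    rng = np.random.default_rng(seed)
    spikes, last = [], -np.inf
    for k in range(int(round(t_max / dt))):
        t = k * dt
        age = t - last
        recovery = 0.0 if age < t_abs else 1.0 - np.exp(-(age - t_abs) / tau_rec)
        p_spike = -np.expm1(-rate_fn(t) * recovery * dt)  # 1 - exp(-rho * dt)
        if rng.random() < p_spike:
            spikes.append(t)
            last = t
    return np.array(spikes)


# A rate that is modulated in time like a stimulus-locked response.
spikes = simulate_age_dependent(lambda t: 200.0 * (1.0 + np.sin(2 * np.pi * 5 * t)), t_max=2.0)
isis = np.diff(spikes)
```

No interval is shorter than the absolute refractory period, regardless of how high the stimulus-driven rate becomes.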

Other predictions by this model include: 1) The averaged evoked response potential (ERP) due to the population of many neurons in unfiltered measurements resembles the firing rate.

This range of mediation produces the following current dynamics:

$$I(t, V) = \bar{g}\,[O]\,\big(V(t) - E\big)$$

where ḡ is the maximal conductance (around 1 S)[8][17] and E is the equilibrium potential of the given ion or transmitter (AMPA, NMDA, Cl, or K), while [O] describes the fraction of open receptors.
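A sketch of the synaptic current I = ḡ[O](V − E). It assumes a simple two-state kinetic scheme for the open fraction, d[O]/dt = α[T](1 − [O]) − β[O], driven by a brief transmitter pulse [T]. The kinetic scheme, the rate constants and the units are illustrative assumptions, not values from the cited sources.

```python
def synaptic_current(transmitter, v, dt, g_max=1.0, e_rev=0.0,
                     alpha=1.1, beta=0.19):
    """Two-state receptor kinetics driving I = g_max * [O] * (V - E).

    transmitter: concentration [T] per step (mM); v: membrane potential per step (mV);
    dt in ms. [O] obeys d[O]/dt = alpha*[T]*(1 - [O]) - beta*[O].
    """
    o, o_trace, i_trace = 0.0, [], []
    for t_conc, v_k in zip(transmitter, v):
        o += dt * (alpha * t_conc * (1.0 - o) - beta * o)  # receptor opening/closing
        o_trace.append(o)
        i_trace.append(g_max * o * (v_k - e_rev))
    return o_trace, i_trace


dt = 0.01  # ms
pulse = [1.0] * 100 + [0.0] * 2000  # 1 mM of transmitter for 1 ms, then washout
o_trace, i_trace = synaptic_current(pulse, [-65.0] * len(pulse), dt)
```

With V = −65 mV and E = 0, the current is inward (negative) while receptors are open, and it decays as [O] relaxes after the transmitter is removed.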

The dynamics of this more complicated model have been well studied experimentally and produce important results in terms of very quick synaptic potentiation and depression, that is, fast short-term learning.

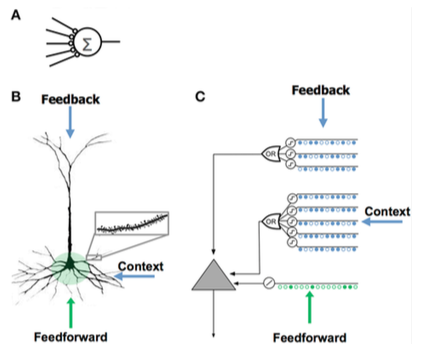

The HTM neuron model was developed by Jeff Hawkins and researchers at Numenta and is based on a theory called Hierarchical Temporal Memory, originally described in the book On Intelligence.

The neuron is bounded by an insulating cell membrane that can maintain a concentration of charged ions on either side; this separation of charge across the membrane gives it a capacitance Cm.
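As a worked example of this capacitance, the sketch below assumes the commonly quoted specific membrane capacitance of about 1 µF/cm² and a spherical soma; both are simplifications.

```python
import math

SPECIFIC_CAPACITANCE_UF_PER_CM2 = 1.0  # commonly quoted value for cell membranes


def soma_capacitance_pf(diameter_um):
    """Membrane capacitance (pF) of a spherical soma with the given diameter (um)."""
    diameter_cm = diameter_um * 1e-4
    area_cm2 = math.pi * diameter_cm**2  # surface area of a sphere: pi * d^2
    return SPECIFIC_CAPACITANCE_UF_PER_CM2 * area_cm2 * 1e6  # uF -> pF
```

A 20 µm soma has an area of about 1.26 × 10⁻⁵ cm², giving roughly 12.6 pF.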

[19] If the input current is constant, most neurons emit a regular spike train after an initial period of adaptation or bursting.

The frequency of regular firing in response to a constant current I is described by the frequency-current relation, which corresponds to the neuron's transfer function from input current to output firing rate.

Linear cable theory describes the dendritic arbor of a neuron as a cylindrical structure undergoing a regular pattern of bifurcation, like branches in a tree.

We can iterate these equations through the tree until we get to the point where the dendrites connect to the cell body (soma), where the conductance ratio is $B_\mathrm{in,stem}$.
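One standard way to carry out this iteration is Rall's recursion, assumed here to be the kind of recursion the text refers to. Each segment of electrotonic length X (its length divided by its own length constant λ) transforms the conductance ratio at its distal end into B_in = (B_out + tanh X)/(1 + B_out tanh X). At a branch point, the children's B_in values are summed after weighting by (d_child/d_parent)^{3/2}. The `Segment` class below is a hypothetical helper for this sketch.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Segment:
    """Cylindrical cable segment; X = physical length / length constant lambda."""
    diameter: float
    electrotonic_length: float
    children: list = field(default_factory=list)


def b_in(seg: Segment) -> float:
    """Input conductance of seg relative to a semi-infinite cable of equal diameter."""
    # The children load the distal end; conductances scale with diameter ** 1.5.
    # With no children the end is sealed and b_out = 0.
    b_out = sum(b_in(ch) * (ch.diameter / seg.diameter) ** 1.5 for ch in seg.children)
    t = math.tanh(seg.electrotonic_length)
    return (b_out + t) / (1.0 + b_out * t)
```

A sealed cylinder gives tanh X. A symmetric tree whose daughters obey the 3/2 power rule gives the same value as a single cylinder of the combined electrotonic length, which is the basis for reducing such trees to an equivalent cylinder.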

[105][106] For static inputs, it is sometimes possible to reduce the number of compartments, increasing the computational speed, and yet retain the salient electrical characteristics.