Cache replacement policies

When the cache is full, the algorithm must choose which items to discard to make room for new data.

The average memory reference time is[1] where A cache has two primary figures of merit: latency and hit ratio.

More efficient replacement policies track more usage information to improve the hit rate for a given cache size.

Faster replacement strategies typically track of less usage information—or, with a direct-mapped cache, no information—to reduce the time required to update the information.

The practical minimum can be calculated after experimentation, and the effectiveness of a chosen cache algorithm can be compared.

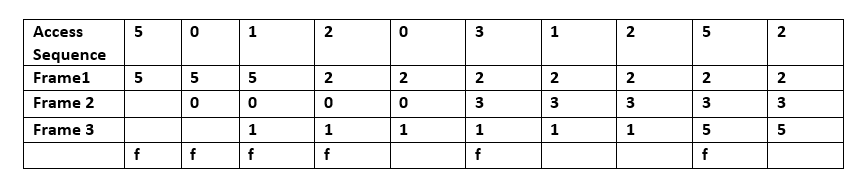

[4] With this algorithm, the cache behaves like a FIFO queue; it evicts blocks in the order in which they were added, regardless of how often or how many times they were accessed before.

SIEVE uses a single FIFO queue and uses a moving hand to select objects to evict.

The eviction hand points to the tail of the queue at the beginning and moves toward the head over time.

Compared with the CLOCK eviction algorithm, retained objects in SIEVE stay in the old position.

Time-aware, least-recently-used (TLRU)[9] is a variant of LRU designed for when the contents of a cache have a valid lifetime.

At the 11th VLDB conference, Chou and DeWitt said: "When a file is being repeatedly scanned in a [looping sequential] reference pattern, MRU is the best replacement algorithm.

"[10] Researchers presenting at the 22nd VLDB conference noted that for random access patterns and repeated scans over large datasets (also known as cyclic access patterns), MRU cache algorithms have more hits than LRU due to their tendency to retain older data.

The size limit of the protected segment is an SLRU parameter which varies according to I/O workload patterns.

When data must be discarded from the cache, lines are obtained from the LRU end of the probationary segment.

For CPU caches with large associativity (generally > four ways), the implementation cost of LRU becomes prohibitive.

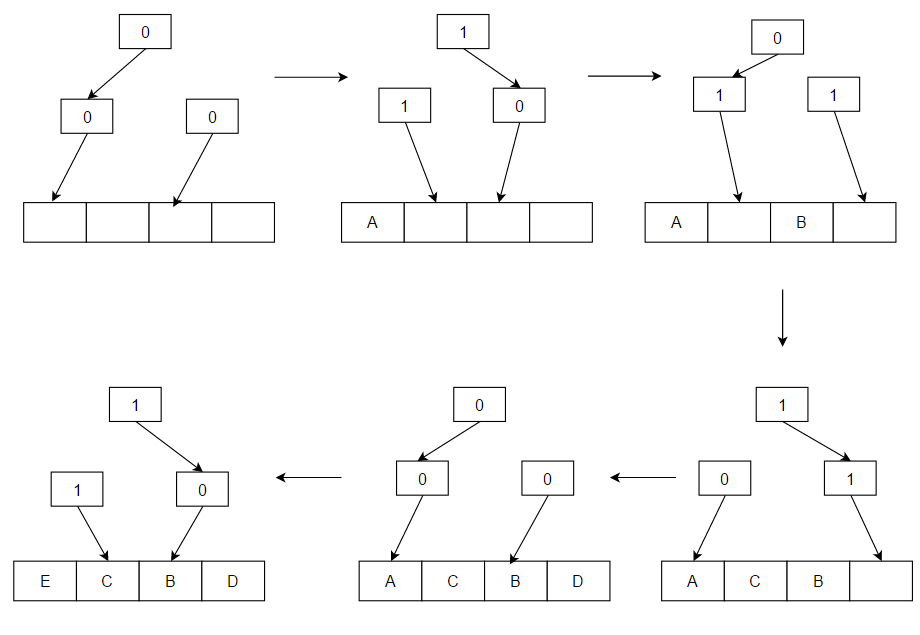

Bits work as a binary tree of one-bit pointers which point to a less-recently-used sub-tree.

[18] S3-FIFO demonstrates that FIFO queues are sufficient to design efficient and scalable eviction algorithms.

Besides, on web cache workloads, S3-FIFO achieves the lowest miss ratio among 11 state-of-the-art algorithms the authors compared with.

[20] RRIP[21] is a flexible policy, proposed by Intel, which attempts to provide good scan resistance while allowing older cache lines that have not been reused to be evicted.

The RRPV is usually high on insertion; if a line is not reused soon, it will be evicted to prevent scans (large amounts of data used only once) from filling the cache.

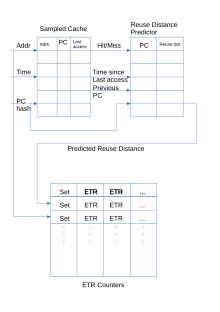

This allows the policy to determine which lines should have been cached and which should not, predicting whether an instruction is cache-friendly or cache-averse.

Mockingjay keeps a sampled cache of unique accesses, the PCs that produced them, and their timestamps.

When a line in the sampled cache is accessed again, the time difference will be sent to the reuse distance predictor.

A number of policies have attempted to use perceptrons, markov chains or other types of machine learning to predict which line to evict.

Reuse distance is a metric for dynamically ranking accessed pages to make a replacement decision.

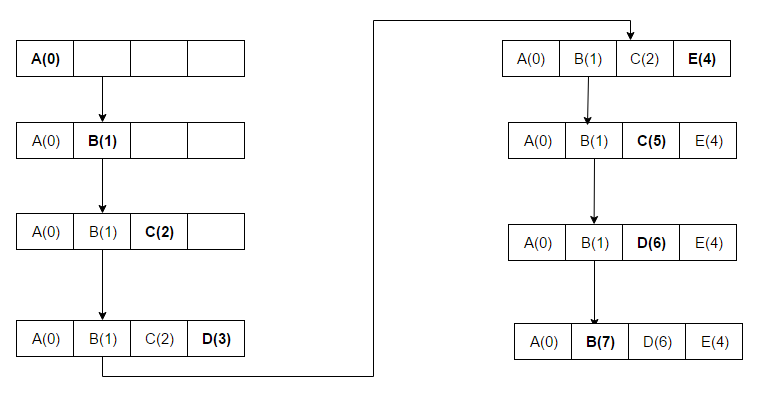

Adaptive replacement cache (ARC) constantly balances between LRU and LFU to improve the combined result.

[36] Pannier[37] is a container-based flash caching mechanism which identifies containers whose blocks have variable access patterns.

Static analysis determines which accesses are cache hits or misses to indicate the worst-case execution time of a program.

[39] This analysis can be refined to distinguish cases where the same program point is accessible by paths that result in misses or hits.

[40] An efficient analysis may be obtained by abstracting sets of cache states by antichains which are represented by compact binary decision diagrams.