Computer programming

Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit.

As early as the 9th century, a programmable music sequencer was invented by the Persian Banu Musa brothers, who described an automated mechanical flute player in the Book of Ingenious Devices.

[5][6] In 1801, the Jacquard loom could produce entirely different weaves by changing the "program" – a series of pasteboard cards with holes punched in them.

[7] The first computer program is generally dated to 1843 when mathematician Ada Lovelace published an algorithm to calculate a sequence of Bernoulli numbers, intended to be carried out by Charles Babbage's Analytical Engine.

Assembly languages were soon developed that let the programmer specify instructions in a text format (e.g., ADD X, TOTAL), with abbreviations for each operation code and meaningful names for specifying addresses.
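
As a rough illustration of what an assembler does with a line such as ADD X, TOTAL, the sketch below replaces a mnemonic and symbolic address names with numbers. The opcode values, the symbol addresses, and the instruction layout are hypothetical and do not describe any real machine.

```python
# Hypothetical opcode and symbol tables, invented for illustration only.
OPCODES = {"LOAD": 0x01, "ADD": 0x02, "STORE": 0x03}
SYMBOLS = {"X": 0x10, "TOTAL": 0x11}


def assemble(line):
    """Translate one line such as 'ADD X, TOTAL' into a list of numeric codes."""
    # Split the mnemonic from the comma-separated operand names.
    mnemonic, _, operand_text = line.strip().partition(" ")
    operands = [name.strip() for name in operand_text.split(",") if name.strip()]
    # Look up the operation code, then the address behind each symbolic name.
    return [OPCODES[mnemonic]] + [SYMBOLS[name] for name in operands]


print(assemble("ADD X, TOTAL"))  # prints [2, 16, 17]
```

The programmer writes only the names; the numeric codes and addresses are looked up mechanically.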

High-level languages made the process of developing a program simpler and more understandable, and less bound to the underlying hardware.

Compilers harnessed the power of computers to make programming easier[16] by allowing programmers to specify calculations by entering a formula using infix notation.
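
The difference can be sketched in a modern language. In the example below, the same calculation is written once as an infix formula and once as an explicit sequence of single operations, similar in spirit to what a programmer would have to spell out without a formula translator. The variable names and values are made up for illustration.

```python
price, quantity, tax = 4.0, 3, 1.5  # illustrative values

# Infix formula: the operators sit between their operands, as in ordinary algebra.
total = price * quantity + tax

# The same calculation written one operation at a time, with the
# order of evaluation spelled out by hand.
accumulator = price
accumulator = accumulator * quantity
accumulator = accumulator + tax

print(total, accumulator)  # prints 13.5 13.5
```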

[20] In computer programming, readability refers to the ease with which a human reader can comprehend the purpose, control flow, and operation of source code.

A study found that a few simple readability transformations made code shorter and drastically reduced the time to understand it.
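
The sketch below shows the general kind of change meant by a readability transformation. It is not taken from the study; both functions and their names are hypothetical. The two versions behave identically, but the second uses descriptive names and a more direct construct.

```python
def f(a):
    # Terse version: the names say nothing about intent.
    r = []
    for i in range(len(a)):
        if a[i] % 2 == 0:
            r.append(a[i] * a[i])
    return r


def squares_of_even_numbers(numbers):
    """Return the square of every even number, in input order."""
    return [n * n for n in numbers if n % 2 == 0]


print(f([1, 2, 3, 4]), squares_of_even_numbers([1, 2, 3, 4]))  # prints [4, 16] [4, 16]
```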

Various visual programming languages have also been developed with the intent to resolve readability concerns by adopting non-traditional approaches to code structure and display.

The academic field and the engineering practice of computer programming are concerned with discovering and implementing the most efficient algorithms for a given class of problems.

For this purpose, algorithms are classified into orders using Big O notation, which expresses resource use—such as execution time or memory consumption—in terms of the size of an input.
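
As a small sketch of what these orders mean in practice, the functions below count the steps taken by a linear scan, which is O(n), and by a binary search on sorted input, which is O(log n). The function names and the input sizes are chosen for illustration.

```python
def linear_search_steps(items, target):
    """Count comparisons made by a linear scan: O(n) in the worst case."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(items, target):
    """Count loop iterations of binary search on sorted input: O(log n)."""
    steps = 0
    low, high = 0, len(items) - 1
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return steps


for n in (1_000, 100_000):
    data = range(n)  # already sorted; range objects support indexing
    print(n, linear_search_steps(data, n - 1), binary_search_steps(data, n - 1))
```

Searching for the last element is the worst case for the linear scan, which takes n steps. The binary search takes roughly log2(n) steps, so multiplying the input size by 100 adds only a handful of iterations.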

The first step in most formal software development processes is requirements analysis, followed by testing to determine value modeling, implementation, and failure elimination (debugging).

For example, COBOL is still strong in corporate data centers,[24] often on large mainframe computers; Fortran in engineering applications; scripting languages in Web development; and C in embedded software.

The choice of language used is subject to many considerations, such as company policy, suitability to task, availability of third-party packages, or individual preference.

Learning to program has a long history related to professional standards and practices, academic initiatives and curriculum, and commercial books and materials for students, self-taught learners, hobbyists, and others who desire to create or customize software for personal use.

[26] Through these social ideals and educational agendas, learning to code has become important not just for scientists and engineers, but for millions of citizens who have come to believe that creating software is beneficial to society and its members.

[27] Before the rise of the commercial Internet in the mid-1990s, most programmers learned about software construction through books, magazines, user groups, and informal instruction methods, with academic coursework and corporate training playing important roles for professional workers.

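The book The Preparation of Programs for an Electronic Digital Computer (1951), by Maurice Wilkes, David Wheeler, and Stanley Gill, offered a selection of common subroutines for handling basic operations on the EDSAC, one of the world's first stored-program computers.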

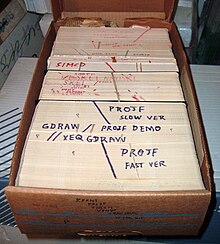

These languages included FLOW-MATIC, COBOL, FORTRAN, ALGOL, Pascal, BASIC, and C. An example of an early programming primer from these years is Marshal H. Wrubel's A Primer of Programming for Digital Computers (1959), which included step-by-step instructions for filling out coding sheets, creating punched cards, and using the keywords in IBM's early FORTRAN system.

In 1961, Alan Perlis suggested that all university freshmen at the Carnegie Institute of Technology take a course in computer programming.

[30] His advice was published in the popular technical journal Computers and Automation, which became a regular source of information for professional programmers.

A sample of these learning resources includes BASIC Computer Games, Microcomputer Edition (1978), by David Ahl; Programming the Z80 (1979), by Rodnay Zaks; Programmer's CP/M Handbook (1983), by Andy Johnson-Laird; C Primer Plus (1984), by Mitchell Waite and The Waite Group; The Peter Norton Programmer's Guide to the IBM PC (1985), by Peter Norton; Advanced MS-DOS (1986), by Ray Duncan; Learn BASIC Now (1989), by Michael Halvorson and David Rygmyr; Programming Windows (1992 and later), by Charles Petzold; Code Complete: A Practical Handbook of Software Construction (1993), by Steve McConnell; and Tricks of the Game-Programming Gurus (1994), by André LaMothe.

Between 2000 and 2010, computer book and magazine publishers declined significantly as providers of programming instruction, as programmers moved to Internet resources to expand their access to information.

New commercial resources included YouTube videos, Lynda.com tutorials (later LinkedIn Learning), Khan Academy, Codecademy, GitHub, and numerous coding bootcamps.

Commercial and non-profit organizations published learning websites for developers, created blogs, and established newsfeeds and social media resources about programming.