Deep reinforcement learning

Reinforcement learning (RL) considers the problem of a computational agent learning to make decisions by trial and error.

Deep reinforcement learning has been used for a diverse set of applications including but not limited to robotics, video games, natural language processing, computer vision,[1] education, transportation, finance and healthcare.

Deep learning methods, often using supervised learning with labeled datasets, have been shown to solve tasks that involve handling complex, high-dimensional raw input data (such as images) with less manual feature engineering than prior methods, enabling significant progress in several fields including computer vision and natural language processing.

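This problem is often modeled mathematically as a Markov decision process (MDP), where an agent at every timestep is in a state $s$, takes an action $a$, receives a scalar reward, and transitions to the next state $s'$ according to the environment dynamics $p(s' \mid s, a)$.

The agent attempts to learn a policy $\pi(a \mid s)$, or map from observations to actions, in order to maximize its returns (expected sum of rewards).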
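As a minimal sketch of what "returns" means here, the following Python computes the sum of rewards along one trajectory and averages it over several sampled trajectories to estimate the expectation. The function names and the discount factor `gamma` are assumptions added for illustration; with `gamma=1.0` the return is the plain sum of rewards described above.

```python
def discounted_return(rewards, gamma=1.0):
    """Sum of rewards along one trajectory, each weighted by gamma**t."""
    total = 0.0
    # Work backwards so that each step computes r_t + gamma * (return from t+1).
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def estimate_expected_return(trajectories, gamma=1.0):
    """Monte Carlo estimate: average the return over sampled trajectories."""
    returns = [discounted_return(rewards, gamma) for rewards in trajectories]
    return sum(returns) / len(returns)


# Hypothetical reward sequences from two episodes under the same policy.
sampled_rewards = [[1.0, 1.0], [3.0]]
average_return = estimate_expected_return(sampled_rewards)
```

A policy that maximizes returns is one for which this average, taken over the trajectories the policy itself generates, is as large as possible.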

In many practical decision-making problems, the states of the MDP are high-dimensional (e.g., images from a camera or the raw sensor stream from a robot), and such problems cannot be solved by traditional RL algorithms.

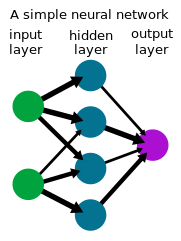

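Deep reinforcement learning algorithms incorporate deep learning to solve such MDPs, often representing the policy or other learned functions as a neural network and developing specialized algorithms that perform well in this setting.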

Because the entire decision-making process from sensors to motors in such a robot or agent involves a single neural network, it is also sometimes called end-to-end reinforcement learning.

[4] One of the first successful applications of reinforcement learning with neural networks was TD-Gammon, a computer program developed in 1992 for playing backgammon.

With zero knowledge built in, the network learned to play the game at an intermediate level by self-play and TD(λ).

Seminal textbooks by Sutton and Barto on reinforcement learning,[6] Bertsekas and Tsitsiklis on neuro-dynamic programming,[7] and others[8] advanced knowledge and interest in the field.

Katsunari Shibata's group showed that various functions emerge in this framework,[9][10][11] including image recognition, color constancy, sensor motion (active recognition), hand-eye coordination and hand reaching movement, explanation of brain activities, knowledge transfer, memory,[12] selective attention, prediction, and exploration.

Beginning around 2013, DeepMind showed impressive learning results using deep RL to play Atari video games.

They used a deep convolutional neural network to process four frames of RGB pixels (84×84) as input.

[15] Deep reinforcement learning reached another milestone in 2015 when AlphaGo,[16] a computer program trained with deep RL to play Go, became the first computer Go program to beat a human professional Go player without handicap on a full-sized 19×19 board.

[17] Separately, researchers from Carnegie Mellon University reached another milestone in 2019 with Pluribus, a poker-playing computer program that was the first to beat professionals at multiplayer games of no-limit Texas hold 'em.

OpenAI Five, a program for playing five-on-five Dota 2, beat the previous world champions in a demonstration match in 2019.

[19][20] Deep RL has also found sustainability applications, such as reducing energy consumption at data centers.

[21] Deep RL for autonomous driving is an active area of research in academia and industry.

[23] Various techniques exist to train policies to solve tasks with deep reinforcement learning algorithms, each with its own benefits.

RL agents usually collect data with some type of stochastic policy, such as a Boltzmann distribution in discrete action spaces or a Gaussian distribution in continuous action spaces, inducing basic exploration behavior.
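The two kinds of stochastic policy mentioned above can be sketched in a few lines of standard-library Python. This is an illustrative sketch, not a reference implementation: the function names, the `temperature` parameter, and the use of a fixed standard deviation `std` are assumptions, and in deep RL the action preferences and the Gaussian mean would typically be outputs of a neural network.

```python
import math
import random


def boltzmann_probs(preferences, temperature=1.0):
    """Turn per-action preferences into Boltzmann (softmax) probabilities."""
    top = max(preferences)  # subtract the max for numerical stability
    weights = [math.exp((p - top) / temperature) for p in preferences]
    total = sum(weights)
    return [w / total for w in weights]


def sample_discrete_action(preferences, temperature=1.0, rng=random):
    """Sample an action index; higher temperature means more exploration."""
    probs = boltzmann_probs(preferences, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def sample_continuous_action(mean, std, rng=random):
    """Sample each action dimension from a Gaussian centered on the mean."""
    return [rng.gauss(m, std) for m in mean]


# Hypothetical usage with made-up preferences and means.
rng = random.Random(0)
discrete_action = sample_discrete_action([1.0, 2.0, 0.5], temperature=0.5, rng=rng)
continuous_action = sample_continuous_action([0.0, 1.0], std=0.1, rng=rng)
```

Because actions are sampled rather than chosen greedily, the agent occasionally tries actions it currently rates lower, which is the basic exploration behavior the text refers to.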

This is done by "modify[ing] the loss function (or even the network architecture) by adding terms to incentivize exploration".

At the extreme, offline (or "batch") RL considers learning a policy from a fixed dataset without additional interaction with the environment.
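To make the offline setting concrete, here is a minimal sketch that learns from a fixed list of logged transitions and never queries an environment. It uses tabular Q-learning swept repeatedly over the dataset, which is an assumption chosen for brevity; practical offline deep RL methods use neural networks and additional safeguards. The dataset, the tuple layout `(state, action, reward, next_state, done)`, and all hyperparameters are hypothetical.

```python
from collections import defaultdict


def offline_q_learning(dataset, n_actions, gamma=0.9, lr=0.5, sweeps=200):
    """Estimate action values using only the logged transitions in dataset."""
    q = defaultdict(lambda: [0.0] * n_actions)
    for _ in range(sweeps):
        for state, action, reward, next_state, done in dataset:
            # Bootstrapped target; terminal transitions have no future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += lr * (target - q[state][action])
    return q


def greedy_policy(q, state):
    """Pick the action with the highest estimated value in this state."""
    values = q[state]
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical logged data from a two-state chain:
# action 1 moves right (reaching the goal from state 1), action 0 stays put.
logged = [
    (0, 1, 0.0, 1, False),
    (1, 1, 1.0, 2, True),
    (0, 0, 0.0, 0, False),
    (1, 0, 0.0, 1, False),
]
q_values = offline_q_learning(logged, n_actions=2)
best_action_in_start = greedy_policy(q_values, 0)
```

The learned policy can only be as good as the coverage of the logged data allows, since state-action pairs that never appear in the dataset keep their initial value estimates.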

[33] Hindsight experience replay is a method for goal-conditioned RL that involves storing and learning from previous failed attempts to complete a task.

[34] While a failed attempt may not have reached the intended goal, it can serve as a lesson for how to achieve the unintended result through hindsight relabeling.
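Hindsight relabeling can be sketched as follows: after an episode that missed its intended goal, the transitions are stored again with the goal replaced by the state the agent actually reached, so the same data now describes a success. This sketch assumes the simplest variant, relabeling with the final state of the episode, and a sparse reward of 0 on reaching the goal and -1 otherwise; the tuple layout and function names are hypothetical.

```python
def sparse_reward(state, goal):
    """Assumed reward: 0 when the goal is reached, -1 otherwise."""
    return 0.0 if state == goal else -1.0


def relabel_with_hindsight(episode, reward_fn=sparse_reward):
    """Replace the intended goal with the state actually reached at the end.

    episode is a list of (state, action, next_state, goal) tuples.
    Returns (state, action, next_state, new_goal, reward) tuples.
    """
    achieved_goal = episode[-1][2]  # final next_state of the episode
    relabeled = []
    for state, action, next_state, _intended_goal in episode:
        reward = reward_fn(next_state, achieved_goal)
        relabeled.append((state, action, next_state, achieved_goal, reward))
    return relabeled


# Hypothetical failed attempt: the agent aimed for position 5 but stopped at 3.
failed_episode = [(0, 1, 1, 5), (1, 1, 2, 5), (2, 1, 3, 5)]
hindsight_episode = relabel_with_hindsight(failed_episode)
```

Both the original and the relabeled transitions would typically be placed in the replay buffer, so that a goal-conditioned policy learns from the intended goal and from the goal that was reached by accident.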

With this layer of abstraction, deep reinforcement learning algorithms can be designed to be general, so that the same model can be used for different tasks.