Mathematical economics

[3] Mathematics allows economists to form meaningful, testable propositions about wide-ranging and complex subjects which could less easily be expressed informally.

Then, mainly in German universities, a style of instruction emerged which dealt specifically with detailed presentation of data as it related to public administration.

At the same time, a small group of professors in England established a method of "reasoning by figures upon things relating to government" and referred to this practice as Political Arithmetick.

[9] Sir William Petty wrote at length on issues that would later concern economists, such as taxation, velocity of money and national income, but while his analysis was numerical, he rejected abstract mathematical methodology.

More importantly, until Johann Heinrich von Thünen's The Isolated State in 1826, economists did not develop explicit and abstract models for behavior in order to apply the tools of mathematics.

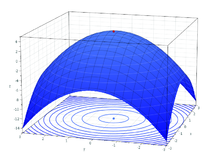

[30] He adapted Jeremy Bentham's felicific calculus to economic behavior, allowing the outcome of each decision to be converted into a change in utility.

[40] Pareto's proof is commonly conflated with Walrasian equilibrium or informally ascribed to Adam Smith's invisible hand hypothesis.

In the landmark treatise Foundations of Economic Analysis (1947), Paul Samuelson identified a common paradigm and mathematical structure across multiple fields in the subject, building on previous work by Alfred Marshall.

For his model of an expanding economy, von Neumann proved the existence and uniqueness of an equilibrium using his generalization of Brouwer's fixed-point theorem.

[59] Optimality properties for an entire market system may be stated in mathematical terms, as in the formulation of the two fundamental theorems of welfare economics[61] and in the Arrow–Debreu model of general equilibrium (also discussed below).

Economists who conducted research in nonlinear programming have also won the Nobel Prize, notably Ragnar Frisch in addition to Kantorovich, Hurwicz, Koopmans, Arrow, and Samuelson.

[8][44][76] Following von Neumann's program, Kenneth Arrow and Gérard Debreu formulated abstract models of economic equilibria using convex sets and fixed-point theory.

[78] In Russia, the mathematician Leonid Kantorovich developed economic models in partially ordered vector spaces that emphasized the duality between quantities and prices.

[78][80][81] Even in finite dimensions, the concepts of functional analysis have illuminated economic theory, particularly in clarifying the role of prices as normal vectors to a hyperplane supporting a convex set, representing production or consumption possibilities.
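
The geometric claim above can be stated compactly. As a sketch under assumed notation (the symbols $Y$, $y^*$, and $p$ are introduced here for illustration and do not appear in the source): let $Y \subset \mathbb{R}^n$ be a convex production set and $y^*$ a point on its boundary. The supporting hyperplane theorem then gives a nonzero vector $p$ with

$$
p \cdot y \le p \cdot y^* \quad \text{for all } y \in Y .
$$

Read as a price vector, $p$ is normal to the hyperplane $\{ y : p \cdot y = p \cdot y^* \}$, which touches $Y$ at $y^*$, so $y^*$ maximizes profit $p \cdot y$ over $Y$ at those prices.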

Moreover, differential calculus has returned to the highest levels of mathematical economics, general equilibrium theory (GET), as practiced by the "GET-set" (the humorous designation due to Jacques H. Drèze).

[86][87] These advances have changed the traditional narrative of the history of mathematical economics, following von Neumann, which celebrated the abandonment of differential calculus.

For example, cooperative game theory was used in designing the water distribution system of Southern Sweden and for setting rates for dedicated telephone lines in the US.

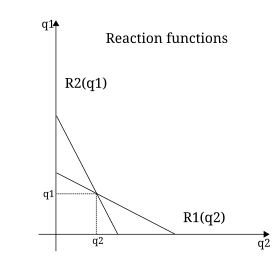

Earlier neoclassical theory had only bounded the range of bargaining outcomes, and only in special cases such as bilateral monopoly or along the contract curve of the Edgeworth box.

Following von Neumann's program, however, John Nash used fixed-point theory to prove conditions under which the bargaining problem and noncooperative games can generate a unique equilibrium solution.
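
To make the equilibrium concept concrete, here is a minimal sketch in Python. It is not Nash's fixed-point argument, which concerns mixed strategies; it only enumerates pure-strategy equilibria of a two-player game by checking mutual best responses. The function name `pure_nash_equilibria` and the payoff matrices are illustrative assumptions, not taken from the source.

```python
def pure_nash_equilibria(payoff_row, payoff_col):
    """Return all (row, col) strategy pairs where neither player can gain
    by deviating unilaterally. Hypothetical helper for illustration only."""
    rows, cols = len(payoff_row), len(payoff_row[0])
    equilibria = []
    for r in range(rows):
        for c in range(cols):
            # Row player: no other row does strictly better against column c.
            row_best = all(payoff_row[r][c] >= payoff_row[k][c] for k in range(rows))
            # Column player: no other column does strictly better against row r.
            col_best = all(payoff_col[r][c] >= payoff_col[r][k] for k in range(cols))
            if row_best and col_best:
                equilibria.append((r, c))
    return equilibria


# Prisoner's dilemma with assumed payoffs; strategy 0 = cooperate, 1 = defect.
pd_row = [[-1, -3], [0, -2]]
pd_col = [[-1, 0], [-3, -2]]
pd_equilibria = pure_nash_equilibria(pd_row, pd_col)

# Matching pennies: a zero-sum game with no pure-strategy equilibrium.
mp_row = [[1, -1], [-1, 1]]
mp_col = [[-1, 1], [1, -1]]
mp_equilibria = pure_nash_equilibria(mp_row, mp_col)
```

In the prisoner's dilemma the only pair that survives the check is mutual defection, while matching pennies yields an empty list; the latter is the kind of game where an equilibrium exists only in mixed strategies.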

[94] It has also given rise to the subject of mechanism design (sometimes called reverse game theory), which has private and public-policy applications concerning ways of improving economic efficiency through incentives for information sharing.

[100] Starting from specified initial conditions, the computational economic system is modeled as evolving over time as its constituent agents repeatedly interact with each other.

[101] In contrast to other standard modeling methods, ACE events are driven solely by initial conditions, whether or not equilibria exist or are computationally tractable.
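
The idea of a system that evolves from specified initial conditions through repeated agent interaction can be sketched in a few lines of Python. This is a toy illustration, not a model from the ACE literature: the exchange rule, the function name `simulate`, and all parameter values are assumptions made for the example.

```python
import random


def simulate(num_agents=50, steps=1000, seed=0):
    """Evolve a toy exchange economy forward from its initial conditions.

    Hypothetical rule: at each step two distinct agents meet at random and
    the first transfers up to 10% of its current wealth to the second.
    """
    rng = random.Random(seed)          # the seed is part of the initial conditions
    wealth = [100.0] * num_agents      # every agent starts with equal wealth
    history = [list(wealth)]           # snapshot of the state at each step
    for _ in range(steps):
        giver, receiver = rng.sample(range(num_agents), 2)
        transfer = rng.uniform(0.0, 0.1) * wealth[giver]
        wealth[giver] -= transfer
        wealth[receiver] += transfer
        history.append(list(wealth))
    return history


history = simulate()
final_wealth = history[-1]
```

No equilibrium is computed or assumed anywhere in the loop: the trajectory in `history` is determined entirely by the starting wealth, the interaction rule, and the seed, so rerunning with the same inputs reproduces the same path.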

[120][121] A student of Frisch's, Trygve Haavelmo published The Probability Approach in Econometrics in 1944, in which he asserted that precise statistical analysis could be used as a tool to validate mathematical theories about economic actors with data from complex sources.

Alfred Marshall argued that every economic problem that can be quantified, analytically expressed, and solved should be treated by means of mathematical work.

Graduate programs in both economics and finance require strong undergraduate preparation in mathematics for admission and, for this reason, attract an increasing number of mathematicians.

For example, during the discussion of the efficacy of a corporate tax cut for increasing the wages of workers, a simple mathematical model proved beneficial to understanding the issues at hand.

[130] Friedrich Hayek contended that the use of formal techniques projects a scientific exactness that does not appropriately account for informational limitations faced by real economic agents.

[131] In an interview in 1999, the economic historian Robert Heilbroner stated:[132] I guess the scientific approach began to penetrate and soon dominate the profession in the past twenty to thirty years.

Keynes wrote in The General Theory:[137] It is a great fault of symbolic pseudo-mathematical methods of formalising a system of economic analysis ... that they expressly assume strict independence between the factors involved and lose their cogency and authority if this hypothesis is disallowed; whereas, in ordinary discourse, where we are not blindly manipulating and know all the time what we are doing and what the words mean, we can keep ‘at the back of our heads’ the necessary reserves and qualifications and the adjustments which we shall have to make later on, in a way in which we cannot keep complicated partial differentials ‘at the back’ of several pages of algebra which assume they all vanish.

Too large a proportion of recent ‘mathematical’ economics are merely concoctions, as imprecise as the initial assumptions they rest on, which allow the author to lose sight of the complexities and interdependencies of the real world in a maze of pretentious and unhelpful symbols.

In response to these criticisms, Paul Samuelson argued that mathematics is a language, repeating a thesis of Josiah Willard Gibbs.