External memory algorithm

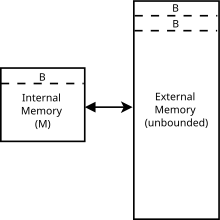

Both the internal and external memory are divided into blocks of size B.

One input/output or memory transfer operation consists of moving a block of B contiguous elements from external to internal memory, and the running time of an algorithm is determined by the number of these input/output operations.

[4] Algorithms in the external memory model take advantage of the fact that retrieving one object from external memory retrieves an entire block of size B.

Searching for an element among N objects is possible in the external memory model using a B-tree with branching factor B.

Information theoretically, this is the minimum running time possible for these operations, so using a B-tree is asymptotically optimal.

This bound also applies to the fast Fourier transform in the external memory model.

The model is also useful for analyzing algorithms that work on datasets too big to fit in internal memory.

[4] A typical example is geographic information systems, especially digital elevation models, where the full data set easily exceeds several gigabytes or even terabytes of data.

This methodology extends beyond general purpose CPUs and also includes GPU computing as well as classical digital signal processing.

In general-purpose computing on graphics processing units (GPGPU), powerful graphics cards (GPUs) with little memory (compared with the more familiar system memory, which is most often referred to simply as RAM) are utilized with relatively slow CPU-to-GPU memory transfer (when compared with computation bandwidth).

An early use of the term "out-of-core" as an adjective is in 1962 in reference to devices that are other than the core memory of an IBM 360.