Face perception

[2] Information gathered from the face helps people understand each other's identity and what they are thinking and feeling, anticipate their actions, recognize their emotions, build connections, and communicate through body language.

Being able to perceive identity, mood, age, sex, and race lets people shape the way they interact with one another and understand their immediate surroundings.

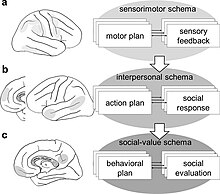

One of the most widely accepted theories of face perception argues that understanding faces involves several stages:[7] from basic perceptual manipulations of the sensory information to derive details about the person (such as age, gender or attractiveness), to recalling meaningful details such as the person's name and any relevant past experiences with them.

This model, developed by Vicki Bruce and Andrew Young in 1986, argues that face perception involves independent sub-processes working in unison.

In prosopometamorphopsia (PMO), a wide variety of distortions can occur: features can droop, enlarge, or become discolored, or the entire face can appear to shift relative to the head.

In half of the reported cases, distortions are restricted to either the left or the right side of the face, and this form of PMO is called hemi-prosopometamorphopsia (hemi-PMO).

As stated earlier, research on the impairments caused by brain injury or neurological illness has helped develop our understanding of cognitive processes.

Novel optical illusions such as the flashed face distortion effect, in which scientific phenomenology outpaces neurological theory, also provide areas for research.

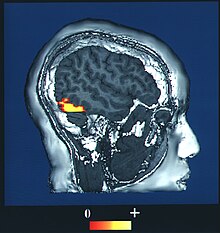

[41][42][43][44][45][46] However, some studies show increased activation in one side over the other: for instance, the right fusiform gyrus is more important for facial processing in complex situations.

[35] The majority of fMRI studies use blood-oxygen-level-dependent (BOLD) contrast to determine which areas of the brain are activated by various cognitive functions.

[62] Cognitive neuroscientists Isabel Gauthier and Michael Tarr are two of the major proponents of the view that face recognition involves expert discrimination of similar objects.

[63] Other scientists, in particular Nancy Kanwisher and her colleagues, argue that face recognition involves processes that are face-specific and that are not recruited by expert discriminations in other object classes.

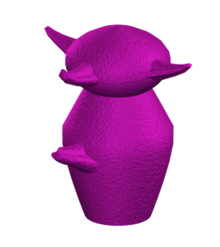

[64] Studies by Gauthier have shown that an area of the brain known as the fusiform gyrus (sometimes called the fusiform face area because it is active during face recognition) is also active when study participants are asked to discriminate between different types of birds and cars,[65] and even when participants become expert at distinguishing computer-generated nonsense shapes known as greebles.

[71] fMRI studies have asked whether expertise has any specific connection to the fusiform face area in particular, by testing for expertise effects in both the fusiform face area and a nearby region that is not face-selective, the lateral occipital complex (LOC) (Rhodes et al., JOCN 2004; Op de Beeck et al., JN 2006; Moore et al., JN 2006; Yue et al., VR 2006).

The experiment used a between-group design: participants were presented with either the faces or the voices of celebrities and familiar people and were asked to recall information about them.

[77] To eliminate these errors, experimenters removed parts of the voice samples that could give clues to the identity of the target, such as catchphrases.

[80] However, researchers have since found an even more effective way to control not only the frequency of exposure but also the content of the speech extracts: the associative learning paradigm.

[83][85] Again, the results showed that semantic information is more accessible when individuals are recognizing faces than when they are recognizing voices, even when the frequency of exposure is controlled.

[96] Face perception showed no association with estimated intelligence, suggesting that face recognition in women is unrelated to several basic cognitive processes.

This agrees with the suggestion made by Gauthier in 2000 that the extrastriate cortex contains areas that are best suited for different computations, a proposal described as the process-map model.

[118] D. Stephen Lindsay and colleagues note that results in these studies could be due to intrinsic difficulty in recognizing the faces presented, an actual difference in the size of the cross-race effect between the two test groups, or some combination of these two factors.

[125] The own-race effect appears to be related to an increased ability to extract information about the spatial relationships between different facial features.

[134] Autism often manifests in weakened social ability, due to reduced eye contact, joint attention, interpretation of emotional expression, and communicative skills.

[144] Furthermore, research suggests a link between decreased face processing abilities in individuals with autism and later deficits in theory of mind.

[146] As autistic individuals age, scores on behavioral tests assessing ability to perform face-emotion recognition increase to levels similar to controls.

[151][91] People with schizophrenia tend to demonstrate a reduced N170 response, atypical face scanning patterns, and a configural processing dysfunction.

This work has occurred in a branch of artificial intelligence known as computer vision, which uses the psychology of face perception to inform software design.

A 2003 study found significantly poorer facial recognition abilities in individuals with Turner syndrome, suggesting that the amygdala impacts face perception.

[161] Research suggests that more extreme cases of facial recognition ability, specifically hereditary prosopagnosia, are highly genetically correlated.

[165] Research has also correlated the probability of hereditary prosopagnosia with single-nucleotide polymorphisms[164] along the oxytocin receptor gene (OXTR), suggesting that these alleles serve a critical role in normal face perception.