Generalized distributive law

[1] It is a synthesis of the work of many authors in the information theory, digital communications, signal processing, statistics, and artificial intelligence communities.

The law and algorithm were introduced in a semi-tutorial by Srinivas M. Aji and Robert J. McEliece with the same title.

[2] As it can be observed from the definition, application of distributive law to an arithmetic expression reduces the number of operations in it.

In the previous example the total number of operations reduced from three (two multiplications and an addition in

Generalization of distributive law leads to a large family of fast algorithms.

This shows by an example that applying distributive law reduces the computational complexity which is one of the good features of a "fast algorithm".

Some of the problems that used distributive law to solve can be grouped as follows: MPF or marginalize a product function is a general computational problem which as special case includes many classical problems such as computation of discrete Hadamard transform, maximum likelihood decoding of a linear code over a memory-less channel, and matrix chain multiplication.

The power of the GDL lies in the fact that it applies to situations in which additions and multiplications are generalized.

, then one can solve the MPF problem basing on the notion of belief propagation which is a special use of "message passing" technique.

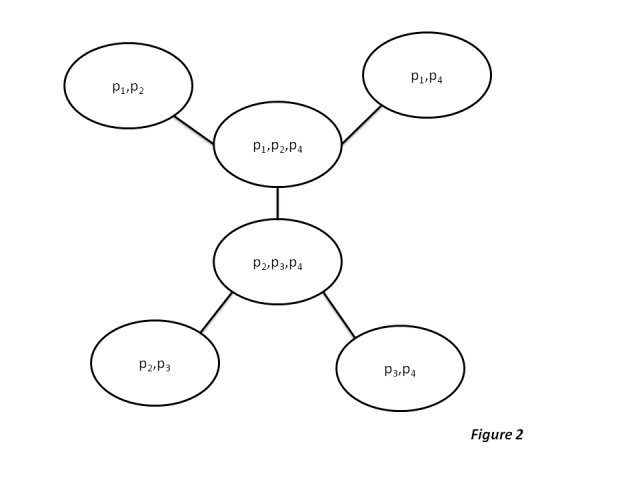

The required relationship is that the given set of local domains can be organised into a junction tree.

But however, if add the two dummy domains as shown below then organizing the updated set into a junction tree would be possible and easy too.

Output: For the given set of domains, possible minimum number of operations that is required to solve the problem is computed.

Similarly each vertex has a state which is defined as a table containing the values from the function

gets updated, it follows the following equation: For the given set of local domains as input, we find out if we can create a junction tree, either by using the set directly or by adding dummy domains to the set first and then creating the junction tree, if construction junction is not possible then algorithm output that there is no way to reduce the number of steps to compute the given equation problem, but once we have junction tree, algorithm will have to schedule messages and compute states, by doing these we can know where steps can be reduced, hence will be discusses this below.

Lets begin with the single-vertex problem, GDL will start by directing each edge towards the targeted vertex

The messages are started from the leaf nodes(where the degree is 1) go up towards the target vertex

To solve the All-Vertices problem, we can schedule GDL in several ways, some of them are parallel implementation where in each round, every state is updated and every message is computed and transmitted at the same time.

In this type of implementation the states and messages will stabilizes after number of rounds that is at most equal to the diameter of the tree.

Another way to schedule GDL for this problem is serial implementation where its similar to the Single vertex problem except that we don't stop the algorithm until all the vertices of a required set have not got all the messages from all their neighbors and have compute their state.

The key to constructing a junction tree lies in the local domain graph

, the corresponding message trellis as a finite directed graph with Vertex set of

Here we try to explain the complexity of solving the MPF problem in terms of the number of mathematical operations required for the calculation.

Similar to the above explained example we will be expressing the equations in different forms to perform as few operation as possible by applying the GDL.

As explained in the previous sections we solve the problem by using the concept of the junction trees.

When this process is propagated up the tree the minimum of the group of elements will be found at the root.

The following is the complexity for solving the junction tree using message passing We rewrite the formula used earlier to the following form.

Now we consider the all-vertex problem where the message will have to be sent in both the directions and state must be computed at both the vertexes.

and sometimes this might mean adding a local domain to lower the junction tree complexity.

But even in cases where there are cycles and a number of iterations the messages will approximately be equal to the objective function.

The experiments on Gallager–Tanner–Wiberg algorithm for low density parity-check codes were supportive of this claim.