Group method of data handling

Group method of data handling (GMDH) is a family of inductive algorithms for computer-based mathematical modeling of multi-parametric datasets that features fully automatic structural and parametric optimization of models.

GMDH is used in such fields as data mining, knowledge discovery, prediction, complex systems modeling, optimization and pattern recognition.

[1] GMDH algorithms are characterized by inductive procedure that performs sorting-out of gradually complicated polynomial models and selecting the best solution by means of the external criterion.

Other names include "polynomial feedforward neural network",[3] or "self-organization of models".

We now run the models on the training dataset, to obtain a sequence of transformed observations:

is smaller than the previous one, the process continues, giving us increasingly deep models.

For example, one might keep running the algorithm for several more steps, in the hope of passing a temporary rise in

Instead of a degree-2 polynomial in 2 variables, each unit may use higher-degree polynomials in more variables:[1] And more generally, a GMDH model with multiple inputs and one output is a subset of components of the base function (1): where fi are elementary functions dependent on different sets of inputs, ai are coefficients and m is the number of the base function components.

External criteria are optimization objectives for the model, such as minimizing mean-squared error on the validation set, as given above.

The most common criteria are: Like linear regression, which fits a linear equation over data, GMDH fits arbitrarily high orders of polynomial equations over data.

[6][7] To choose between models, two or more subsets of a data sample are used, similar to the train-validation-test split.

GMDH combined ideas from:[8] black box modeling, successive genetic selection of pairwise features,[9] the Gabor's principle of "freedom of decisions choice",[10] and the Beer's principle of external additions.

The model is structured as a feedforward neural network, but without restrictions on the depth, they had a procedure for automatic models structure generation, which imitates the process of biological selection with pairwise genetic features.

The method was originated in 1968 by Prof. Alexey G. Ivakhnenko in the Institute of Cybernetics in Kyiv.

Period 1968–1971 is characterized by application of only regularity criterion for solving of the problems of identification, pattern recognition and short-term forecasting.

As reference functions polynomials, logical nets, fuzzy Zadeh sets and Bayes probability formulas were used.

The problem of modeling of noised data and incomplete information basis was solved.

Multicriteria selection and utilization of additional priory information for noiseimmunity increasing were proposed.

Best experiments showed that with extended definition of the optimal model by additional criterion noise level can be ten times more than signal.

In 1977 a solution of objective systems analysis problems by multilayered GMDH algorithms was proposed.

It turned out that sorting-out by criteria ensemble finds the only optimal system of equations and therefore to show complex object elements, their main input and output variables.

Since 1989 the new algorithms (AC, OCC, PF) for non-parametric modeling of fuzzy objects and SLP for expert systems were developed and investigated.

[13] Present stage of GMDH development can be described as blossom out of deep learning neuronets and parallel inductive algorithms for multiprocessor computers.

The very first consideration order used in GMDH and originally called multilayered inductive procedure is the most popular one.

Multilayered procedure is equivalent to the Artificial Neural Network with polynomial activation function of neurons.

Li showed that GMDH-type neural network performed better than the classical forecasting algorithms such as Single Exponential Smooth, Double Exponential Smooth, ARIMA and back-propagation neural network.

[15] Another important approach to partial models consideration that becomes more and more popular is a combinatorial search that is either limited or full.

This approach has some advantages against Polynomial Neural Networks, but requires considerable computational power and thus is not effective for objects with a large number of inputs.

An important achievement of Combinatorial GMDH is that it fully outperforms linear regression approach if noise level in the input data is greater than zero.

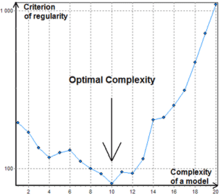

Basic Combinatorial algorithm makes the following steps: In contrast to GMDH-type neural networks Combinatorial algorithm usually does not stop at the certain level of complexity because a point of increase of criterion value can be simply a local minimum, see Fig.1.