Cross-validation (statistics)

Cross-validation,[2][3][4] sometimes called rotation estimation[5][6][7] or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistical analysis will generalize to an independent data set.

[8][9] The goal of cross-validation is to test the model's ability to predict new data that was not used in estimating it, in order to flag problems like overfitting or selection bias[10] and to give an insight on how the model will generalize to an independent dataset (i.e., an unknown dataset, for instance from a real problem).

(Cross-validation in the context of linear regression is also useful in that it can be used to select an optimally regularized cost function.)

Cross-validation is, thus, a generally applicable way to predict the performance of a model on unavailable data using numerical computation in place of theoretical analysis.

passes may still require quite a large computation time, in which case other approaches such as k-fold cross validation may be more appropriate.

[14] Pseudo-code algorithm: Input: x, {vector of length N with x-values of incoming points} y, {vector of length N with y-values of the expected result} interpolate( x_in, y_in, x_out ), { returns the estimation for point x_out after the model is trained with x_in-y_in pairs} Output: err, {estimate for the prediction error} Steps: Non-exhaustive cross validation methods do not compute all ways of splitting the original sample.

In k-fold cross-validation, the original sample is randomly partitioned into k equal sized subsamples, often referred to as "folds".

In the case of binary classification, this means that each partition contains roughly the same proportions of the two types of class labels.

Similarly, indicators of the specific role played by various predictor variables (e.g., values of regression coefficients) will tend to be unstable.

[6][20] This method, also known as Monte Carlo cross-validation,[21][22] creates multiple random splits of the dataset into training and validation data.

This method also exhibits Monte Carlo variation, meaning that the results will vary if the analysis is repeated with different random splits.

In a stratified variant of this approach, the random samples are generated in such a way that the mean response value (i.e. the dependent variable in the regression) is equal in the training and testing sets.

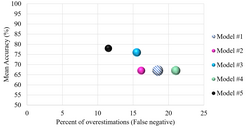

For example, for binary classification problems, each case in the validation set is either predicted correctly or incorrectly.

In this situation the misclassification error rate can be used to summarize the fit, although other measures derived from information (e.g., counts, frequency) contained within a contingency table or confusion matrix could also be used.

In this way, they can attempt to counter the volatility of cross-validation when the sample size is small and include relevant information from previous research.

Since a simple equal-weighted forecast is difficult to beat, a penalty can be added for deviating from equal weights.

can be defined so that a user can intuitively balance between the accuracy of cross-validation and the simplicity of sticking to a reference parameter

candidate configuration that might be selected, then the loss function that is to be minimized can be defined as Relative accuracy can be quantified as

If we imagine sampling multiple independent training sets following the same distribution, the resulting values for F* will vary.

Some progress has been made on constructing confidence intervals around cross-validation estimates,[26] but this is considered a difficult problem.

In some cases such as least squares and kernel regression, cross-validation can be sped up significantly by pre-computing certain values that are needed repeatedly in the training, or by using fast "updating rules" such as the Sherman–Morrison formula.

However one must be careful to preserve the "total blinding" of the validation set from the training procedure, otherwise bias may result.

In many applications of predictive modeling, the structure of the system being studied evolves over time (i.e. it is "non-stationary").

As another example, suppose a model is developed to predict an individual's risk for being diagnosed with a particular disease within the next year.

If the model is trained using data from a study involving only a specific population group (e.g. young people or males), but is then applied to the general population, the cross-validation results from the training set could differ greatly from the actual predictive performance.

The reason for the success of the swapped sampling is a built-in control for human biases in model building.

[33] However, if performance is described by a single summary statistic, it is possible that the approach described by Politis and Romano as a stationary bootstrap[34] will work.

Using cross-validation, we can obtain empirical estimates comparing these two methods in terms of their respective fractions of misclassified characters.

[36] Suppose we are using the expression levels of 20 proteins to predict whether a cancer patient will respond to a drug.

However under cross-validation, the model with the best fit will generally include only a subset of the features that are deemed truly informative.