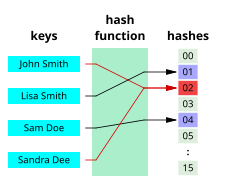

Hash function

Hash functions rely on generating favorable probability distributions for their effectiveness, reducing access time to nearly constant.

A necessary adjunct to the hash function is a collision-resolution method that employs an auxiliary data structure like linked lists, or systematic probing of the table to find an empty slot.

A cache is generally simpler than a hashed search table, since any collision can be resolved by discarding or writing back the older of the two colliding items.

[4] Hash functions are an essential ingredient of the Bloom filter, a space-efficient probabilistic data structure that is used to test whether an element is a member of a set.

This principle is widely used in computer graphics, computational geometry, and many other disciplines, to solve many proximity problems in the plane or in three-dimensional space, such as finding closest pairs in a set of points, similar shapes in a list of shapes, similar images in an image database, and so on.

[5] A good hash function should map the expected inputs as evenly as possible over its output range.

The reason for this last requirement is that the cost of hashing-based methods goes up sharply as the number of collisions—pairs of inputs that are mapped to the same hash value—increases.

A ratio within one confidence interval (such as 0.95 to 1.05) is indicative that the hash function evaluated has an expected uniform distribution.

This requirement excludes hash functions that depend on external variable parameters, such as pseudo-random number generators or the time of day.

A common solution is to compute a fixed hash function with a very large range (say, 0 to 232 − 1), divide the result by n, and use the division's remainder.

When this approach is used, the hash function must be chosen so that the result has fairly uniform distribution between 0 and n − 1, for any value of n that may occur in the application.

A mid-squares hash code is produced by squaring the input and extracting an appropriate number of middle digits or bits.

The mid-squares method produces a reasonable hash code if there is not a lot of leading or trailing zeros in the key.

A standard technique is to use a modulo function on the key, by selecting a divisor M which is a prime number close to the table size, so h(K) ≡ K (mod M).

This is special because arithmetic modulo 2w is done by default in low-level programming languages and integer division by a power of 2 is simply a right-shift, so, in C, for example, this function becomes and for fixed m and w this translates into a single integer multiplication and right-shift, making it one of the fastest hash functions to compute.

[12] A transmutation on the input which shifts the span of retained top bits down and XORs or ADDs them to the key before the multiplication step corrects for this.

[13] Zobrist hashing was originally introduced as a means of compactly representing chess positions in computer game-playing programs.

A unique random number was assigned to represent each type of piece (six each for black and white) on each space of the board.

Later, the method was extended to hashing integers by representing each byte in each of 4 possible positions in the word by a unique 32-bit random number.

This kind of function has some nice theoretical properties, one of which is called 3-tuple independence, meaning that every 3-tuple of keys is equally likely to be mapped to any 3-tuple of hash values.

Selected divisors or multipliers in the division and multiplicative schemes may make more uniform hash functions if the keys are cyclic or have other redundancies.

When the data values are long (or variable-length) character strings—such as personal names, web page addresses, or mail messages—their distribution is usually very uneven, with complicated dependencies.

A better idea is to multiply the hash total by a constant, typically a sizable prime number, before adding in the next character, ignoring overflow.

The final operation would be a modulo, mask, or other function to reduce the word value to an index the size of the table.

Modern microprocessors will allow for much faster processing if 8-bit character strings are not hashed by processing one character at a time, but by interpreting the string as an array of 32-bit or 64-bit integers and hashing/accumulating these "wide word" integer values by means of arithmetic operations (e.g. multiplication by constant and bit-shifting).

The final word, which may have unoccupied byte positions, is filled with zeros or a specified randomizing value before being folded into the hash.

The accumulated hash code is reduced by a final modulo or other operation to yield an index into the table.

The practical worst case is the expected longest probe sequence (hash function + collision resolution method).

While Knuth worries about adversarial attack on real time systems,[24] Gonnet has shown that the probability of such a case is "ridiculously small".

[26]: 514 In his research for the precise origin of the term, Donald Knuth notes that, while Hans Peter Luhn of IBM appears to have been the first to use the concept of a hash function in a memo dated January 1953, the term itself did not appear in published literature until the late 1960s, in Herbert Hellerman's Digital Computer System Principles, even though it was already widespread jargon by then.