Hill climbing

In numerical analysis, hill climbing is a mathematical optimization technique which belongs to the family of local search.

For example, hill climbing can be applied to the travelling salesman problem.

The algorithm starts with such a solution and makes small improvements to it, such as switching the order in which two cities are visited.

It is used widely in artificial intelligence, for reaching a goal state from a starting node.

Although more advanced algorithms such as simulated annealing or tabu search may give better results, in some situations hill climbing works just as well.

Hill climbing can often produce a better result than other algorithms when the amount of time available to perform a search is limited, such as with real-time systems, so long as a small number of increments typically converges on a good solution (the optimal solution or a close approximation).

At the other extreme, bubble sort can be viewed as a hill climbing algorithm (every adjacent element exchange decreases the number of disordered element pairs), yet this approach is far from efficient for even modest N, as the number of exchanges required grows quadratically.

Hill climbing is an anytime algorithm: it can return a valid solution even if it's interrupted at any time before it ends.

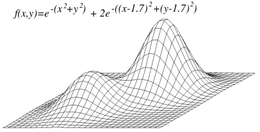

Hill climbing attempts to maximize (or minimize) a target function

is accepted, and the process continues until no change can be found to improve the value of

Both forms fail if there is no closer node, which may happen if there are local maxima in the search space which are not solutions.

Steepest ascent hill climbing is similar to best-first search, which tries all possible extensions of the current path instead of only one.

[2] Stochastic hill climbing does not examine all neighbors before deciding how to move.

Random-restart hill climbing is a surprisingly effective algorithm in many cases.

It turns out that it is often better to spend CPU time exploring the space, than carefully optimizing from an initial condition.

However, as many functions are not convex hill climbing may often fail to reach a global maximum.

Other local search algorithms try to overcome this problem such as stochastic hill climbing, random walks and simulated annealing.

Ridges are a challenging problem for hill climbers that optimize in continuous spaces.

Because hill climbers only adjust one element in the vector at a time, each step will move in an axis-aligned direction.

If the target function creates a narrow ridge that ascends in a non-axis-aligned direction (or if the goal is to minimize, a narrow alley that descends in a non-axis-aligned direction), then the hill climber can only ascend the ridge (or descend the alley) by zig-zagging.

If the sides of the ridge (or alley) are very steep, then the hill climber may be forced to take very tiny steps as it zig-zags toward a better position.

By contrast, gradient descent methods can move in any direction that the ridge or alley may ascend or descend.