Metaheuristic

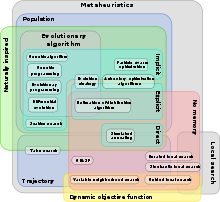

In computer science and mathematical optimization, a metaheuristic is a higher-level procedure or heuristic designed to find, generate, tune, or select a heuristic (partial search algorithm) that may provide a sufficiently good solution to an optimization problem or a machine learning problem, especially with incomplete or imperfect information or limited computation capacity.

[1][5][6] Their use is always of interest when exact or other (approximate) methods are not available or are not expedient, either because the calculation time is too long or because, for example, the solution provided is too imprecise.

[4] Many metaheuristics implement some form of stochastic optimization, so that the solution found is dependent on the set of random variables generated.

[14] Most literature on metaheuristics is experimental in nature, describing empirical results based on computer experiments with the algorithms.

Many metaheuristic ideas were proposed to improve local search heuristic in order to find better solutions.

On the other hand, Memetic algorithms[30] represent the synergy of evolutionary or any population-based approach with separate individual learning or local improvement procedures for problem search.

A large number of more recent metaphor-inspired metaheuristics have started to attract criticism in the research community for hiding their lack of novelty behind an elaborate metaphor.

[16][17][25][34] As a result, a number of renowned scientists of the field have proposed a research agenda for the standardization of metaheuristics in order to make them more comparable, among other things.

[5][46] In practice, restrictions often have to be observed, e.g. by limiting the permissible sequence of work steps of a job through predefined workflows[47] and/or with regard to resource utilisation, e.g. in the form of smoothing the energy demand.

[55][56][57] An example of the mixture of combinatorial and continuous optimization is the planning of favourable motion paths for industrial robots.

[58][59] A MOF can be defined as ‘‘a set of software tools that provide a correct and reusable implementation of a set of metaheuristics, and the basic mechanisms to accelerate the implementation of its partner subordinate heuristics (possibly including solution encodings and technique-specific operators), which are necessary to solve a particular problem instance using techniques provided’’.

The following list of 33 MOFs is compared and evaluated in detail in:[60] Comet, EvA2, evolvica, Evolutionary::Algorithm, GAPlayground, jaga, JCLEC, JGAP, jMetal, n-genes, Open Beagle, Opt4j, ParadisEO/EO, Pisa, Watchmaker, FOM, Hypercube, HotFrame, Templar, EasyLocal, iOpt, OptQuest, JDEAL, Optimization Algorithm Toolkit, HeuristicLab, MAFRA, Localizer, GALIB, DREAM, Discropt, MALLBA, MAGMA, and UOF.

There have been a number of publications on the support of parallel implementations, which was missing in this comparative study, particularly from the late 10s onwards.