Criss-cross algorithm

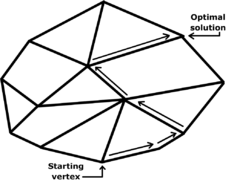

The simplex algorithm first finds a (primal-) feasible basis by solving a "phase-one problem"; in "phase two", the simplex algorithm pivots between a sequence of basic feasible solutions so that the objective function is non-decreasing with each pivot, terminating with an optimal solution (also finally finding a "dual feasible" solution).

[12] Bland's rule uses only signs of coefficients rather than their (real-number) order when deciding eligible pivots.

Bland's rule selects an entering variables by comparing values of reduced costs, using the real-number ordering of the eligible pivots.

[3][11] The criss-cross algorithm has been applied to furnish constructive proofs of basic results in linear algebra, such as the lemma of Farkas.

For example, a generalization of Gaussian elimination called Buchberger's algorithm has for its complexity an exponential function of the problem data (the degree of the polynomials and the number of variables of the multivariate polynomials).

Terlaky's criss-cross algorithm visits all the 2D corners of a (perturbed) cube in dimension D, according to a paper of Roos; Roos's paper modifies the Klee–Minty construction of a cube on which the simplex algorithm takes 2D steps.

There are variants of the criss-cross algorithm for linear programming, for quadratic programming, and for the linear-complementarity problem with "sufficient matrices";[3][6][16][17][18][19] conversely, for linear complementarity problems, the criss-cross algorithm terminates finitely only if the matrix is a sufficient matrix.

[21] Avis and Fukuda presented an algorithm which finds the v vertices of a polyhedron defined by a nondegenerate system of n linear inequalities in D dimensions (or, dually, the v facets of the convex hull of n points in D dimensions, where each facet contains exactly D given points) in time O(nDv) and O(nD) space.

[17][23] Indeed, Bland's pivoting rule was based on his previous papers on oriented-matroid theory.

[17] The criss-cross algorithm and its proof of finite termination can be simply stated and readily extend the setting of oriented matroids.

The partially combinatorial simplex algorithm of Bland cycles on some (nonrealizable) oriented matroids.

Researchers have extended the criss-cross algorithm for many optimization-problems, including linear-fractional programming.

PDF reprint.Rockafellar was influenced by the earlier studies of Albert W. Tucker and George J. Minty.

Tucker and Minty had studied the sign patterns of the matrices arising through the pivoting operations of Dantzig's simplex algorithm.