History of statistics

Computers then produce simple, accurate summaries, and allow more tedious analyses, such as those that require inverting a large matrix or performing hundreds of steps of iteration, that would never be attempted by hand.

Applications arose early in demography and economics; large areas of micro- and macro-economics today are "statistics" with an emphasis on time-series analyses.

With its emphasis on learning from data and making best predictions, statistics also has been shaped by areas of academic research including psychological testing, medicine and epidemiology.

The term statistics is ultimately derived from the Neo-Latin statisticum collegium ("council of state") and the Italian word statista ("statesman" or "politician").

The Trial of the Pyx is a test of the purity of the coinage of the Royal Mint which has been held on a regular basis since the 12th century.

Although the original scope of statistics was limited to data useful for governance, the approach was extended to many fields of a scientific or commercial nature during the 19th century.

The mathematical foundations for the subject drew heavily on the new probability theory, pioneered in the 16th and 17th centuries by Gerolamo Cardano, Pierre de Fermat and Blaise Pascal.

Jakob Bernoulli's Ars Conjectandi (posthumous, 1713) and Abraham de Moivre's The Doctrine of Chances (1718) treated the subject as a branch of mathematics.

Roger Joseph Boscovich in 1755, based on his work on the shape of the earth, proposed in his book De Litteraria expeditione per pontificiam ditionem ad dimetiendos duos meridiani gradus a PP.

In 1763 Richard Price transmitted to the Royal Society Thomas Bayes' proof of a rule for using a binomial distribution to calculate a posterior probability on a prior event.
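As a rough illustration of this kind of calculation, the sketch below computes the posterior probability that an unknown success probability p lies in an interval, given k successes in n binomial trials. It assumes a uniform prior on p and uses a simple midpoint-rule integration; the function names and the numerical method are choices made for this example, not taken from the source.

```python
from math import comb


def binomial_likelihood(p, k, n):
    """Probability of exactly k successes in n trials with success chance p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def posterior_interval_probability(k, n, a, b, steps=10_000):
    """P(a < p < b | k successes in n trials), assuming a uniform prior on p.

    Both integrals are approximated with the midpoint rule (an assumption
    of this sketch; any quadrature method would do).
    """

    def integrate(lo, hi):
        width = (hi - lo) / steps
        total = sum(
            binomial_likelihood(lo + (i + 0.5) * width, k, n)
            for i in range(steps)
        )
        return total * width

    # Bayes' rule: likelihood mass inside (a, b) over the total mass on (0, 1).
    return integrate(a, b) / integrate(0.0, 1.0)


if __name__ == "__main__":
    # Hypothetical data: 7 successes in 10 trials.
    print(posterior_interval_probability(7, 10, 0.5, 0.9))
```

With a uniform prior the normalising integral does not depend on the prior at all, which is why only the binomial likelihood appears in the code.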

Laplace gave (1781) a formula for the law of facility of error (a term due to Joseph Louis Lagrange, 1774), but one which led to unmanageable equations.

Laplace, in an investigation of the motions of Saturn and Jupiter in 1787, generalized Mayer's method by using different linear combinations of a single group of equations.

The method of least squares, which was used to minimize errors in data measurement, was published independently by Adrien-Marie Legendre (1805), Robert Adrain (1808), and Carl Friedrich Gauss (1809).
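In modern terms, the idea can be sketched for the simplest case of fitting a straight line y = a + b·x: choose a and b so that the sum of squared residuals is as small as possible. The code below is a minimal illustration using the standard closed-form solution; the function names and the sample data are hypothetical and are not drawn from the historical works.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) minimising the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of squares and cross-products about the means.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sum_squared_residuals(xs, ys, intercept, slope):
    """Sum of squared vertical distances between the data and the line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


if __name__ == "__main__":
    # Hypothetical measurements with small errors.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.1, 2.9, 5.2, 6.8, 9.1]
    a, b = fit_line(xs, ys)
    print(a, b, sum_squared_residuals(xs, ys, a, b))
```

Any other choice of intercept and slope gives a sum of squared residuals at least as large as the fitted one, which is the defining property of the method.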

The term probable error (der wahrscheinliche Fehler) – the median deviation from the mean – was introduced in 1815 by the German astronomer Friedrich Wilhelm Bessel.
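Taking the definition above literally, the probable error of a sample can be sketched as the median of the absolute deviations from the sample mean. The function name and the sample data below are hypothetical, and the code follows the wording of this article rather than any particular historical formula.

```python
from statistics import mean, median


def probable_error(observations):
    """Median absolute deviation of the observations from their mean."""
    centre = mean(observations)
    deviations = [abs(x - centre) for x in observations]
    return median(deviations)


if __name__ == "__main__":
    # Hypothetical repeated measurements of the same quantity.
    print(probable_error([1, 2, 3, 4, 10]))
```

By construction, about half of the observations lie within one probable error of the mean. For normally distributed errors this quantity is approximately 0.6745 times the standard deviation.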

Antoine Augustin Cournot in 1843 was the first to use the term median (valeur médiane) for the value that divides a probability distribution into two equal halves.

The "probable error" of a single observation was widely used and inspired early robust statistics (resistant to outliers: see Peirce's criterion).

In the 19th century, authors on statistical theory included Laplace, S. Lacroix (1816), Littrow (1833), Dedekind (1860), Helmert (1872), Laurent (1873), Liagre, Didion, De Morgan and Boole.

The first wave, at the turn of the century, was led by the work of Francis Galton and Karl Pearson, who transformed statistics into a rigorous mathematical discipline used for analysis, not just in science, but in industry and politics as well.

The final wave, which mainly saw the refinement and expansion of earlier developments, emerged from the collaborative work between Egon Pearson and Jerzy Neyman in the 1930s.

Galton's contributions to the field included introducing the concepts of standard deviation, correlation and regression, and applying these methods to the study of the variety of human characteristics – height, weight and eyelash length, among others.

Galton's publication of Natural Inheritance in 1889 sparked the interest of a brilliant mathematician, Karl Pearson,[29] then working at University College London, and he went on to found the discipline of mathematical statistics.

Egon Pearson (Karl's son) and Jerzy Neyman introduced the concepts of "Type II" error, power of a test and confidence intervals.
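To make these concepts concrete, the sketch below computes the power and the Type II error probability of a one-sided z-test of a mean with known standard deviation. The setting (a simple null value mu0 against a simple alternative mu1, known sigma) and all function and parameter names are assumptions made for this illustration; it uses `statistics.NormalDist` from the Python standard library.

```python
from math import sqrt
from statistics import NormalDist


def z_test_power(mu0, mu1, sigma, n, alpha=0.05):
    """Power of the one-sided z-test of H0: mu = mu0 against mu = mu1 > mu0.

    The test rejects H0 when the standardised sample mean exceeds the
    upper-alpha critical value of the standard normal distribution.
    """
    std_normal = NormalDist()
    critical_z = std_normal.inv_cdf(1 - alpha)
    # How far the alternative shifts the test statistic, in standard errors.
    shift = (mu1 - mu0) * sqrt(n) / sigma
    return 1 - std_normal.cdf(critical_z - shift)


def type_ii_error(mu0, mu1, sigma, n, alpha=0.05):
    """Probability of failing to reject H0 when the alternative is true."""
    return 1 - z_test_power(mu0, mu1, sigma, n, alpha)


if __name__ == "__main__":
    # Hypothetical values: detect a shift of 0.5 with sigma = 1 and n = 25.
    print(z_test_power(0.0, 0.5, 1.0, 25), type_ii_error(0.0, 0.5, 1.0, 25))
```

When the alternative coincides with the null value, the power reduces to the significance level alpha, and for a fixed alternative the power grows with the sample size.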

Similar one-factor-at-a-time (OFAT) experimentation was performed at the Rothamsted Research Station in the 1840s by Sir John Lawes to determine the optimal inorganic fertilizer for use on wheat.

Ronald Fisher began to pay particular attention to the labour involved in the necessary computations performed by hand, and developed methods that were as practical as they were founded in rigour.

Factorial design methodology showed how to estimate and correct for any random variation within the sample and also in the data collection procedures.

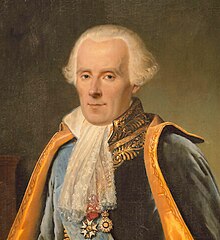

However, it was Pierre-Simon Laplace (1749–1827) who introduced (as principle VI) what is now called Bayes' theorem and applied it to celestial mechanics, medical statistics, reliability, and jurisprudence.

After the 1920s, inverse probability was largely supplanted by a collection of methods that were developed by Ronald A. Fisher, Jerzy Neyman and Egon Pearson.

In the 20th century, the ideas of Laplace were further developed in two different directions, giving rise to objective and subjective currents in Bayesian practice.

This idea was taken further by Bruno de Finetti in Italy (Fondamenti Logici del Ragionamento Probabilistico, 1930) and Frank Ramsey in Cambridge (The Foundations of Mathematics, 1931).

In 1957, Edwin Jaynes promoted the concept of maximum entropy for constructing priors, which is an important principle in the formulation of objective methods, mainly for discrete problems.