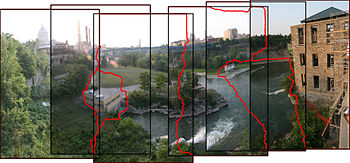

Image stitching

Algorithms that combine direct pixel-to-pixel comparisons with gradient descent (and other optimization techniques) can be used to estimate the alignment parameters that relate the pixel coordinates of one image to those of another.
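The sketch below makes "direct" alignment concrete under heavy simplifying assumptions. It estimates only a 2-D translation between two grayscale images by iteratively minimising the sum of squared pixel differences, using a Gauss-Newton (Lucas-Kanade-style) update rather than plain gradient descent. The function names, the bilinear resampler, the border margin and the iteration count are illustrative assumptions. Real stitchers typically estimate richer motion models than a pure shift.

```python
import numpy as np


def bilinear_sample(img, ys, xs):
    """Sample img at fractional (ys, xs) with bilinear interpolation (edges clamped)."""
    h, w = img.shape
    ys = np.clip(ys, 0, h - 1.001)
    xs = np.clip(xs, 0, w - 1.001)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    dy, dx = ys - y0, xs - x0
    return (img[y0, x0] * (1 - dy) * (1 - dx) + img[y0, x0 + 1] * (1 - dy) * dx
            + img[y0 + 1, x0] * dy * (1 - dx) + img[y0 + 1, x0 + 1] * dy * dx)


def estimate_translation(ref, moving, iters=50, margin=5):
    """Find t = (ty, tx) such that moving(y + ty, x + tx) ~= ref(y, x) by
    Gauss-Newton minimisation of the sum of squared pixel differences."""
    h, w = ref.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    inner = (slice(margin, h - margin), slice(margin, w - margin))  # ignore borders
    t = np.zeros(2)
    for _ in range(iters):
        warped = bilinear_sample(moving, ys + t[0], xs + t[1])
        gy, gx = np.gradient(warped)                 # image gradients of the warp
        r = (warped - ref)[inner].ravel()            # per-pixel residuals
        J = np.column_stack([gy[inner].ravel(), gx[inner].ravel()])
        step = np.linalg.lstsq(J, -r, rcond=None)[0]  # Gauss-Newton update
        t += step
        if np.linalg.norm(step) < 1e-8:
            break
    return t
```

Because the linearisation only holds for small motions, methods like this are usually run coarse-to-fine on an image pyramid when the displacement is large.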

A final compositing surface is also needed, onto which all of the aligned images are warped or projectively transformed and placed, together with algorithms that blend the overlapping images seamlessly, even in the presence of parallax, lens distortion, scene motion, and exposure differences.
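One of the simplest blending schemes is linear feathering. This is an assumption for illustration, not necessarily what any particular stitcher uses. Inside the overlap, the weight of one image ramps down linearly while the weight of the other ramps up. The sketch assumes two already-aligned images of equal height whose last and first `overlap` columns coincide. The name `feather_blend` is hypothetical.

```python
import numpy as np


def feather_blend(left, right, overlap):
    """Blend two aligned, equal-height images whose last/first `overlap`
    columns show the same scene content, using a linear weight ramp."""
    h, wl = left.shape[:2]
    wr = right.shape[1]
    out = np.zeros((h, wl + wr - overlap) + left.shape[2:], dtype=float)
    out[:, :wl - overlap] = left[:, :wl - overlap]   # left-only region
    out[:, wl:] = right[:, overlap:]                 # right-only region
    # Weight of the left image goes from 1 to 0 across the overlap.
    alpha = np.linspace(1.0, 0.0, overlap)
    alpha = alpha.reshape((1, overlap) + (1,) * (left.ndim - 2))  # broadcast over channels
    out[:, wl - overlap:wl] = alpha * left[:, wl - overlap:] + (1 - alpha) * right[:, :overlap]
    return out
```

Feathering hides small exposure steps but can produce ghosting where the images disagree (for example because of parallax or moving objects). Multi-band blending is a common refinement.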

Since the illumination in two views cannot be guaranteed to be identical, stitching two images could create a visible seam.
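A common first step against illumination seams is to scale one image so that its brightness matches its neighbour's in the shared region. The minimal sketch below computes a single multiplicative gain from mean intensities over the overlap. The function name and the single-gain model are illustrative assumptions. Practical stitchers usually estimate gains jointly across all overlapping pairs and still blend afterwards.

```python
import numpy as np


def gain_compensate(ref_overlap, img_overlap, img):
    """Scale `img` so that its mean intensity over the overlap matches the
    reference image's mean over the same region. Returns (scaled image, gain)."""
    gain = ref_overlap.mean() / img_overlap.mean()
    return img * gain, gain
```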

Other major issues to deal with are the presence of parallax, lens distortion, scene motion, and exposure differences.

Additionally, the aspect ratio of a panorama image needs to be taken into account to create a visually pleasing composite.

For panoramic stitching, the ideal set of images will have a reasonable amount of overlap (at least 15–30%) to overcome lens distortion and have enough detectable features.
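The overlap guideline translates directly into how far to rotate between shots. The sketch below makes several assumptions: a single horizontal row of frames, rotation about the lens's entrance pupil, and overlap measured as a fraction of the horizontal field of view. Under those assumptions, each step is `hfov * (1 - overlap)` and a full turn needs `ceil(360 / step)` frames. The function name and the example lens values are hypothetical.

```python
import math


def shots_for_full_turn(hfov_deg, overlap):
    """Frames needed for one 360-degree row, given the horizontal field of
    view (degrees) and the desired overlap fraction between neighbours."""
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    step = hfov_deg * (1 - overlap)  # rotation between consecutive shots
    return math.ceil(360 / step), step
```

For example, with a hypothetical 60° horizontal field of view and 30% overlap, the camera turns 42° per shot, and 9 frames close the circle.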

One of the first operators for interest point detection was developed by Hans P. Moravec in 1977 for his research on automatically navigating a robot through a cluttered environment.

Moravec needed it as a processing step to build interpretations of a robot's environment from image sequences.
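The Moravec operator scores each pixel by how much a small window around it changes when the window is shifted slightly. Flat regions change little in every direction, and edges do not change along their own direction. Only corner-like points change in all directions, so the score is the minimum sum of squared differences over the shifts. The minimal sketch below assumes a grayscale NumPy array, a 3×3 window and four shift directions. The function name `moravec` is illustrative, and the plain Python loops favour clarity over speed.

```python
import numpy as np


def moravec(img, window=3):
    """Moravec interest score: for each pixel, the minimum SSD between its
    window and the same window shifted by one pixel in four directions."""
    img = img.astype(float)
    h, w = img.shape
    r = window // 2
    shifts = [(0, 1), (1, 0), (1, 1), (1, -1)]
    score = np.zeros_like(img)
    # Stay far enough from the border that every shifted window fits.
    for y in range(r + 1, h - r - 1):
        for x in range(r + 1, w - r - 1):
            patch = img[y - r:y + r + 1, x - r:x + r + 1]
            ssds = []
            for dy, dx in shifts:
                shifted = img[y - r + dy:y + r + 1 + dy, x - r + dx:x + r + 1 + dx]
                ssds.append(((patch - shifted) ** 2).sum())
            score[y, x] = min(ssds)  # small if any shift leaves the window unchanged
    return score
```

Interest points are then typically taken as local maxima of the score above a threshold.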

Because only a small set of detected features, rather than every pixel, has to be matched, the search is more accurate and the comparison runs faster.
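Matching a small set of features can be sketched as a brute-force nearest-neighbour search over feature descriptors. The sketch also applies a distance-ratio test, which accepts a match only if the best candidate is clearly closer than the second best. The ratio test and the 0.8 threshold follow Lowe's SIFT heuristic and are assumptions here, not something this article specifies. The function name is illustrative, and the descriptors are assumed to be rows of NumPy arrays.

```python
import numpy as np


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs matching rows of desc_a to rows of desc_b.
    Requires at least two descriptors in desc_b."""
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    d = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(d):
        order = np.argsort(row)
        best, second = order[0], order[1]
        # Keep only unambiguous matches.
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches
```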

RANSAC (random sample consensus) is an iterative method for robust parameter estimation, used to fit mathematical models to sets of observed data points that may contain outliers.

The RANSAC algorithm has found many applications in computer vision, including the simultaneous solving of the correspondence problem and the estimation of the fundamental matrix related to a pair of stereo cameras.

The best model, that is, the homography producing the highest number of correct matches (inliers), is then chosen as the answer to the problem. Provided the ratio of outliers to data points is sufficiently low, RANSAC outputs a model that fits the data well.
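The RANSAC loop for homographies can be sketched as follows: repeatedly fit a homography to four randomly chosen correspondences, count how many of all correspondences it maps to within a pixel threshold, keep the model with the most inliers, and refit on those inliers. This is a minimal sketch under several assumptions. Correspondences are assumed to be already available as `(n, 2)` NumPy arrays, and the unnormalised direct linear transform (DLT) solver, 500 iterations and 2-pixel threshold are illustrative choices. The function names are hypothetical.

```python
import numpy as np


def fit_homography(src, dst):
    """Direct linear transform from >= 4 point pairs (arrays of shape (n, 2))."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)  # null vector of the system = homography entries
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map (n, 2) points through H using homogeneous coordinates."""
    ph = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]


def ransac_homography(src, dst, iters=500, thresh=2.0, seed=0):
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), 4, replace=False)    # minimal sample
        H = fit_homography(src[idx], dst[idx])
        err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
        inliers = err < thresh
        if inliers.sum() > best_inliers.sum():          # more consensus wins
            best_inliers = inliers
    # Refit on all inliers of the best model (needs at least 4 of them).
    return fit_homography(src[best_inliers], dst[best_inliers]), best_inliers
```

In practice the number of iterations is often chosen adaptively from the estimated inlier ratio, and coordinates are normalised before the DLT for numerical stability.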

Matthew Brown and David G. Lowe, in their paper ‘Automatic Panoramic Image Stitching using Invariant Features’, describe a straightening method that applies a global rotation such that the estimated up vector u is vertical (in the rendering frame), which effectively removes the wavy effect from output panoramas.
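The straightening step can be sketched as follows, under stated assumptions. Each camera rotation is taken to be a 3×3 camera-to-world matrix whose first column is the camera's horizontal (x) axis. Photographers rarely twist the camera about its optical axis, so these axes roughly span one plane, and u is taken as that plane's normal: the eigenvector of the sum of their outer products with the smallest eigenvalue. The rendering frame's vertical is assumed to be (0, 1, 0), and the function names are illustrative. This sketch reflects our reading of the idea, not the paper's code.

```python
import numpy as np


def estimate_up_vector(rotations):
    """Normal to the plane spanned by the cameras' x axes (sign is arbitrary)."""
    C = sum(np.outer(R[:, 0], R[:, 0]) for R in rotations)
    _, v = np.linalg.eigh(C)   # eigenvalues in ascending order
    return v[:, 0]             # eigenvector with the smallest eigenvalue


def straighten_rotation(rotations, target=(0.0, 1.0, 0.0)):
    """Global rotation that maps the estimated up vector onto `target`."""
    u = estimate_up_vector(rotations)
    t = np.asarray(target, dtype=float)
    t /= np.linalg.norm(t)
    if u @ t < 0:              # resolve the eigenvector's sign ambiguity
        u = -u
    v = np.cross(u, t)
    c = float(u @ t)
    K = np.array([[0, -v[2], v[1]], [v[2], 0, -v[0]], [-v[1], v[0], 0]])
    # Rodrigues' formula for the rotation taking unit vector u onto t.
    return np.eye(3) + K + K @ K / (1.0 + c)
```

Applying the returned rotation to every camera leaves their relative alignment unchanged and only reorients the whole panorama.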

This process is similar to image rectification and, more generally, to software correction of optical distortions in single photographs.

In rectilinear projection, the stitched image is viewed on a two-dimensional plane intersecting the panosphere at a single point.

Wide rectilinear views (around 120° or so) start to exhibit severe distortion near the image borders.

[14] Stereographic projection or fisheye projection can be used to form a little planet panorama by pointing the virtual camera straight down and setting the field of view large enough to show the whole ground and some of the areas above it; pointing the virtual camera upwards creates a tunnel effect.
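The different behaviour of these projections follows from their radial mapping functions. For a ray at angle θ from the view axis, rectilinear projection places it at r = f·tan θ, which grows without bound as θ approaches 90°. Stereographic projection places it at r = 2f·tan(θ/2), which stays finite for any θ below 180°; this is why it can show the whole ground in a little planet view. The sketch compares the local radial stretch dr/dθ relative to the image centre. The function names are illustrative.

```python
import math


def rectilinear_radius(theta_deg, f=1.0):
    """Distance from the image centre for a ray theta degrees off-axis: f tan(theta)."""
    return f * math.tan(math.radians(theta_deg))


def stereographic_radius(theta_deg, f=1.0):
    """Stereographic mapping: 2 f tan(theta / 2)."""
    return 2 * f * math.tan(math.radians(theta_deg) / 2)


def rectilinear_stretch(theta_deg):
    """Radial magnification relative to the centre: 1 / cos^2(theta)."""
    return 1 / math.cos(math.radians(theta_deg)) ** 2


def stereographic_stretch(theta_deg):
    """Radial magnification relative to the centre: 1 / cos^2(theta / 2)."""
    return 1 / math.cos(math.radians(theta_deg) / 2) ** 2
```

At the border of a 120° rectilinear view (θ = 60°), scene content is stretched radially by a factor of 4 compared with the centre. The same angle in stereographic projection is stretched only by about 1.33.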

The use of images not taken from the same place (on a pivot about the entrance pupil of the camera)[15] can lead to parallax errors in the final product.
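The size of the parallax error can be estimated with a pinhole camera model, under the simplifying assumption that the misplaced pivot amounts to a pure sideways shift of the projection centre by a baseline b. A point at depth Z then moves in the image by f·b/Z pixels, where f is the focal length in pixels. A near point and a far point therefore move relative to each other by f·b·(1/Z_near − 1/Z_far). The function name and the numbers in the example are hypothetical.

```python
import math


def parallax_px(focal_px, baseline_m, z_near_m, z_far_m=math.inf):
    """Relative image shift (pixels) between a near and a far point when the
    projection centre translates sideways by baseline_m (pinhole model)."""
    return focal_px * baseline_m * (1 / z_near_m - 1 / z_far_m)
```

With a focal length of 1000 px and a 5 cm offset, an object 2 m away shifts about 25 px against a distant background. That is far more than blending can hide, which is why rotating about the entrance pupil matters most for scenes with nearby objects.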

When the captured scene features rapid movement or dynamic motion, artifacts may occur as a result of time differences between the image segments.

Dedicated programs include Autostitch, Hugin, PTGui, Panorama Tools, Microsoft Research Image Composite Editor and CleVR Stitcher.

Many other programs can also stitch multiple images; a popular example is Adobe Systems' Photoshop, which includes a tool known as Photomerge and, in more recent versions, Auto-Blend.
