Information Age

[8] Humans have manufactured tools for counting and calculating since ancient times, such as the abacus, astrolabe, equatorium, and mechanical timekeeping devices.

In the 1880s, Herman Hollerith developed electromechanical tabulating and calculating devices using punch cards and unit record equipment, which became widespread in business and government.

The construction of problem-specific analog computers continued in the late 1940s and beyond, with FERMIAC for neutron transport, Project Cyclone for various military applications, and the Phillips Machine for economic modeling.

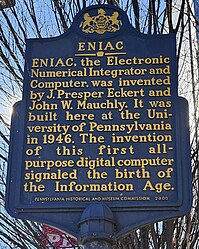

Building on the complexity of the Z1 and Z2, German inventor Konrad Zuse used electromechanical systems to complete the Z3 in 1941, the world's first working programmable, fully automatic digital computer.

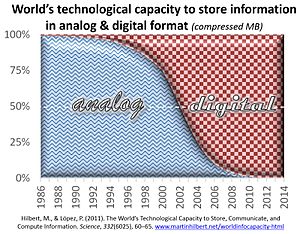

Claude Shannon, a Bell Labs mathematician, is credited with laying the foundations of digitalization in his pioneering 1948 article, A Mathematical Theory of Communication.

The T1 format carried 24 pulse-code modulated, time-division multiplexed speech signals each encoded in 64 kbit/s streams, leaving 8 kbit/s of framing information which facilitated the synchronization and demultiplexing at the receiver.
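The arithmetic behind these figures can be checked with a short sketch. It assumes the standard T1 parameters, which are not stated in the text above: 8-bit samples taken 8,000 times per second on each channel, and one framing bit per frame. The function name `t1_rates` is hypothetical and used only for illustration.

```python
# Rough sketch of the T1 rate arithmetic.
# Assumptions (not stated in the article text): 8-bit PCM samples,
# 8,000 frames per second, and 1 framing bit per frame.
CHANNELS = 24
BITS_PER_SAMPLE = 8
FRAMES_PER_SECOND = 8000
FRAMING_BITS_PER_FRAME = 1


def t1_rates():
    """Return the per-channel, payload, framing, and total rates in bit/s."""
    channel_rate = BITS_PER_SAMPLE * FRAMES_PER_SECOND        # one speech channel
    payload_rate = CHANNELS * channel_rate                    # all 24 channels
    framing_rate = FRAMING_BITS_PER_FRAME * FRAMES_PER_SECOND  # synchronization overhead
    bits_per_frame = CHANNELS * BITS_PER_SAMPLE + FRAMING_BITS_PER_FRAME
    return {
        "channel_rate": channel_rate,
        "payload_rate": payload_rate,
        "framing_rate": framing_rate,
        "bits_per_frame": bits_per_frame,
        "line_rate": payload_rate + framing_rate,
    }


if __name__ == "__main__":
    for name, value in t1_rates().items():
        print(f"{name}: {value}")
```

Under these assumptions each channel runs at 64 kbit/s, the 24 channels together carry 1,536 kbit/s, and the 8 kbit/s of framing brings the line rate to 1,544 kbit/s.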

[23] The first such image sensor was the charge-coupled device, developed by Willard S. Boyle and George E. Smith at Bell Labs in 1969,[24] based on MOS capacitor technology.

Automated teller machines, industrial robots, CGI in film and television, electronic music, bulletin board systems, and video games all fueled what became the zeitgeist of the 1980s.

However, throughout the 1990s, "getting online" entailed complicated configuration, and dial-up was the only connection type affordable to individual users; the present-day mass Internet culture was not possible.

[61] He advocated replacing bulky, decaying printed works with miniaturized microform analog photographs, which could be duplicated on demand for library patrons and other institutions.

[52] An article featured in the journal Trends in Ecology and Evolution in 2016 reported that:[53] Digital technology has vastly exceeded the cognitive capacity of any single human being and has done so a decade earlier than predicted.

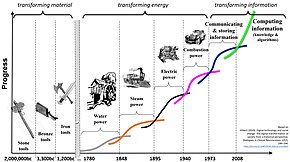

The Scientific Age began in the period between Copernicus's 1543 publication of the theory that the planets orbit the Sun and Newton's publication of the laws of motion and gravity in Principia in 1687.

The invention of machines such as the mechanical textile weaver by Edmund Cartwright, the rotating shaft steam engine by James Watt and the cotton gin by Eli Whitney, along with processes for mass manufacturing, came to serve the needs of a growing global population.

The Industrial Age harnessed steam and waterpower to reduce the dependence on animal and human physical labor as the primary means of production.

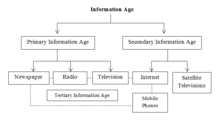

However, what dramatically accelerated the pace of the Information Age’s adoption, as compared to previous ages, was the speed with which knowledge could be transferred, pervading the entire human family in a few short decades.

It started out with the proliferation of communication and stored data and has now entered the age of algorithms, which aims at creating automated processes to convert the existing information into actionable knowledge.

[88] Veneris (1984, 1990) explored the theoretical, economic and regional aspects of the informational revolution and developed a system dynamics simulation computer model.

Thus, individuals who lose their jobs may be pressed to move up into more indispensable professions (e.g. engineers, doctors, lawyers, teachers, professors, scientists, executives, journalists, consultants), in which they are able to compete successfully in the world market and receive (relatively) high wages.

Along with automation, jobs traditionally associated with the middle class (e.g. assembly line, data processing, management, and supervision) have also begun to disappear as a result of outsourcing.

With the advent of the Information Age and improvements in communication, this is no longer the case, as workers must now compete in a global job market, whereby wages are less dependent on the success or failure of individual economies.

[95] In effectuating a globalized workforce, the internet has also allowed for increased opportunity in developing countries, making it possible for workers in such places to provide in-person services and thereby compete directly with their counterparts in other nations.

[96] The Information Age has affected the workforce in that automation and computerization have resulted in higher productivity coupled with net job loss in manufacturing.

[105] Before the advent of electronics, mechanical computers, like the Analytical Engine in 1837, were designed to provide routine mathematical calculation and simple decision-making capabilities.

The first commercial single-chip microprocessor, the Intel 4004, launched in 1971. It was developed by Federico Faggin using his silicon-gate MOS IC technology, along with Marcian Hoff, Masatoshi Shima and Stan Mazor.

Early information theory and Hamming codes were developed about 1950, but awaited technical innovations in data transmission and storage to be put to full use.

[115][116] In 1967, Dawon Kahng and Simon Sze at Bell Labs described how the floating gate of an MOS semiconductor device could be used for the cell of a reprogrammable ROM.

[120][117] Copper wire cables transmitting digital data, which connected computer terminals and peripherals to mainframes, and special message-sharing systems leading to email were first developed in the 1960s.

It had color graphics, a full QWERTY keyboard, and internal slots for expansion, all mounted in a high-quality, streamlined plastic case.

Optical communication provides the transmission backbone for the telecommunications and computer networks that underlie the Internet, the foundation for the Digital Revolution and Information Age.

The Information Age's emphasis on speed over expertise contributes to a "superficial culture in which even the elite will openly disparage as pointless our main repositories for the very best that has been thought".