Lasso (statistics)

The lasso method assumes that the coefficients of the linear model are sparse, meaning that few of them are non-zero.

These include its relationship to ridge regression and best subset selection and the connections between lasso coefficient estimates and so-called soft thresholding.
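The link to soft thresholding and ridge regression can be sketched for the special case of an orthonormal design, where (under that assumption) each lasso coefficient is a soft-thresholded OLS coefficient and each ridge coefficient is a rescaled one. The function names, the threshold `t`, and the example coefficients below are illustrative assumptions; how `t` relates to the regularization parameter depends on how the objective is scaled.

```python
def soft_threshold(b_ols, t):
    """Move b_ols toward zero by t; return exactly 0 when |b_ols| <= t."""
    if b_ols > t:
        return b_ols - t
    if b_ols < -t:
        return b_ols + t
    return 0.0


def ridge_shrink(b_ols, t):
    """Ridge-style proportional shrinkage: rescales b_ols but never zeroes it."""
    return b_ols / (1.0 + t)


ols = [3.0, -0.5, 0.8, -2.0]  # hypothetical OLS coefficients
t = 1.0                       # hypothetical shrinkage amount

lasso_like = [soft_threshold(b, t) for b in ols]  # small entries become exactly 0
ridge_like = [ridge_shrink(b, t) for b in ols]    # every entry stays non-zero
```

In this sketch the two small coefficients are removed by soft thresholding but only halved by the ridge-style rescaling, which is the qualitative difference between the two estimators.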

[3][4] Lasso's ability to perform subset selection relies on the form of the constraint and can be interpreted in several ways, including in terms of geometry, Bayesian statistics, and convex analysis.

Lasso was introduced in order to improve the prediction accuracy and interpretability of regression models.

Statistician Robert Tibshirani independently rediscovered and popularized it in 1996, based on Breiman's nonnegative garrote.

That approach only improves prediction accuracy in certain cases, such as when only a few covariates have a strong relationship with the outcome.

fixed, gives a new solution, so the lasso objective function then has a continuum of valid minimizers.

[9] Several variants of the lasso, including elastic net regularization, have been designed to address this shortcoming.

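In two dimensions, the region defined by the $\ell^1$ norm is a square rotated so that its corners lie on the axes (in general a cross-polytope), while the region defined by the $\ell^2$ norm is a circle (in general an n-sphere).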

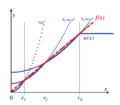

As seen in the figure, a convex object that lies tangent to the boundary, such as the line shown, is likely to encounter a corner (or a higher-dimensional equivalent) of the cross-polytope, for which some components of the coefficient vector are exactly zero.

We can then define the Lagrangian as a tradeoff between the in-sample accuracy of the data-optimized solutions and the simplicity of sticking to the hypothesized values.

measures in percentage terms the minimal amount of influence of the hypothesized value relative to the data-optimized OLS solution.

This provides an alternative explanation of why lasso tends to set some coefficients to zero, while ridge regression does not.

Lasso variants have been created in order to remedy limitations of the original technique and to make the method more useful for particular problems.

Elastic net regularization adds a ridge regression-like penalty that improves performance when the number of predictors is larger than the sample size, allows the method to select strongly correlated variables together, and improves overall prediction accuracy.

[15][16] Fused lasso can account for the spatial or temporal characteristics of a problem, resulting in estimates that better match system structure.

Determining the optimal value for the regularization parameter is an important part of ensuring that the model performs well; it is typically chosen using cross-validation.
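A minimal sketch of choosing the regularization parameter by cross-validation is given below. It assumes the unscaled objective 0.5·‖y − Xb‖² + lam·‖b‖₁, a simple cyclic coordinate-descent solver, synthetic data, and a hand-picked grid of candidate values; none of these choices come from the text, and all function names are hypothetical.

```python
import random


def soft_threshold(z, t):
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_cd(X, y, lam, sweeps=200):
    """Cyclic coordinate descent for 0.5*||y - X b||^2 + lam*||b||_1 (X as rows)."""
    p = len(X[0])
    beta = [0.0] * p
    resid = list(y)  # residual y - X beta, kept up to date
    for _ in range(sweeps):
        for j in range(p):
            col = [row[j] for row in X]
            norm_sq = sum(c * c for c in col)
            if norm_sq == 0.0:
                continue
            # correlation of column j with the residual that ignores coordinate j
            rho = sum(c * (r + c * beta[j]) for c, r in zip(col, resid))
            new = soft_threshold(rho, lam) / norm_sq
            resid = [r - c * (new - beta[j]) for c, r in zip(col, resid)]
            beta[j] = new
    return beta


def cv_error(X, y, lam, k=4):
    """Mean squared prediction error over k interleaved folds."""
    n = len(X)
    total = 0.0
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        held_out = [i for i in range(n) if i % k == fold]
        beta = lasso_cd([X[i] for i in train], [y[i] for i in train], lam)
        for i in held_out:
            pred = sum(b * v for b, v in zip(beta, X[i]))
            total += (y[i] - pred) ** 2
    return total / n


# Synthetic data: only the first two of five covariates matter (an assumption).
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(5)] for _ in range(40)]
y = [2.0 * row[0] - 1.5 * row[1] + rng.gauss(0, 0.5) for row in X]

grid = [0.0, 0.5, 2.0, 8.0, 32.0, 128.0]  # hypothetical candidate values
errors = {lam: cv_error(X, y, lam) for lam in grid}
best_lam = min(errors, key=errors.get)
```

The candidate with the smallest held-out error is kept; in practice the grid is usually denser and log-spaced, and the final model is refit on all the data at `best_lam`.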

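Returning to the general case, the fact that the penalty function is now strictly convex means that if two covariates are identical, their estimated coefficients are equal, which is a change from lasso.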

This phenomenon, in which strongly correlated covariates have similar regression coefficients, is referred to as the grouping effect.
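The grouping effect can be illustrated with a small coordinate-descent sketch on two identical covariates. The objective scaling (0.5·‖y − Xb‖² + lam1·‖b‖₁ + 0.5·lam2·‖b‖²), the solver, the toy data, and all names are assumptions made for illustration, not the formulation of any particular paper or library.

```python
def soft_threshold(z, t):
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def elastic_net_cd(columns, y, lam1, lam2, sweeps=200):
    """Cyclic coordinate descent for
    0.5*||y - X b||^2 + lam1*||b||_1 + 0.5*lam2*||b||^2,
    with X given as a list of columns. lam2 = 0 gives the plain lasso."""
    p = len(columns)
    beta = [0.0] * p
    for _ in range(sweeps):
        for j in range(p):
            rho = 0.0
            for i, yi in enumerate(y):
                # fit from every coordinate except j
                others = sum(columns[k][i] * beta[k] for k in range(p) if k != j)
                rho += columns[j][i] * (yi - others)
            norm_sq = sum(v * v for v in columns[j])
            beta[j] = soft_threshold(rho, lam1) / (norm_sq + lam2)
    return beta


x = [1.0, 2.0, 3.0, 4.0]
columns = [x, list(x)]          # two perfectly correlated covariates
y = [2.0, 4.0, 6.0, 8.0]

lasso_fit = elastic_net_cd(columns, y, lam1=1.0, lam2=0.0)
enet_fit = elastic_net_cd(columns, y, lam1=1.0, lam2=10.0)
# lasso_fit puts essentially all weight on the first copy;
# enet_fit converges to equal coefficients on both copies.
```

With `lam2 = 0` any split of the total weight between the two copies gives the same objective value, so the result depends on the update order; the ridge-like term removes that ambiguity and forces the two coefficients to agree.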

[9] In addition, selecting only one from each group typically results in increased prediction error, since the model is less robust (which is why ridge regression often outperforms lasso).

In this case, group lasso can ensure that all the variables encoding the categorical covariate are included or excluded together.
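The all-in or all-out behaviour can be seen in the block soft-thresholding operator, the proximal operator of the Euclidean norm that many group-lasso solvers apply to one group of coefficients at a time. This is a sketch under that assumption; the function name, threshold, and example vectors are hypothetical.

```python
import math


def group_soft_threshold(z, t):
    """Shrink the whole group z toward zero by t in Euclidean norm.

    Returns an all-zero group when ||z||_2 <= t; otherwise rescales every
    entry by the same factor, so the group enters or leaves together."""
    norm = math.sqrt(sum(v * v for v in z))
    if norm <= t:
        return [0.0] * len(z)
    scale = 1.0 - t / norm
    return [scale * v for v in z]


# Two dummy variables encoding one categorical covariate (illustrative values).
weak_group = group_soft_threshold([0.3, -0.4], 1.0)   # norm 0.5: dropped as a unit
strong_group = group_soft_threshold([3.0, 4.0], 1.0)  # norm 5: kept as a unit
```

Because the test is on the norm of the whole group rather than on each entry, no dummy variable of a retained covariate is zeroed on its own.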

[18] In some cases, the phenomenon under study may have important spatial or temporal structure that must be considered during analysis, such as time series or image-based data.
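One way to see how such structure can be encoded is to compare the ordinary lasso penalty with a penalty on differences between neighbouring coefficients, as used by the fused lasso. The sketch below assumes the coefficients are ordered in time or space; the function names and example vectors are illustrative.

```python
def l1_penalty(beta):
    """Sum of absolute coefficients (the ordinary lasso penalty)."""
    return sum(abs(b) for b in beta)


def fusion_penalty(beta):
    """Sum of absolute differences between neighbouring coefficients."""
    return sum(abs(b - a) for a, b in zip(beta, beta[1:]))


piecewise_constant = [0.0, 0.0, 2.0, 2.0, 2.0, 0.0]
jagged = [0.0, 2.0, 0.0, 2.0, 0.0, 2.0]

same_l1 = l1_penalty(piecewise_constant) == l1_penalty(jagged)
smooth_cost = fusion_penalty(piecewise_constant)
jagged_cost = fusion_penalty(jagged)
```

Both vectors have the same ordinary lasso penalty, but the difference penalty is much smaller for the piecewise-constant one, so a method that penalizes differences favours estimates whose neighbouring coefficients agree.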

The efficient algorithm for minimization is based on piece-wise quadratic approximation of subquadratic growth (PQSQ).

The procedure involves running lasso on each of several random subsets of the data and collating the results.

LARS is a method that is closely tied to lasso models, and in many cases allows them to be fit efficiently, though it may not perform well in all circumstances.

A good value of the regularization parameter is essential to the performance of lasso, since it controls the strength of shrinkage and variable selection, which, in moderation, can improve both prediction accuracy and interpretability.

However, if the regularization becomes too strong, important variables may be omitted and coefficients may be shrunk excessively, which can harm both predictive capacity and inference.

[38] An information criterion selects the estimator's regularization parameter by maximizing a model's in-sample accuracy while penalizing its effective number of parameters/degrees of freedom.

Zou et al. proposed measuring the effective degrees of freedom by counting the number of parameters that deviate from zero.
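As a minimal sketch, the non-zero count can be plugged into an information criterion to compare fits obtained at different regularization strengths. The Gaussian form of the BIC used here (up to an additive constant), the tolerance, and the candidate fits are all assumptions chosen for illustration; the text does not single out a specific criterion.

```python
import math


def effective_df(beta, tol=1e-12):
    """Degrees of freedom taken as the number of non-zero coefficients."""
    return sum(1 for b in beta if abs(b) > tol)


def bic(rss, n, df):
    """Gaussian BIC up to a constant: fit term plus a penalty on df."""
    return n * math.log(rss / n) + df * math.log(n)


n = 50  # hypothetical sample size
# Hypothetical fits at weak, moderate, and strong regularization:
# (coefficient vector, in-sample residual sum of squares).
fits = {
    "weak": ([1.9, -1.4, 0.1, 0.0, -0.05], 12.0),
    "moderate": ([1.8, -1.3, 0.0, 0.0, 0.0], 12.5),
    "strong": ([0.9, 0.0, 0.0, 0.0, 0.0], 40.0),
}

scores = {name: bic(rss, n, effective_df(beta)) for name, (beta, rss) in fits.items()}
chosen = min(scores, key=scores.get)
```

The weakly regularized fit has the lowest residual sum of squares, but its two extra non-zero coefficients cost more than they gain, so the criterion prefers the moderate fit in this example.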

As an alternative, the relative simplicity measure defined above can be used to count the effective number of parameters.