No free lunch in search and optimization

Under certain probabilistic assumptions about the problems encountered, the outputs of all procedures solving a particular type of problem are statistically identical.

A colourful way of describing such a circumstance, introduced by David Wolpert and William G. Macready in connection with the problems of search[1] and optimization,[2] is to say that there is no free lunch.

Wolpert had previously derived no free lunch theorems for machine learning (statistical inference).

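In the metaphor, each "restaurant" (problem-solving procedure) has a "menu" associating each "lunch plate" (problem) with a "price" (the performance of the procedure in solving the problem), and the menus differ only in that the prices are shuffled from one restaurant to the next. For an omnivore who is as likely to order each plate as any other, the average cost of lunch does not depend on the choice of restaurant.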
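The metaphor reduces to a small calculation. In this sketch the three plate prices, and a diner who always orders the same plate, are hypothetical; each permutation of the price list plays the role of one restaurant's menu.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical prices for three plates. Every restaurant charges the same
# prices, but shuffled among the plates: one restaurant per permutation.
PRICES = (4, 7, 12)
restaurants = list(permutations(PRICES))


def average_cost(menu, order_probs):
    """Expected cost of lunch at a restaurant whose menu lists one price per plate."""
    return sum(p * price for p, price in zip(order_probs, menu))


# Omnivore: orders each plate with equal probability.
omnivore = [Fraction(1, 3)] * 3
omnivore_costs = {average_cost(menu, omnivore) for menu in restaurants}

# Hypothetical diner with a fixed taste: always orders plate 0.
picky = [Fraction(1), Fraction(0), Fraction(0)]
picky_costs = sorted(average_cost(menu, picky) for menu in restaurants)

print(omnivore_costs)  # one distinct value (23/3): the choice of restaurant is irrelevant
print(picky_costs)     # ranges from 4 to 12: knowing the menus now pays off
```

The omnivore's expected cost is the same at all six restaurants, while the diner with a fixed order can save money only by knowing which menu prices that plate cheaply.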

Consequently, improving performance in problem solving hinges on using prior information to match procedures to problems.

There is no free lunch in search if and only if the distribution on objective functions is invariant under permutation of the space of candidate solutions.
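The condition can be tested mechanically on a toy candidate space. In this sketch, a distribution on objective functions is a Python dict from value tuples to probabilities (an encoding chosen here for illustration), and `is_permutation_invariant` is a hypothetical helper that checks P(f) = P(f ∘ σ) for every permutation σ of the candidates. The examples show that invariance is weaker than uniformity: a distribution that weights functions by their number of zeros is still invariant, whereas one concentrated on a single function is not.

```python
from fractions import Fraction
from itertools import permutations, product


def is_permutation_invariant(dist, n_points):
    """True if P(f) == P(f o sigma) for every f and every permutation sigma
    of the candidate space {0, ..., n_points - 1}.

    `dist` maps objective functions, encoded as tuples (f[x] is the value of
    candidate x), to probabilities; functions absent from `dist` have probability 0.
    """
    for f, p in dist.items():
        for sigma in permutations(range(n_points)):
            shuffled = tuple(f[sigma[x]] for x in range(n_points))
            if dist.get(shuffled, 0) != p:
                return False
    return True


N_POINTS = 3
all_functions = list(product((0, 1), repeat=N_POINTS))

# Uniform over all functions.
uniform = {f: Fraction(1, len(all_functions)) for f in all_functions}

# Non-uniform but still invariant: weight depends only on how many zeros f has.
weights = {f: Fraction(1 + f.count(0)) for f in all_functions}
total = sum(weights.values())
by_zero_count = {f: w / total for f, w in weights.items()}

# All mass on one function: shuffling it yields functions of probability 0.
point_mass = {(0, 1, 1): Fraction(1)}

print(is_permutation_invariant(uniform, N_POINTS))        # True
print(is_permutation_invariant(by_zero_count, N_POINTS))  # True
print(is_permutation_invariant(point_mass, N_POINTS))     # False
```

Checking only the functions listed in `dist` suffices: if some unlisted f has a shuffle g with positive probability, the check on g with the inverse permutation catches it.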

A description of how to repeatedly select candidate solutions for evaluation is called a search algorithm.

Igel and Toussaint[6] and English[7] have established a general condition under which there is no free lunch.

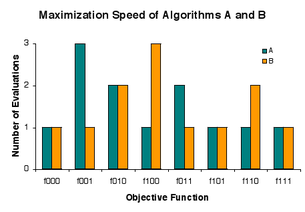

Droste, Jansen, and Wegener have proved a theorem they interpret as indicating that there is "(almost) no free lunch" in practice.[8]

A "problem" is, more formally, an objective function that associates candidate solutions with goodness values.

A search algorithm takes an objective function as input, evaluates candidate solutions one by one, and outputs the sequence of observed goodness values.
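A search algorithm in this sense can be sketched as a rule that picks the next unvisited candidate from the history of evaluations so far. The helper names (`run_search`, `left_to_right`, `adaptive`) and the choice to evaluate every candidate exactly once are assumptions made for illustration; practical search algorithms usually stop early.

```python
from typing import Callable, List, Sequence, Tuple

History = List[Tuple[int, int]]             # (candidate, observed value) pairs so far
Rule = Callable[[History, List[int]], int]  # picks the next candidate to evaluate


def run_search(objective: Sequence[int], rule: Rule) -> List[int]:
    """Evaluate each candidate exactly once, in the order chosen by `rule`,
    and return the sequence of observed goodness values."""
    unvisited = list(range(len(objective)))
    history: History = []
    while unvisited:
        x = rule(history, unvisited)
        unvisited.remove(x)
        history.append((x, objective[x]))
    return [value for _, value in history]


def left_to_right(history: History, unvisited: List[int]) -> int:
    return unvisited[0]


def adaptive(history: History, unvisited: List[int]) -> int:
    # Hypothetical rule: after observing a 0, jump to the last unvisited candidate.
    if history and history[-1][1] == 0:
        return unvisited[-1]
    return unvisited[0]


f = (1, 0, 1, 0)  # hypothetical objective function over candidates 0..3
print(run_search(f, left_to_right))  # [1, 0, 1, 0]
print(run_search(f, adaptive))       # [1, 0, 0, 1]
```

The output is only the sequence of values, not the candidates, which is why performance measured on outputs can be compared across algorithms.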

Indeed, there seems to be no interesting application of search algorithms in the class under consideration other than to optimization problems.

The original no free lunch (NFL) theorems assume that all objective functions are equally likely to be input to search algorithms.[2]

It has since been established that there is NFL if and only if, loosely speaking, "shuffling" objective functions has no impact on their probabilities.
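Under the original uniform assumption, the theorem can be checked by brute force on a toy space. This sketch enumerates every objective function from 4 candidates to the values {0, 1, 2} (sizes chosen arbitrarily so that all 81 functions can be listed), runs two hypothetical deterministic, non-repeating search rules (`fixed_order` and `adaptive`) on each, and measures performance as the number of evaluations needed to first observe the best value in the output, a common measure assumed here. Because each rule maps the 81 functions one-to-one onto the 81 possible output sequences, the two performance histograms coincide.

```python
from collections import Counter
from itertools import product

N_POINTS = 4        # size of the candidate space (small enough to enumerate)
VALUES = (0, 1, 2)  # possible goodness values; lower is better in this sketch


def trace(f, rule):
    """Run a deterministic, non-repeating search over all candidates of f
    and return the tuple of observed values."""
    unvisited = list(range(N_POINTS))
    history = []
    while unvisited:
        x = rule(history, unvisited)
        unvisited.remove(x)
        history.append((x, f[x]))
    return tuple(value for _, value in history)


def fixed_order(history, unvisited):
    return unvisited[0]


def adaptive(history, unvisited):
    # Hypothetical heuristic: after seeing the worst value, jump to the far end.
    if history and history[-1][1] == max(VALUES):
        return unvisited[-1]
    return unvisited[0]


def evals_to_min(values):
    """Evaluations needed until the best value in the output first appears."""
    return values.index(min(values)) + 1


# Uniform distribution: every objective function counts exactly once.
all_functions = list(product(VALUES, repeat=N_POINTS))
perf_fixed = Counter(evals_to_min(trace(f, fixed_order)) for f in all_functions)
perf_adaptive = Counter(evals_to_min(trace(f, adaptive)) for f in all_functions)

print(perf_fixed == perf_adaptive)  # True
```

Any other deterministic, non-repeating rule substituted for `adaptive` gives the same histogram, since it too produces each output sequence exactly once.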

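Because the goodness values of a typical objective function are effectively random, very good solutions are plentiful; accordingly, a search algorithm will rarely evaluate more than a small fraction of the candidates before locating one.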
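The claim can be quantified under an assumption: if the goodness values are effectively random, the positions of the k best candidates are uniformly random, so m distinct evaluations by any non-repeating algorithm miss all of them with the hypergeometric probability computed in this sketch. The sizes `n`, `k` and `m` are hypothetical.

```python
def prob_miss_top_k(n, k, m):
    """Probability that m distinct candidates, drawn uniformly at random without
    replacement from n, all miss the k best ones."""
    p = 1.0
    for i in range(m):
        # After i misses, n - k - i of the remaining n - i candidates are still misses.
        p *= (n - k - i) / (n - i)
    return p


n = 1_000_000  # hypothetical number of candidate solutions
k = 1_000      # "very good" = top 0.1% of the candidates
m = 5_000      # evaluate only 0.5% of the candidates

p_miss = prob_miss_top_k(n, k, m)
print(f"P(no top-0.1% candidate after {m} evaluations) = {p_miss:.4f}")
```

With these numbers the miss probability is below 1%, even though only 0.5% of the candidates are evaluated; it is also bounded above by (1 - k/n)^m.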

A typical objective function or algorithm contains more information than the observable universe is capable of registering, according to an estimate by Seth Lloyd.
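A back-of-the-envelope version of this comparison follows, assuming for illustration that candidates are 300-bit strings, that goodness values are 0 or 1, and that Lloyd's estimate is of the order of 10^90 bits. The comment spells out the counting argument behind "typical": most functions cannot be described in substantially fewer bits than the table of all their values.

```python
import math

CANDIDATE_BITS = 300          # assumed: each candidate solution is a 300-bit string
LLOYD_BOUND_BITS = 10 ** 90   # order of Seth Lloyd's estimate for the observable universe

n_candidates = 2 ** CANDIDATE_BITS
# With goodness values 0 and 1, an objective function is a table holding one bit
# per candidate, so there are 2 ** n_candidates functions. Fewer than
# 2 ** (n_candidates - c) binary descriptions are shorter than n_candidates - c
# bits, so all but a fraction 2 ** -c of the functions need at least
# n_candidates - c bits to describe.
bits_for_typical_function = n_candidates

print(bits_for_typical_function > LLOYD_BOUND_BITS)  # True
print(math.log2(LLOYD_BOUND_BITS))                   # about 298.97, so 10**90 is roughly 2**299
```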

Stated precisely, there is no free lunch for search algorithms if and only if the distribution of objective functions is invariant under permutation of the solution space.[6][7]
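The role of permutation invariance shows up in a small hypothetical example with two fixed-order searches, `LEFT` and `RIGHT`. Over a set of functions closed under permutation (each member equally likely), the two searches need the same average number of evaluations to reach the minimum. Over a skewed set whose minimum always sits at the last candidate, scanning from the right wins, which is the kind of prior information that lets a procedure be matched to a problem.

```python
from fractions import Fraction
from itertools import permutations

N = 4
LEFT = list(range(N))  # fixed-order search: candidates 0, 1, 2, 3
RIGHT = LEFT[::-1]     # fixed-order search: candidates 3, 2, 1, 0


def evals_to_min(f, order):
    """Evaluations a fixed-order search needs before it first sees the minimum of f."""
    values = [f[x] for x in order]
    return values.index(min(values)) + 1


def mean_perf(functions, order):
    """Average performance when every function in the set is equally likely."""
    return Fraction(sum(evals_to_min(f, order) for f in functions), len(functions))


base = (0, 1, 2, 3)                    # hypothetical objective function
closed = set(permutations(base))       # closed under permutation of the candidates
skewed = {(3, 2, 1, 0), (2, 3, 1, 0)}  # not closed: the minimum always sits at candidate 3

print(mean_perf(closed, LEFT), mean_perf(closed, RIGHT))  # 5/2 5/2
print(mean_perf(skewed, LEFT), mean_perf(skewed, RIGHT))  # 4 1
```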

While the NFL results constitute, in a strict sense, full employment theorems for optimization professionals, it is important to bear the larger context in mind.

The NFL results do not indicate that it is futile to take "pot shots" at problems with unspecialized algorithms.

No one has determined the fraction of practical problems for which an algorithm yields good results rapidly.

If an algorithm succeeds in finding a satisfactory solution in an acceptable amount of time, a small investment has yielded a big payoff.

Recently some philosophers of science have argued that there are ways to circumvent the no free lunch theorems, by using "meta-induction".

In the "self-play" problems that Wolpert and Macready analysed in their work on coevolution, a set of players works together to produce a champion, who then engages one or more antagonists in a subsequent multiplayer game.