Particle swarm optimization

PSO is originally attributed to Kennedy, Eberhart and Shi[2][3] and was first intended for simulating social behaviour,[4] as a stylized representation of the movement of organisms in a bird flock or fish school.

The book by Kennedy and Eberhart[5] describes many philosophical aspects of PSO and swarm intelligence.

[6][7] In 2017, a comprehensive review on theoretical and experimental works on PSO has been published by Bonyadi and Michalewicz.

[1] PSO is a metaheuristic as it makes few or no assumptions about the problem being optimized and can search very large spaces of candidate solutions.

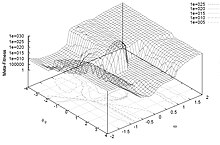

A basic PSO algorithm to minimize the cost function is then:[9] The values blo and bup represent the lower and upper boundaries of the search-space respectively.

The termination criterion can be the number of iterations performed, or a solution where the adequate objective function value is found.

[10] The parameters w, φp, and φg are selected by the practitioner and control the behaviour and efficacy of the PSO method (below).

The two other parameters can be then derived thanks to the constriction approach,[16] or freely selected, but the analyses suggest convergence domains to constrain them.

However, this approach might lead the swarm to be trapped into a local minimum,[29] thus different topologies have been used to control the flow of information among particles.

[3][4][12][16] This school of thought contends that the PSO algorithm and its parameters must be chosen so as to properly balance between exploration and exploitation to avoid premature convergence to a local optimum yet still ensure a good rate of convergence to the optimum.

This school of thought merely tries to find PSO algorithms and parameters that cause good performance regardless of how the swarm behaviour can be interpreted in relation to e.g. exploration and exploitation.

However, it was shown[39] that these simplifications do not affect the boundaries found by these studies for parameter where the swarm is convergent.

Considerable effort has been made in recent years to weaken the modeling assumption utilized during the stability analysis of PSO,[40] with the most recent generalized result applying to numerous PSO variants and utilized what was shown to be the minimal necessary modeling assumptions.

This means that determining the convergence capabilities of different PSO algorithms and parameters still depends on empirical results.

One attempt at addressing this issue is the development of an "orthogonal learning" strategy for an improved use of the information already existing in the relationship between p and g, so as to form a leading converging exemplar and to be effective with any PSO topology.

The aims are to improve the performance of PSO overall, including faster global convergence, higher solution quality, and stronger robustness.

Adaptive particle swarm optimization (APSO) [45] features better search efficiency than standard PSO.

Besides, through the utilization of a scale-adaptive fitness evaluation mechanism, PSO can efficiently address computationally expensive optimization problems.

[14] A series of standard implementations have been created by leading researchers, "intended for use both as a baseline for performance testing of improvements to the technique, as well as to represent PSO to the wider optimization community.

Having a well-known, strictly-defined standard algorithm provides a valuable point of comparison which can be used throughout the field of research to better test new advances.

[47] New and more sophisticated PSO variants are also continually being introduced in an attempt to improve optimization performance.

[44] Another research trend is to try to alleviate premature convergence (that is, optimization stagnation), e.g. by reversing or perturbing the movement of the PSO particles,[19][52][53][54] another approach to deal with premature convergence is the use of multiple swarms[55] (multi-swarm optimization).

[45][24] Another school of thought is that PSO should be simplified as much as possible without impairing its performance; a general concept often referred to as Occam's razor.

Another argument in favour of simplifying PSO is that metaheuristics can only have their efficacy demonstrated empirically by doing computational experiments on a finite number of optimization problems.

This means a metaheuristic such as PSO cannot be proven correct and this increases the risk of making errors in its description and implementation.

A good example of this[58] presented a promising variant of a genetic algorithm (another popular metaheuristic) but it was later found to be defective as it was strongly biased in its optimization search towards similar values for different dimensions in the search space, which happened to be the optimum of the benchmark problems considered.

Another simpler variant is the accelerated particle swarm optimization (APSO),[61] which also does not need to use velocity and can speed up the convergence in many applications.

PSO has also been applied to multi-objective problems,[63][64][65] in which the objective function comparison takes Pareto dominance into account when moving the PSO particles and non-dominated solutions are stored so as to approximate the pareto front.

As the PSO equations given above work on real numbers, a commonly used method to solve discrete problems is to map the discrete search space to a continuous domain, to apply a classical PSO, and then to demap the result.

But all these mathematical objects can be defined in a completely different way, in order to cope with binary problems (or more generally discrete ones), or even combinatorial ones.