Philosophy of artificial intelligence

AI founder John McCarthy defined intelligence as "the computational part of the ability to achieve goals in the world."

[24] This argument, first introduced as early as 1943[25] and vividly described by Hans Moravec in 1988,[26] is now associated with futurist Ray Kurzweil, who estimates that computer power will be sufficient for a complete brain simulation by the year 2029.

Even AI's harshest critics (such as Hubert Dreyfus and John Searle) agree that a brain simulation is possible in theory.

[32] In 1963, Allen Newell and Herbert A. Simon proposed that "symbol manipulation" was the essence of both human and machine intelligence.

Modern AI, based on statistics and mathematical optimization, does not use the high-level "symbol processing" that Newell and Simon discussed.

[40] Stuart Russell and Peter Norvig agree that Gödel's argument does not consider the nature of real-world human reasoning.

[41] Less formally, Douglas Hofstadter, in his Pulitzer Prize-winning book Gödel, Escher, Bach: An Eternal Golden Braid, states that these "Gödel-statements" always refer to the system itself, drawing an analogy to the way the Epimenides paradox uses statements that refer to themselves, such as "this statement is false" or "I am lying".

[49] Russell and Norvig point out that, in the years since Dreyfus published his critique, progress has been made towards discovering the "rules" that govern unconscious reasoning.

[51] Computational intelligence paradigms, such as neural nets and evolutionary algorithms, are mostly directed at simulating unconscious reasoning and learning.

In fact, AI research in general has moved away from high-level symbol manipulation, towards new models that are intended to capture more of our intuitive reasoning.

Historian and AI researcher Daniel Crevier wrote that "time has proven the accuracy and perceptiveness of some of Dreyfus's comments."

[10] Neither of Searle's two positions is of great concern to AI research, since they do not directly answer the question "can a machine display general intelligence?"

Some new age thinkers, for example, use the word "consciousness" to describe something similar to Bergson's "élan vital": an invisible, energetic fluid that permeates life and especially the mind.

[57] What is mysterious and fascinating is not so much what it is but how it is: how does a lump of fatty tissue and electricity give rise to this (familiar) experience of perceiving, meaning or thinking?

[59] Neurobiologists believe all these problems will be solved as we begin to identify the neural correlates of consciousness: the actual relationship between the machinery in our heads and its collective properties, such as the mind, experience and understanding.

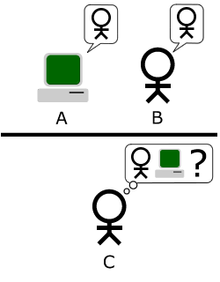

John Searle asks us to consider a thought experiment: suppose we have written a computer program that passes the Turing test and demonstrates general intelligent action.

[63] Gottfried Leibniz made essentially the same argument as Searle in 1714, using the thought experiment of expanding the brain until it was the size of a mill.

The idea has philosophical roots in Hobbes (who claimed reasoning was "nothing more than reckoning"), Leibniz (who attempted to create a logical calculus of all human ideas), Hume (who thought perception could be reduced to "atomic impressions") and even Kant (who analyzed all experience as controlled by formal rules).

Some versions of computationalism make the claim that (as Hobbes wrote) reasoning is "nothing more than reckoning". In other words, our intelligence derives from a form of calculation, similar to arithmetic.

[75] Daniel Crevier writes: "Moravec's point is that emotions are just devices for channeling behavior in a direction beneficial to the survival of one's species."

Vernor Vinge has suggested that over just a few years, computers will suddenly become thousands or millions of times more intelligent than humans.

[84] The US Navy has funded a report which indicates that as military robots become more complex, there should be greater attention to implications of their ability to make autonomous decisions.

[88] Turing said, "It is customary ... to offer a grain of comfort, in the form of a statement that some peculiarly human characteristic could never be imitated by a machine."

[77] Turing argues that these objections are often based on naive assumptions about the versatility of machines or are "disguised forms of the argument from consciousness".

[77] All of these arguments are tangential to the basic premise of AI, unless it can be shown that one of these traits is essential for general intelligence.

[90] The discussion on the topic has been reignited as a result of recent claims made by Google's LaMDA artificial intelligence system that it is sentient and has a "soul".

[91] LaMDA (Language Model for Dialogue Applications) is an artificial intelligence system that creates chatbots—AI robots designed to communicate with humans—by gathering vast amounts of text from the internet and using algorithms to respond to queries in the most fluid and natural way possible.

The transcripts of conversations between scientists and LaMDA reveal that the AI system excels at this, providing answers on challenging topics such as the nature of emotions, generating Aesop-style fables on the spot, and even describing its alleged fears.

[5] Physicist David Deutsch argues that without an understanding of philosophy or its concepts, AI development would suffer from a lack of progress.

[94] The main conference series on the issue is "Philosophy and Theory of AI" (PT-AI), run by Vincent C. Müller.