Strassen algorithm

Strassen's algorithm works for any ring, such as plus/multiply, but not all semirings, such as min-plus or boolean algebra, where the naive algorithm still works, and so called combinatorial matrix multiplication.

[1] The Strassen algorithm's publication resulted in more research about matrix multiplication that led to both asymptotically lower bounds and improved computational upper bounds.

, the "missing" rows and columns can be filled with zeros to obtain matrices with sizes of powers of two — though real implementations of the algorithm do not do this in practice.

: We recursively iterate this division process until the submatrices degenerate into numbers (elements of the ring

If, as mentioned above, the original matrix had a size that was not a power of 2, then the resulting product will have zero rows and columns just like

The particular crossover point for which Strassen's algorithm is more efficient depends on the specific implementation and hardware.

Earlier authors had estimated that Strassen's algorithm is faster for matrices with widths from 32 to 128 for optimized implementations.

[2] However, it has been observed that this crossover point has been increasing in recent years, and a 2010 study found that even a single step of Strassen's algorithm is often not beneficial on current architectures, compared to a highly optimized traditional multiplication, until matrix sizes exceed 1000 or more, and even for matrix sizes of several thousand the benefit is typically marginal at best (around 10% or less).

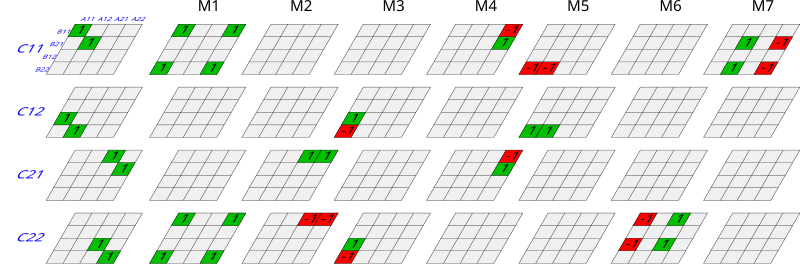

[4] It is possible to reduce the number of matrix additions by instead using the following form discovered by Winograd in 1971:[5]

The outline of the algorithm above showed that one can get away with just 7, instead of the traditional 8, matrix-matrix multiplications for the sub-blocks of the matrix.

On the other hand, one has to do additions and subtractions of blocks, though this is of no concern for the overall complexity: Adding matrices of size

The question then is how many operations exactly one needs for Strassen's algorithms, and how this compares with the standard matrix multiplication that takes approximately

The number of additions and multiplications required in the Strassen algorithm can be calculated as follows: let

The reduction in the number of arithmetic operations however comes at the price of a somewhat reduced numerical stability,[9] and the algorithm also requires significantly more memory compared to the naive algorithm.

Both initial matrices must have their dimensions expanded to the next power of 2, which results in storing up to four times as many elements, and the seven auxiliary matrices each contain a quarter of the elements in the expanded ones.

This would then give rise to the complexity one expects from the standard approach:

so that matrices that are larger are more efficiently multiplied with Strassen's algorithm than the "traditional" way.

However, the asymptotic statement does not imply that Strassen's algorithm is always faster even for small matrices, and in practice this is in fact not the case: For small matrices, the cost of the additional additions of matrix blocks outweighs the savings in the number of multiplications.

As a consequence of these sorts of considerations, Strassen's algorithm is typically only used on "large" matrices.

This kind of effect is even more pronounced with alternative algorithms such as the one by Coppersmith and Winograd: While asymptotically even faster, the cross-over point

In the case of matrices, the dual spaces A* and B* consist of maps into the field F induced by a scalar double-dot product, (i.e. in this case the sum of all the entries of a Hadamard product.)

required for matrix multiplication is tightly asymptotically bound to the rank

One useful property of the rank is that it is submultiplicative for tensor products, and this enables one to show that

[11]: 13 The description above states that the matrices are square, and the size is a power of two, and that padding should be used if needed.

This restriction allows the matrices to be split in half, recursively, until limit of scalar multiplication is reached.

The restriction simplifies the explanation, and analysis of complexity, but is not actually necessary;[12] and in fact, padding the matrix as described will increase the computation time and can easily eliminate the fairly narrow time savings obtained by using the method in the first place.

If the matrices are sufficiently non-square it will be worthwhile reducing the initial operation to more square products, using simple methods which are essentially

, for instance: These techniques will make the implementation more complicated, compared to simply padding to a power-of-two square; however, it is a reasonable assumption that anyone undertaking an implementation of Strassen, rather than conventional multiplication, will place a higher priority on computational efficiency than on simplicity of the implementation.

In practice, Strassen's algorithm can be implemented to attain better performance than conventional multiplication even for matrices as small as

, for matrices that are not at all square, and without requiring workspace beyond buffers that are already needed for a high-performance conventional multiplication.