tf–idf

In information retrieval, tf–idf (also TF*IDF, TFIDF, TF–IDF, or Tf–idf), short for term frequency–inverse document frequency, is a measure of the importance of a word to a document in a collection or corpus, adjusted for the fact that some words appear more frequently in general.

It refines the simple bag-of-words model by allowing the weight of each word to depend on the rest of the corpus.

It was often used as a weighting factor in information retrieval searches, text mining, and user modeling.[2]

Variations of the tf–idf weighting scheme were often used by search engines as a central tool in scoring and ranking a document's relevance given a user query.

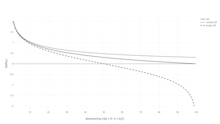

Karen Spärck Jones (1972) conceived a statistical interpretation of term-specificity called Inverse Document Frequency (idf), which became a cornerstone of term weighting:[3]

The specificity of a term can be quantified as an inverse function of the number of documents in which it occurs.

For example, among the df (document frequency) and idf values for some words in Shakespeare's 37 plays,[4] "Romeo", "Falstaff", and "salad" appear in very few plays, so seeing one of these words gives a good idea of which play the text comes from.

As a term appears in more documents, the ratio inside the logarithm approaches 1, bringing the idf and tf–idf closer to 0.
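The behaviour described above can be illustrated with a minimal sketch. It assumes the common form idf = log10(N / df), where N is the number of documents and df is the number of documents containing the term; the function name `idf` and the listed document frequencies are illustrative assumptions, and only the corpus size of 37 comes from the text.

```python
import math


def idf(num_docs: int, doc_freq: int) -> float:
    """Inverse document frequency, assuming the form log10(N / df)."""
    if doc_freq <= 0 or doc_freq > num_docs:
        raise ValueError("doc_freq must be between 1 and num_docs")
    return math.log10(num_docs / doc_freq)


N = 37  # corpus size, matching the 37 plays mentioned in the text

# Illustrative document frequencies (assumed values for demonstration only).
for df in (1, 4, 12, 36, 37):
    print(f"df={df:2d}  N/df={N / df:6.3f}  idf={idf(N, df):.3f}")
```

As df grows toward N, the ratio N/df falls toward 1 and the idf falls toward 0; a term present in every document gets an idf of exactly 0.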

Suppose that we have term count tables of a corpus consisting of only two documents.

An idf is constant per corpus, and accounts for the ratio of documents that include the word "this".

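The word "example" is more interesting: it occurs three times, but only in the second document. Using the base-10 logarithm, its idf is $\log_{10}(2/1) \approx 0.301$, so its tf–idf is zero for the first document and positive for the second.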
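The two-document example can be sketched in code. The corpus below is hypothetical: it only preserves the facts stated in the text ("this" occurs in both documents, "example" occurs three times and only in the second), while the remaining words and the document lengths are assumptions. Term frequency is taken here as the raw count divided by document length, which is one common variant among several.

```python
import math
from collections import Counter

# Hypothetical corpus; only the counts for "this" and "example" follow the text.
doc1 = "this is a a sample".split()
doc2 = "this is another another example example example".split()
corpus = [doc1, doc2]


def tf(term, doc):
    """Relative term frequency: occurrences of term divided by document length."""
    return Counter(doc)[term] / len(doc)


def idf(term, docs):
    """log10(N / df); returns 0.0 for unseen terms (a convention assumed here)."""
    df = sum(1 for d in docs if term in d)
    return math.log10(len(docs) / df) if df else 0.0


def tfidf(term, doc, docs):
    return tf(term, doc) * idf(term, docs)


for term in ("this", "example"):
    for name, doc in (("d1", doc1), ("d2", doc2)):
        print(f"tfidf({term!r}, {name}) = {tfidf(term, doc, corpus):.3f}")
```

Under these assumptions, "this" scores 0 in both documents because it appears in every document, while "example" scores 0 in the first document and about 0.129 in the second.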

In addition, tf–idf was applied to "visual words" for object matching in videos,[11] and to entire sentences.

When tf–idf was applied to citations, researchers could find no improvement over a simple citation-count weight that had no idf component.

The authors report that TF–IDuF was as effective as tf–idf but could also be applied in situations where, e.g., a user modeling system has no access to a global document corpus.