Bi-directional hypothesis of language and action

In addition, the hypothesis proposes the reverse effect: language comprehension influences movement and sensation.

This means that language stimuli influence both electrical activity in sensorimotor areas of the brain and actual movement.

Language stimuli influence electrical activity in sensorimotor areas of the brain that are specific to the bodily association of the words presented.

This is referred to as semantic somatotopy: the activation of sensorimotor areas specific to the body part that a word implies.

For example, when processing the meaning of the word “kick,” the regions in the motor and somatosensory cortices that represent the legs will become more active.

[4][5] Boulenger et al.[5] demonstrated this effect by presenting subjects with action-related language while measuring neural activity using fMRI.

[5] This body-part-specific increase in activation was exhibited about 3 seconds after presentation of the word, a time window that is thought to indicate semantic processing.

[5] Abstract language implying more figurative actions was used, associated with either the legs (e.g. “John kicked the habit”) or the arms (e.g. “Jane grasped the idea”).

[5] This activation was larger than that elicited by more literal sentences (e.g. “John kicked the object”), and was also present in the time window associated with semantic processing.

This has been demonstrated during an Action-Sentence Compatibility Effect (ACE) task, a common test used to study the relationship between language comprehension and motor behavior.

The ability of language to influence neural activity of motor systems also manifests itself behaviorally by altering movement.

Semantic priming has been implicated in these behavioral changes and has been used as evidence for the involvement of the motor system in language comprehension.

[1][7][13] This effect has been demonstrated in various types of movement, including hand posture during button pressing,[1] reaching,[7] and manual rotation.

In a study performed by Masson et al., subjects were presented with sentences that implied non-physical, abstract action with an object (e.g. "John thought about the calculator" or "Jane remembered the thumbtack").

[14] Target gestures were either compatible or incompatible with the described object, and were cued at two different time points, early and late.

A study by Olmstead et al.,[15] described in detail elsewhere, demonstrates more concretely the influence that the semantics of action language can have on movement coordination.

Briefly, this study investigated the effects of action language on the coordination of rhythmic bimanual hand movements.

Plausible, performable sentences led to a significant change in the relative phase shift of the bimanual pendulum task.

[17] During the stimulation protocols, subjects were shown 50 arm words, 50 leg words, 50 distractor words (with no bodily relation), and 100 pseudowords (not real words).

[17] Subjects were asked to indicate recognition of a meaningful word by moving their lips, and response times were measured.

[19] This patient shows significant impairments in conceptual and perceptual processing of sound-related language and objects.

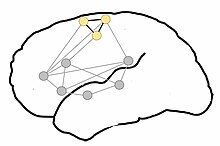

[23] Thought circuits are believed to have originally formed from basic anatomical connections that were strengthened by correlated activity through Hebbian learning and plasticity.

[23] Formation of these neural networks has been demonstrated with computational models using known anatomical connections and Hebbian learning principles.

[23][24] This leads to semantic topography, or the activation of motor areas related to the meaning and bodily association of action language.

[26] Using this theory, proponents of the bi-directional hypothesis have postulated that verbal working memory for action words would be impaired by movement of the concordant body part.