Boltzmann machine

[3] Boltzmann machines are theoretically intriguing because of the locality and Hebbian nature of their training algorithm (being trained by Hebb's rule), and because of their parallelism and the resemblance of their dynamics to simple physical processes.

Boltzmann machines with unconstrained connectivity have not been proven useful for practical problems in machine learning or inference, but if the connectivity is properly constrained, the learning can be made efficient enough to be useful for practical problems.

[4] They are named after the Boltzmann distribution in statistical mechanics, which is used in their sampling function.

They were heavily popularized and promoted by Geoffrey Hinton, Terry Sejnowski and Yann LeCun in cognitive sciences communities, particularly in machine learning,[2] as part of "energy-based models" (EBM), because the Hamiltonians of spin glasses are used as the energy function that serves as the starting point for defining the learning task.

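The global energy $E$ in a Boltzmann machine is identical in form to that of Hopfield networks and Ising models:

$$E = -\left(\sum_{i<j} w_{ij}\, s_i\, s_j + \sum_i \theta_i\, s_i\right)$$

Where $w_{ij}$ is the connection strength between unit $j$ and unit $i$, $s_i \in \{0, 1\}$ is the state of unit $i$, and $\theta_i$ is the bias of unit $i$ in the global energy function. Often the weights $w_{ij}$ are represented as a symmetric matrix $W = [w_{ij}]$ with zeros along the diagonal.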
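As an illustration, the energy of a Boltzmann machine with binary units is commonly written as $E = -(\sum_{i<j} w_{ij} s_i s_j + \sum_i \theta_i s_i)$. The following is a minimal sketch of that computation. It assumes a symmetric weight matrix with a zero diagonal, and the function name `global_energy` and the 3-unit example values are hypothetical.

```python
def global_energy(weights, biases, states):
    """Return E = -(sum_{i<j} w_ij * s_i * s_j + sum_i theta_i * s_i)."""
    n = len(states)
    # Each unordered pair of units is counted once (i < j).
    pairwise = sum(
        weights[i][j] * states[i] * states[j]
        for i in range(n)
        for j in range(i + 1, n)
    )
    bias_term = sum(biases[i] * states[i] for i in range(n))
    return -(pairwise + bias_term)


# Hypothetical 3-unit machine: symmetric weights, zero diagonal.
W = [
    [0.0, 1.0, -2.0],
    [1.0, 0.0, 0.5],
    [-2.0, 0.5, 0.0],
]
theta = [0.1, -0.3, 0.2]

energy = global_energy(W, theta, [1, 1, 0])  # about -0.8
```

Lower energy corresponds to more probable global states under the Boltzmann distribution.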

This relation is the source of the logistic function found in probability expressions in variants of the Boltzmann machine.
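The logistic form can be sketched as follows. The sketch assumes the usual convention that the energy gap of unit $i$ is $\Delta E_i = \sum_j w_{ij} s_j + \theta_i$ (the energy with the unit off minus the energy with it on) and that $T$ is the temperature. The function names and example values are hypothetical.

```python
import math
import random


def energy_gap(weights, biases, states, i):
    """Energy with unit i off minus energy with unit i on."""
    return biases[i] + sum(
        weights[i][j] * states[j] for j in range(len(states)) if j != i
    )


def prob_on(delta_e, temperature=1.0):
    """Logistic function of the energy gap, written to avoid overflow."""
    x = delta_e / temperature
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def gibbs_update(weights, biases, states, i, temperature=1.0, rng=random):
    """Resample unit i in place from its conditional distribution."""
    p = prob_on(energy_gap(weights, biases, states, i), temperature)
    states[i] = 1 if rng.random() < p else 0
    return states


# Hypothetical 3-unit machine: symmetric weights, zero diagonal.
W = [
    [0.0, 1.0, -2.0],
    [1.0, 0.0, 0.5],
    [-2.0, 0.5, 0.0],
]
theta = [0.1, -0.3, 0.2]
state = gibbs_update(W, theta, [1, 1, 0], i=2, rng=random.Random(0))
```

Raising the temperature flattens the logistic curve toward 0.5, so units flip more freely. Lowering it makes the update nearly deterministic.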

This relationship is true when the machine is "at thermal equilibrium", meaning that the probability distribution of global states has converged.

The distribution over global states converges as the Boltzmann machine reaches thermal equilibrium.

This is more biologically realistic than the information needed by a connection in many other neural network training algorithms, such as backpropagation.

Therefore, the training procedure performs gradient ascent on the log-likelihood of the observed data.

This is in contrast to the EM algorithm, where the posterior distribution of the hidden nodes must be calculated before the maximization of the expected value of the complete data likelihood during the M-step.
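A gradient-ascent step of this kind is commonly written as $\Delta w_{ij} \propto \langle s_i s_j \rangle_{\text{data}} - \langle s_i s_j \rangle_{\text{model}}$. The sketch below assumes that equilibrium samples from both phases are already available: one set with the visible units clamped to data, and one set with the network running freely. The function names, the learning rate `lr` and the sample format are hypothetical.

```python
def pair_means(samples):
    """Average of s_i * s_j over a list of binary state vectors."""
    n, m = len(samples[0]), len(samples)
    return [
        [sum(s[i] * s[j] for s in samples) / m for j in range(n)]
        for i in range(n)
    ]


def unit_means(samples):
    """Average of s_i over a list of binary state vectors."""
    n, m = len(samples[0]), len(samples)
    return [sum(s[i] for s in samples) / m for i in range(n)]


def learning_step(weights, biases, data_samples, model_samples, lr=0.1):
    """One gradient-ascent step on the log-likelihood (assumed sample-based)."""
    p_data, p_model = pair_means(data_samples), pair_means(model_samples)
    u_data, u_model = unit_means(data_samples), unit_means(model_samples)
    n = len(biases)
    # Each weight changes using only the co-activity of the two units it joins.
    new_weights = [
        [
            0.0 if i == j
            else weights[i][j] + lr * (p_data[i][j] - p_model[i][j])
            for j in range(n)
        ]
        for i in range(n)
    ]
    # Each bias changes using only the activity of its own unit.
    new_biases = [biases[i] + lr * (u_data[i] - u_model[i]) for i in range(n)]
    return new_weights, new_biases
```

The update for each connection depends only on statistics of the two units it joins, which is the locality property discussed above.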

Training the biases is similar, but uses only single-node activity.

Theoretically, the Boltzmann machine is a rather general computational medium.

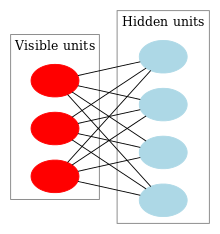

This is due to important effects, specifically:

Although learning is impractical in general Boltzmann machines, it can be made quite efficient in a restricted Boltzmann machine (RBM), which does not allow intralayer connections: there are no visible-to-visible and no hidden-to-hidden connections.
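Because an RBM has no connections within a layer, the hidden units are conditionally independent given the visible units, and vice versa, so a whole layer can be sampled in a single pass. The sketch below illustrates this with one step of contrastive divergence (CD-1), a widely used approximate training procedure for RBMs that the text above does not describe. All names, the learning rate and the convention that `W[i][j]` joins visible unit `i` to hidden unit `j` are assumptions made for illustration.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def hidden_probs(v, W, c):
    """P(h_j = 1 | v) for every hidden unit; no hidden-hidden terms."""
    return [
        sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
        for j in range(len(c))
    ]


def visible_probs(h, W, b):
    """P(v_i = 1 | h) for every visible unit; no visible-visible terms."""
    return [
        sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
        for i in range(len(b))
    ]


def sample(probs, rng):
    """Draw independent binary values with the given probabilities."""
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_step(v0, W, b, c, lr=0.1, rng=random):
    """One CD-1 update in place: data statistics minus one-step reconstruction."""
    ph0 = hidden_probs(v0, W, c)
    h0 = sample(ph0, rng)
    v1 = sample(visible_probs(h0, W, b), rng)
    ph1 = hidden_probs(v1, W, c)
    for i in range(len(b)):
        for j in range(len(c)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        b[i] += lr * (v0[i] - v1[i])
    for j in range(len(c)):
        c[j] += lr * (ph0[j] - ph1[j])
    return W, b, c
```

A general Boltzmann machine would instead need many sequential unit-by-unit updates to approach equilibrium before its statistics could be collected.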

This method of stacking RBMs makes it possible to train many layers of hidden units efficiently and is one of the most common deep learning strategies.

[7] A deep Boltzmann machine (DBM) is a type of binary pairwise Markov random field (undirected probabilistic graphical model) with multiple layers of hidden random variables.

Like DBNs, DBMs can learn complex and abstract internal representations of the input in tasks such as object or speech recognition, using limited, labeled data to fine-tune the representations built using a large set of unlabeled sensory input data.

However, unlike DBNs and deep convolutional neural networks, they pursue the inference and training procedure in both directions, bottom-up and top-down, which allows the DBM to better unveil the representations of the input structures.

[8] This approximate inference, which must be done for each test input, is about 25 to 50 times slower than a single bottom-up pass in DBMs.

This makes joint optimization impractical for large data sets, and restricts the use of DBMs for tasks such as feature representation.

[12] Similar to basic RBMs and their variants, a spike-and-slab RBM is a bipartite graph, while, as in Gaussian RBMs (GRBMs), the visible units (input) are real-valued.

A spike is a discrete probability mass at zero, while a slab is a density over a continuous domain;[13] their mixture forms a prior.

[14] An extension of ssRBM called μ-ssRBM provides extra modeling capacity using additional terms in the energy function.

The Boltzmann machine is based on the Sherrington–Kirkpatrick spin glass model by David Sherrington and Scott Kirkpatrick.

[15] The seminal publication by John Hopfield (1982) applied methods of statistical mechanics, mainly the recently developed (1970s) theory of spin glasses, to study associative memory (later named the "Hopfield network").

[16] The original contribution in applying such energy-based models in cognitive science appeared in papers by Geoffrey Hinton and Terry Sejnowski.

[17][18][19] In a 1995 interview, Hinton stated that in February or March of 1983 he was going to give a talk on simulated annealing in Hopfield networks, so he had to design a learning algorithm for the talk, which resulted in the Boltzmann machine learning algorithm.

[20] The idea of applying the Ising model with annealed Gibbs sampling was used in Douglas Hofstadter's Copycat project (1984).

[21][22] The explicit analogy drawn with statistical mechanics in the Boltzmann machine formulation led to the use of terminology borrowed from physics (e.g., "energy"), which became standard in the field.

Similar ideas (with a change of sign in the energy function) are found in Paul Smolensky's "Harmony Theory".

[23] Ising models can be generalized to Markov random fields, which find widespread application in linguistics, robotics, computer vision and artificial intelligence.