Characteristic function (probability theory)

There are particularly simple results for the characteristic functions of distributions defined by weighted sums of random variables.

In addition to univariate distributions, characteristic functions can be defined for vector- or matrix-valued random variables, and can also be extended to more generic cases.

Another important application is to the theory of the decomposability of random variables.

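If a random variable X has a probability density function f, its characteristic function is

$$\varphi_f(t) = \int_{-\infty}^{\infty} e^{itx} f(x)\,dx,$$

and φf is referred to as the characteristic function corresponding to a density f. The notion of characteristic functions generalizes to multivariate random variables and more complicated random elements.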
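To make the density form of the definition concrete, here is a minimal Python sketch. It approximates φf(t) = ∫ e^{itx} f(x) dx with a midpoint rule and compares the result with the closed form (e^{it} − 1)/(it) for the uniform density on [0, 1]. The helper names, the integration rule and the grid size are illustrative choices, not a standard API. The sketch also assumes that f vanishes outside the integration interval.

```python
import cmath


def char_fn_from_density(f, t, lo, hi, n=20_000):
    """Approximate phi_f(t) = integral of exp(i*t*x) * f(x) dx over [lo, hi]
    with the composite midpoint rule (assumes f is zero outside [lo, hi])."""
    h = (hi - lo) / n
    total = 0j
    for k in range(n):
        x = lo + (k + 0.5) * h
        total += cmath.exp(1j * t * x) * f(x)
    return total * h


def uniform_density(x):
    # Density of the uniform distribution on [0, 1].
    return 1.0 if 0.0 <= x <= 1.0 else 0.0


def uniform_char_fn(t):
    # Closed form (e^{it} - 1) / (it), with limiting value 1 at t = 0.
    if t == 0:
        return 1 + 0j
    return (cmath.exp(1j * t) - 1) / (1j * t)


for t in (0.0, 0.5, 2.0, 10.0):
    print(t, char_fn_from_density(uniform_density, t, 0.0, 1.0), uniform_char_fn(t))
```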

The argument of the characteristic function will always belong to the continuous dual of the space where the random variable X takes its values.

Oberhettinger (1973) provides extensive tables of characteristic functions for common cases.

The bijection stated above between probability distributions and characteristic functions is sequentially continuous.

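That is, whenever a sequence of distribution functions Fj(x) converges (weakly) to some distribution F(x), the corresponding sequence of characteristic functions φj(t) also converges, and the limit φ(t) is the characteristic function of law F. More formally, this is Lévy's continuity theorem. A sequence X1, X2, ... of n-variate random vectors converges in distribution to a random vector X if and only if the characteristic functions converge pointwise:

$$X_j \xrightarrow{\mathcal{D}} X \quad\Longleftrightarrow\quad \varphi_{X_j}(t) \to \varphi_X(t) \ \text{for every } t \in \mathbb{R}^n.$$

Moreover, if the φXj converge pointwise to some function φ that is continuous at t = 0, then φ is the characteristic function of some random vector X, and Xj converges in distribution to X. This theorem can be used to prove the law of large numbers and the central limit theorem.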
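The sketch below illustrates the pointwise convergence that the continuity theorem turns into convergence in distribution. It uses a hypothetical example not taken from the text: independent variables Xk equal to +1 or −1 with probability 1/2 each, so that each has characteristic function cos t. The standardized sum (X1 + ... + Xn)/√n then has characteristic function cos(t/√n)^n. As n grows, this approaches the standard normal characteristic function e^{−t²/2}, in line with the central limit theorem. The helper names and the grid of t values are illustrative.

```python
import math


def rademacher_sum_char_fn(t, n):
    """Characteristic function of (X_1 + ... + X_n) / sqrt(n), where the X_k are
    independent and equal to +1 or -1 with probability 1/2 each.
    Each X_k has characteristic function cos(t), so the scaled sum has cos(t / sqrt(n)) ** n."""
    return math.cos(t / math.sqrt(n)) ** n


def standard_normal_char_fn(t):
    # Characteristic function of N(0, 1).
    return math.exp(-t * t / 2)


def max_gap(n, ts=(0.5, 1.0, 2.0, 3.0)):
    # Largest pointwise distance from the N(0, 1) characteristic function over a few t values.
    return max(abs(rademacher_sum_char_fn(t, n) - standard_normal_char_fn(t)) for t in ts)


for n in (1, 10, 100, 10_000):
    print(n, max_gap(n))
```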

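The density function is the Radon–Nikodym derivative of the distribution μX with respect to the Lebesgue measure λ. When φX is integrable, the density can be recovered by the inversion formula (univariate case):

$$f_X(x) = \frac{d\mu_X}{d\lambda}(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-itx}\,\varphi_X(t)\,dt.$$

[note 1]

Theorem. Let φX be the characteristic function of distribution function FX. Suppose two points a < b are such that {x | a < x < b} is a continuity set of μX. In the univariate case, this condition is equivalent to continuity of FX at the points a and b. Then, for scalar X,

$$F_X(b) - F_X(a) = \frac{1}{2\pi}\lim_{T\to\infty}\int_{-T}^{T}\frac{e^{-ita} - e^{-itb}}{it}\,\varphi_X(t)\,dt.$$

Theorem. Suppose a is (possibly) an atom of X. In the univariate case, this means a is a point of discontinuity of FX. Then, for scalar X,

$$F_X(a) - F_X(a-0) = \lim_{T\to\infty}\frac{1}{2T}\int_{-T}^{T} e^{-ita}\,\varphi_X(t)\,dt.$$

Theorem (Gil-Pelaez).[16] For a univariate random variable X, if x is a continuity point of FX, then

$$F_X(x) = \frac{1}{2} - \frac{1}{\pi}\int_{0}^{\infty}\frac{\operatorname{Im}\left[e^{-itx}\,\varphi_X(t)\right]}{t}\,dt,$$

where the imaginary part of a complex number z is given by Im(z) = (z − z*)/(2i).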
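The Gil-Pelaez formula FX(x) = 1/2 − (1/π) ∫₀^∞ Im[e^{−itx} φX(t)]/t dt lends itself to direct numerical evaluation. The minimal sketch below assumes that φX decays fast enough for the integral to be truncated at a finite upper limit. The standard normal characteristic function e^{−t²/2} serves only as a test case, with math.erf supplying the reference CDF. The truncation point and grid size are illustrative and would need tuning for slowly decaying characteristic functions.

```python
import cmath
import math


def gil_pelaez_cdf(phi, x, upper=12.0, n=24_000):
    """F_X(x) = 1/2 - (1/pi) * integral_0^inf Im[exp(-i t x) phi(t)] / t dt.
    The integral is truncated to [0, upper] and evaluated with the midpoint rule;
    midpoints avoid the removable singularity at t = 0."""
    h = upper / n
    total = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        total += (cmath.exp(-1j * t * x) * phi(t)).imag / t
    return 0.5 - total * h / math.pi


def normal_char_fn(t):
    # Characteristic function of N(0, 1).
    return cmath.exp(-0.5 * t * t)


def normal_cdf(x):
    # Reference CDF of N(0, 1) via the error function.
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


for x in (-1.5, 0.0, 1.0, 2.0):
    print(x, gil_pelaez_cdf(normal_char_fn, x), normal_cdf(x))
```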

Other criteria also exist, such as Khinchine’s, Mathias’s, or Cramér’s theorems, although they are just as difficult to apply.

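Pólya’s theorem, on the other hand, provides a very simple convexity condition that is sufficient but not necessary. Suppose φ is a real-valued, even, continuous function with φ(0) = 1 that is convex for t > 0 and satisfies φ(t) → 0 as t → ∞. Then φ is the characteristic function of an absolutely continuous distribution symmetric about 0.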

Characteristic functions which satisfy this condition are called Pólya-type.
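Pólya's condition requires a real-valued, even, continuous φ with φ(0) = 1 that is convex for t > 0 and tends to 0 as t → ∞. When it holds, φ is the characteristic function of an absolutely continuous symmetric distribution. The triangle function max(1 − |t|, 0) is a standard example, and its density is (1 − cos x)/(πx²). The sketch below, a hypothetical illustration, recovers that density numerically with the inversion integral p(x) = (1/π) ∫₀¹ cos(tx) φ(t) dt. This integral is valid for a real, even φ that vanishes outside [−1, 1]. The helper names and grid size are illustrative.

```python
import math


def triangle_char_fn(t):
    # max(1 - |t|, 0): real, even, phi(0) = 1, convex for t > 0, and 0 for |t| >= 1.
    return max(1.0 - abs(t), 0.0)


def density_from_even_char_fn(phi, x, support=1.0, n=20_000):
    """For a real, even phi vanishing outside [-support, support]:
    p(x) = (1 / (2 pi)) * integral exp(-itx) phi(t) dt
         = (1 / pi) * integral_0^support cos(t x) phi(t) dt  (midpoint rule)."""
    h = support / n
    total = sum(math.cos((k + 0.5) * h * x) * phi((k + 0.5) * h) for k in range(n))
    return total * h / math.pi


def triangle_density(x):
    # Closed form (1 - cos x) / (pi x^2), with limiting value 1 / (2 pi) at x = 0.
    if x == 0:
        return 1 / (2 * math.pi)
    return (1 - math.cos(x)) / (math.pi * x * x)


for x in (0.0, 0.5, 2.0, 2 * math.pi, 7.0):
    print(x, density_from_even_char_fn(triangle_char_fn, x), triangle_density(x))
```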

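For example, let X1, X2, ..., Xn be a sequence of independent (and not necessarily identically distributed) random variables, and let

$$S_n = \sum_{i=1}^{n} a_i X_i,$$

where the ai are constants. Then the characteristic function of Sn is given by

$$\varphi_{S_n}(t) = \varphi_{X_1}(a_1 t)\,\varphi_{X_2}(a_2 t)\cdots\varphi_{X_n}(a_n t).$$

In particular, for independent X and Y, φX+Y(t) = φX(t)φY(t).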

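To see this, write out the definition of the characteristic function:

$$\varphi_{X+Y}(t) = \operatorname{E}\left[e^{it(X+Y)}\right] = \operatorname{E}\left[e^{itX}e^{itY}\right] = \operatorname{E}\left[e^{itX}\right]\operatorname{E}\left[e^{itY}\right] = \varphi_X(t)\,\varphi_Y(t).$$

The independence of X and Y is required to establish the equality of the third and fourth expressions.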
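The product rule for weighted sums, φSn(t) = φX1(a1 t) ⋯ φXn(an t), translates directly into code. In this minimal sketch, the Xi are assumed to be i.i.d. standard Cauchy, with characteristic function e^{−|t|}, and the weights are ai = 1/n. The helper names are illustrative. The product collapses back to e^{−|t|}, which shows that the sample mean of i.i.d. standard Cauchy variables is again standard Cauchy.

```python
import cmath


def cauchy_char_fn(t, x0=0.0, gamma=1.0):
    # Characteristic function of the Cauchy distribution with location x0 and scale gamma.
    return cmath.exp(1j * x0 * t - gamma * abs(t))


def weighted_sum_char_fn(char_fns, weights, t):
    """Characteristic function of S_n = sum_i a_i X_i for independent X_i:
    the product of phi_{X_i}(a_i * t)."""
    result = 1 + 0j
    for phi, a in zip(char_fns, weights):
        result *= phi(a * t)
    return result


n = 8
for t in (0.5, 1.0, 3.0):
    # Sample mean of n i.i.d. standard Cauchy variables: weights a_i = 1/n.
    mean_cf = weighted_sum_char_fn([cauchy_char_fn] * n, [1 / n] * n, t)
    print(t, mean_cf, cauchy_char_fn(t))
```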

Another special case of interest, for identically distributed random variables, is ai = 1/n. In that case Sn is the sample mean, and writing X̄ for the mean gives

$$\varphi_{\overline{X}}(t) = \left(\varphi_X\!\left(\frac{t}{n}\right)\right)^{n}.$$

Characteristic functions can also be used to find moments of a random variable.

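Provided that the n-th moment exists, the characteristic function can be differentiated n times, and

$$\operatorname{E}\left[X^n\right] = i^{-n}\left[\frac{d^n}{dt^n}\varphi_X(t)\right]_{t=0} = i^{-n}\,\varphi_X^{(n)}(0).$$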

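Formally, the density can be written in terms of the moments using the derivatives of the Dirac delta function:

$$f_X(x) = \sum_{n=0}^{\infty}\frac{(-1)^n}{n!}\,\delta^{(n)}(x)\,\operatorname{E}\left[X^n\right].$$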

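For example, if X follows a normal distribution N(μ, σ²), then φX(t) = e^{iμt − σ²t²/2}. Differentiating twice and evaluating at t = 0 gives E[X²] = i^{−2}φX''(0) = μ² + σ². This calculation is easier to carry out than applying the definition of expectation and using integration by parts to evaluate E[X²].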
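The moment formula E[X^n] = i^{−n} φX^{(n)}(0) can also be applied numerically when only φX is available. The minimal sketch below assumes a smooth characteristic function and uses central finite differences with an illustrative step size. As a hypothetical test case it uses the normal characteristic function exp(iμt − σ²t²/2) with μ = 1.5 and σ = 2, whose first two moments are μ and μ² + σ². The helper names are illustrative.

```python
import cmath


def make_normal_char_fn(mu, sigma):
    # Characteristic function of N(mu, sigma^2): exp(i mu t - sigma^2 t^2 / 2).
    def phi(t):
        return cmath.exp(1j * mu * t - 0.5 * (sigma * t) ** 2)
    return phi


def moments_from_char_fn(phi, h=1e-3):
    """Estimate E[X] = i^{-1} phi'(0) and E[X^2] = i^{-2} phi''(0) = -phi''(0)
    with central finite differences of step h."""
    d1 = (phi(h) - phi(-h)) / (2 * h)
    d2 = (phi(h) - 2 * phi(0.0) + phi(-h)) / (h * h)
    return (d1 / 1j).real, (-d2).real


mean, second_moment = moments_from_char_fn(make_normal_char_fn(1.5, 2.0))
print(mean, second_moment)  # close to 1.5 and 1.5**2 + 2.0**2 = 6.25
```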

Characteristic functions can be used as part of procedures for fitting probability distributions to samples of data.

One case where this is a practical alternative to other approaches is fitting the stable distribution. Closed-form expressions for its density are not available in general, which makes maximum likelihood estimation difficult to implement.

Paulson et al. (1975)[19] and Heathcote (1977)[20] provide some theoretical background for such an estimation procedure.

In addition, Yu (2004)[21] describes applications of empirical characteristic functions to fit time series models where likelihood procedures are impractical.
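A very simple estimator based on the characteristic function shows the idea. For a Cauchy distribution (stable with index 1) with scale γ, |φ(t)| = e^{−γ|t|}. Hence γ can be estimated as −log|φ̂(t)|/t, where φ̂(t) = (1/n) Σj e^{itxj} is the empirical characteristic function. This is a swapped-in illustrative estimator, not the procedure of Paulson et al. or Heathcote. The evaluation point t = 0.5, the seed and the simulated sample are assumptions made for the sketch.

```python
import cmath
import math
import random


def empirical_char_fn(sample, t):
    # phi_hat(t) = (1/n) * sum_j exp(i t x_j)
    return sum(cmath.exp(1j * t * x) for x in sample) / len(sample)


def cauchy_scale_from_ecf(sample, t=0.5):
    # Illustrative estimator: for Cauchy(x0, gamma), |phi(t)| = exp(-gamma |t|),
    # so gamma = -log|phi(t)| / |t|; the location x0 drops out of the modulus.
    return -math.log(abs(empirical_char_fn(sample, t))) / abs(t)


rng = random.Random(12345)
true_location, true_scale = 3.0, 2.0
# Inverse-CDF sampling: x0 + gamma * tan(pi * (U - 1/2)) is Cauchy(x0, gamma).
sample = [true_location + true_scale * math.tan(math.pi * (rng.random() - 0.5))
          for _ in range(20_000)]
print(cauchy_scale_from_ecf(sample))  # should be near the true scale of 2.0
```

A moment-based estimate would fail here because the Cauchy distribution has no finite mean or variance. The empirical characteristic function, by contrast, is bounded by 1 for every sample.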

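For example, suppose X ~ Γ(k1, θ) and Y ~ Γ(k2, θ) are independent. Their characteristic functions are

$$\varphi_X(t) = (1 - \theta i t)^{-k_1}, \qquad \varphi_Y(t) = (1 - \theta i t)^{-k_2}.$$

By independence and the basic properties of characteristic functions, this leads to

$$\varphi_{X+Y}(t) = \varphi_X(t)\,\varphi_Y(t) = (1 - \theta i t)^{-(k_1 + k_2)}.$$

This is the characteristic function of the gamma distribution with scale parameter θ and shape parameter k1 + k2, and we therefore conclude

$$X + Y \sim \Gamma(k_1 + k_2, \theta).$$

The result extends to n independent gamma-distributed random variables with the same scale parameter:

$$X_i \sim \Gamma(k_i, \theta) \ \text{for } i = 1, \ldots, n \implies \sum_{i=1}^{n} X_i \sim \Gamma\!\left(\sum_{i=1}^{n} k_i,\ \theta\right).$$

As defined above, the argument of the characteristic function is treated as a real number. However, certain aspects of the theory of characteristic functions are advanced by extending the definition into the complex plane by analytic continuation, in cases where this is possible.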
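The gamma-sum example can be checked by simulation. The minimal sketch below relies on Python's standard random.gammavariate(alpha, beta), which draws from a gamma distribution with shape alpha and scale beta. It compares the empirical characteristic function of simulated sums X + Y with (1 − θit)^{−(k1+k2)}. It also confirms that the product of the two individual characteristic functions equals that expression. The parameter values, seed and sample size are illustrative.

```python
import cmath
import random


def gamma_char_fn(t, shape, scale):
    # Characteristic function of Gamma(shape k, scale theta): (1 - i theta t)^(-k), principal branch.
    return (1 - 1j * scale * t) ** (-shape)


def empirical_char_fn(sample, t):
    # phi_hat(t) = (1/n) * sum_j exp(i t x_j)
    return sum(cmath.exp(1j * t * x) for x in sample) / len(sample)


rng = random.Random(2024)
k1, k2, theta = 1.5, 2.5, 0.8
sums = [rng.gammavariate(k1, theta) + rng.gammavariate(k2, theta) for _ in range(50_000)]

for t in (0.25, 0.5, 1.0):
    product = gamma_char_fn(t, k1, theta) * gamma_char_fn(t, k2, theta)
    print(t, empirical_char_fn(sums, t), product, gamma_char_fn(t, k1 + k2, theta))
```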

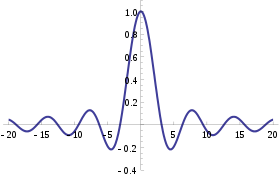

The characteristic function is closely related to the Fourier transform: the characteristic function of a probability density function p(x) is the complex conjugate of the continuous Fourier transform of p(x) (according to the usual convention; see continuous Fourier transform – other conventions):

$$\varphi_X(t) = \operatorname{E}\left[e^{itX}\right] = \int_{-\infty}^{\infty} e^{itx}\,p(x)\,dx = \overline{\left(\int_{-\infty}^{\infty} e^{-itx}\,p(x)\,dx\right)} = \overline{P(t)},$$

where P(t) denotes the continuous Fourier transform of the probability density function p(x).

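Likewise, p(x) may be recovered from φX(t) through the inverse Fourier transform:

$$p(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{itx}\,P(t)\,dt = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-itx}\,\varphi_X(t)\,dt.$$

Indeed, even when the random variable does not have a density, the characteristic function may be seen as the Fourier transform of the measure corresponding to the random variable.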
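The conjugate relationship between φX and the Fourier transform P(t) = ∫ e^{−itx} p(x) dx can be checked numerically. In this minimal sketch, the exponential density p(x) = λe^{−λx} (x ≥ 0) with λ = 1.5 is a hypothetical test case whose characteristic function is λ/(λ − it). The integral is truncated at x = 40, where the density is negligible, and evaluated with a midpoint rule. The helper names and grid size are illustrative.

```python
import cmath
import math

RATE = 1.5  # hypothetical rate parameter lambda


def fourier_transform(p, t, lo, hi, n=40_000):
    """P(t) = integral of exp(-i t x) p(x) dx over [lo, hi], midpoint rule."""
    h = (hi - lo) / n
    total = 0j
    for k in range(n):
        x = lo + (k + 0.5) * h
        total += cmath.exp(-1j * t * x) * p(x)
    return total * h


def exponential_density(x):
    return RATE * math.exp(-RATE * x) if x >= 0 else 0.0


def exponential_char_fn(t):
    # E[exp(i t X)] for X ~ Exponential(RATE).
    return RATE / (RATE - 1j * t)


for t in (0.5, 1.0, 4.0):
    P = fourier_transform(exponential_density, t, 0.0, 40.0)
    print(t, P.conjugate(), exponential_char_fn(t))
```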